Machine Learning

Keith Dillon

Fall 2018

Topic 1: Introduction and the Perceptron

Course Information

- Labs/Participation - 15%

- Homework - 15%

- Midterm - 20%

- Final Exam - 25%

- Project - 25%

The point of the exams is basically to make sure you didn't cheat on the labs and homework.

We will discuss the project later. Basically, it will be a more complete version of a lab project, including validation and a write-up, plus a poster session defending your analysis.

Topics (preliminary list)

- Introduction

- Python Tools

- Basic Linear Algebra Concepts & Jargon

- Linear Algebra & Dimensionality Reduction

- k Nearest Neighbor Classification

- Clustering

- Statistics

- Probability

- Regression

- Optimization

- Ensembles

- Bias, Variance, & Performance Metrics

- Support Vector Machines and Kernels

- Neural Networks

Note that some topics are shorter or longer than a single course meeting, so topic numbers do not map directly to class numbers.

Content Breakdown

I. Fundamentals

- Linear algebra

- Prob & Stat

II. Learning Methods

- Classification

- Regression

- Clustering

III. Usage & Theory

- Training

- Performance metrics

- Overfitting, estimator bias & variance

- Curse of dimensionality

Prerequisites

Programming skills are necessary. We will be using Python.

Vector geometry & calculus

Some exposure to Prob & Stat would be nice

Some Linear Algebra would be really nice

I will cover/review the bare essentials of Probability, Statistics, & Linear Algebra needed. You are responsible for figuring out the programming on your own.

Books

There is no required text. Most of the old favorites use Matlab. There is a vast supply of free resources online.

- “Learning From Data”, Abu-Mostafa

- “Deep Learning, Vol. 1: From Basics to Practice”, Glassner

- “An Introduction to Statistical Learning”, James, Witten, Hastie, and Tibshirani

- “The Elements of Statistical Learning”, 2nd ed., Hastie, Tibshirani, and Friedman

Outline

Basic Machine Learning introduction

Perceptron example

Python tools (depending on time)

```python
import numpy as np
from matplotlib import pyplot as plt
%matplotlib inline
```

"Essence of Machine Learning" - Abu-Mostafa

- A pattern exists

- Cannot pin it down mathematically

- We have data for it

- Alternatives: expertise, domain knowledge, science

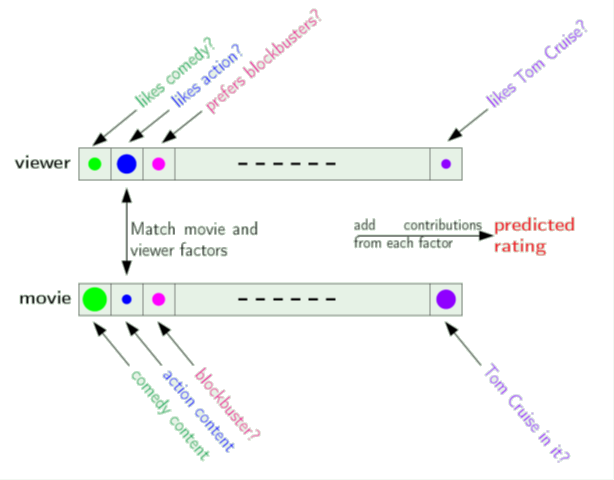

Example: Movie Rating Prediction

Compare user profiles to movie profiles.

Source: Yaser Abu-Mostafa, Learning From Data

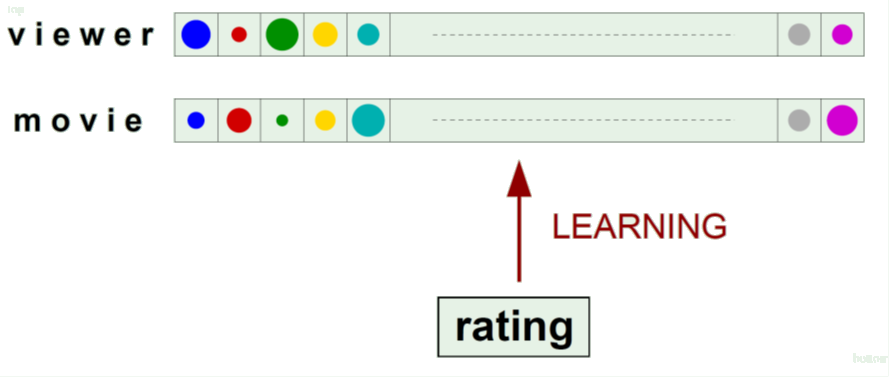

Movie Rating Prediction - the Learning Approach

Compare user profiles to movie profiles.

Source: Yaser Abu-Mostafa, Learning From Data

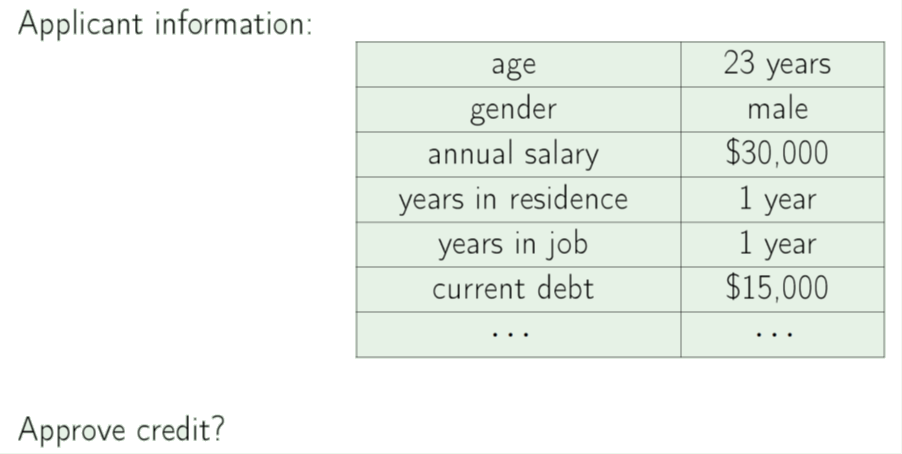

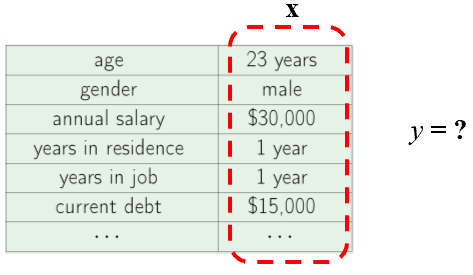

Example: Credit Scoring

Determine which applicants will be good credit customers.

Source: Yaser Abu-Mostafa, Learning From Data

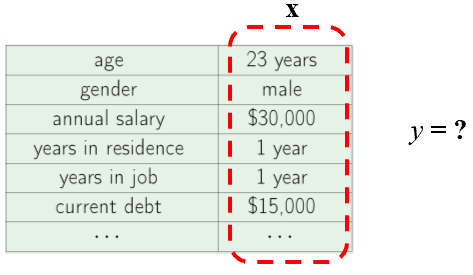

Formal Method

Input vector $\mathbf x$ $\leftarrow$ information we want the function to use

Output scalar $y$ $\leftarrow$ desired output of our learning machine

Data $(\mathbf x_1, y_1), (\mathbf x_2, y_2), \dots, (\mathbf x_N, y_N)$

Function $f(\mathbf x) \approx y$

Example Application: Credit Scoring

Determine which applicants will be good credit customers.

- Function $f(\mathbf x) \approx y$

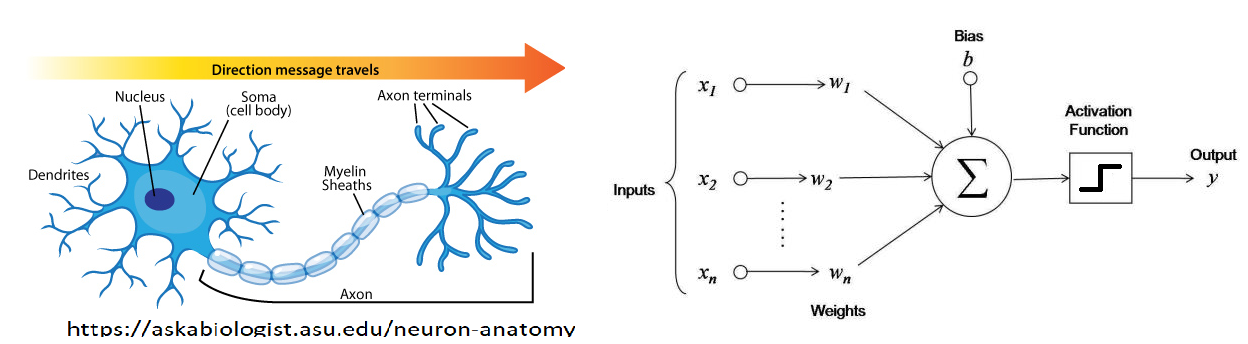

Example Method: Perceptron, an artificial neuron

\begin{align} f(\mathbf x) = f(x_1, x_2, ..., x_n) &= \begin{cases} +1, \text{ if } \sum_{i=1}^{n}w_i x_i + b > 0 \\ -1, \text{ if } \sum_{i=1}^{n}w_i x_i + b < 0 \end{cases} \\ &= \text{sign}\left\{ \sum_{i=1}^{n}w_i x_i + b \right\} = \text{sign}(\mathbf w^T \mathbf x + b) \end{align}
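A minimal NumPy sketch of the definition above, for illustration only: the function names `perceptron` and `perceptron_augmented` and the numbers are hypothetical. The second version appends a constant input of 1 to $\mathbf x$ so that $b$ becomes one more weight; this is the usual reason the model is often written compactly as $\text{sign}(\mathbf w^T \mathbf x)$.

```python
import numpy as np

def perceptron(w, b, x):
    """Perceptron output sign(w^T x + b): +1, -1, or 0 exactly on the boundary."""
    return np.sign(np.dot(w, x) + b)

def perceptron_augmented(w_aug, x):
    """Same model with the bias folded into the weights: w_aug = (w_1, ..., w_n, b)."""
    x_aug = np.append(x, 1.0)  # append a constant input of 1
    return np.sign(np.dot(w_aug, x_aug))

# Hypothetical numbers with 2 inputs
w = np.array([0.5, -1.0])
b = 0.25
x = np.array([2.0, 0.5])
print(perceptron(w, b, x))                       # 0.5*2 - 1*0.5 + 0.25 = 0.75, so 1.0
print(perceptron_augmented(np.append(w, b), x))  # same result: 1.0
```

Both functions compute the same thing; the augmented form just makes the bias look like any other weight, which simplifies the learning rule.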

Perceptron Applied to Credit Scoring

2D Example

\begin{align} f(\mathbf x) = \text{sign}\left\{ \sum_{i=1}^{n}w_i x_i + b \right\} = \text{sign}(w_1 x_1 + w_2 x_2 + ... + b) = \text{sign}(w_1 x_1 + w_2 x_2 + b) \approx y \end{align}
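The dividing line is where the argument of the sign function is zero. Solving for $x_1$ (assuming $w_1 \neq 0$) gives the income at which the line crosses a given debt level $x_2$; this is how the plotting code in this topic computes line endpoints at $x_2 = 100000$ and $x_2 = 0$:

\begin{align} w_1 x_1 + w_2 x_2 + b = 0 \quad \Longleftrightarrow \quad x_1 = \frac{-b - w_2 x_2}{w_1} \end{align}

For example, with $w_1 = 2$, $w_2 = -1$, $b = -30000$ this gives $x_1 = (30000 + x_2)/2$, so the endpoints are $(65000, 100000)$ and $(15000, 0)$.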

```python
# Toy credit data: each row is [income, debt]; label +1 = good customer, -1 = bad
X = np.array([
    [8000, 4000],
    [4000, 2000],
    [5000, 6000],
    [3000, 5000],
    [   0, 2000]
])
y = np.array([+1, +1, -1, -1, -1])

for i in np.arange(0, len(X)):
    x_i = X[i]
    y_i = y[i]
    #print(i, x_i, y_i)
    if y_i == -1:  # negative samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='_', linewidths=2, color='r')
    if y_i == 1:  # positive samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='+', linewidths=2, color='b')

plt.plot([1000, 10000], [1000, 6000])  # example hyperplane
plt.xlabel('income');
plt.ylabel('debt');
```

A little Python understanding check

- arrays

- loops

- if statements

- scatter plot

Learning

That dividing line is determined by our parameters, $w_1$, $w_2$, and $b$.

Can you find parameters that work?

Learning: determining parameters automatically using data
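One hand-picked answer, as a sketch: the example line plotted above passes through (1000, 1000) and (10000, 6000), which corresponds to $5x_1 - 9x_2 + 4000 = 0$. Taking $w_1 = 5$, $w_2 = -9$, $b = 4000$ (one choice among infinitely many, since any positive rescaling describes the same line) classifies all five toy applicants correctly.

```python
import numpy as np

# Same toy data as the 2D example: rows are [income, debt]
X = np.array([[8000, 4000], [4000, 2000], [5000, 6000], [3000, 5000], [0, 2000]])
y = np.array([+1, +1, -1, -1, -1])

# Hand-picked parameters: 5*x1 - 9*x2 + 4000 = 0 is the line through
# (1000, 1000) and (10000, 6000)
w = np.array([5, -9])
b = 4000

scores = X @ w + b            # w^T x + b for every sample at once
predictions = np.sign(scores)
print(scores)                 # [  8000   6000 -25000 -26000 -14000]
print(predictions)            # [ 1  1 -1 -1 -1]
print(np.all(predictions == y))
```

Finding such values by hand works in 2D with five points; learning exists to do this automatically when there are many points and many inputs.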

Discuss

Suppose you had a certain input profile $(x_1, x_2, \dots)$ for which you wanted a high ($y = +1$) output, guaranteed, ignoring everything else. How might you set the weights to accomplish it?

\begin{align} f(\mathbf x) = \text{sign}\left\{ \sum_{i=1}^{n}w_i x_i + b \right\} = \text{sign}(w_1 x_1 + w_2 x_2 + ... + b) = \text{sign}(w_1 x_1 + w_2 x_2 + b) \approx y \end{align}
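One possible answer, as a sketch (the profile values below are hypothetical): point the weight vector along the target profile, $\mathbf w = \mathbf x^*$, with $b = 0$. Then $\mathbf w^T \mathbf x^* = \|\mathbf x^*\|^2 > 0$ for any nonzero $\mathbf x^*$, so the output is $+1$. Nudging $\mathbf w$ toward $y_i \mathbf x_i$ is also the idea behind the perceptron update rule.

```python
import numpy as np

x_star = np.array([7000.0, 1500.0])  # hypothetical target profile (income, debt)

w = x_star.copy()  # point the weight vector along the target profile
b = 0.0

score = w @ x_star + b  # equals ||x_star||^2, which is > 0 for any nonzero x_star
print(np.isclose(score, np.sum(x_star**2)), np.sign(score))
```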

Perceptron Learning Algorithm

Choose a starting $\mathbf w$, $b$, and step size $\eta$.

For each $(\mathbf x_i, y_i)$: test whether $f(\mathbf w,b, \mathbf x_i) = y_i$.

- If $f(\mathbf w,b, \mathbf x_i) \neq y_i$, set $(\mathbf w,b) = (\mathbf w,b) + \eta \, y_i \times (\mathbf x_i,1)$
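The steps above can be sketched as a reusable function. This is an illustrative version, not the notebook's own code: the name `perceptron_learn`, the `max_epochs` cap, and the choice to repeat passes until a full pass makes no mistakes are assumptions (for linearly separable data the perceptron is guaranteed to reach such a pass after finitely many updates). The toy features are rescaled to thousands of dollars so that the bias update, which uses a constant input of 1, is not dwarfed by the feature values.

```python
import numpy as np

def perceptron_learn(X, y, eta=1.0, max_epochs=1000):
    """Perceptron learning sketch. X: (N, d) inputs, y: (N,) labels in {-1, +1}.
    Returns the weights w, bias b, and the number of passes used."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for epoch in range(max_epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            # np.sign(0) is 0, so a point exactly on the line also counts as a mistake
            if np.sign(w @ x_i + b) != y_i:
                # (w, b) <- (w, b) + eta * y_i * (x_i, 1)
                w = w + eta * y_i * x_i
                b = b + eta * y_i
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: every training point is correct
            return w, b, epoch + 1
    return w, b, max_epochs  # gave up (e.g., data not linearly separable)

# Toy credit data from the 2D example, rescaled to thousands of dollars
X_k = np.array([[8000, 4000], [4000, 2000], [5000, 6000], [3000, 5000], [0, 2000]]) / 1000.0
y = np.array([+1, +1, -1, -1, -1])

w, b, n_passes = perceptron_learn(X_k, y, eta=1.0)
print(w, b, n_passes)
print(np.sign(X_k @ w + b))  # matches y once learning has converged
```

Here `eta` and `max_epochs` are hyperparameters chosen by hand, while `w` and `b` are the parameters that learning produces.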

```python
# Synthetic credit data: N random applicants with income and debt in [0, 100000)
N = 100
X = np.random.rand(N, 2) * 100000

# "True" linear rule used to label the data: sign(2*income - debt - 30000)
w_true = np.array([2, -1])
b_true = -30000
f_true = lambda x: np.sign(w_true[0]*x[0] + w_true[1]*x[1] + b_true)

y = np.zeros(N)  # one label per sample
for i in np.arange(0, len(X)):
    x_i = X[i]
    y_i = f_true(x_i)
    y[i] = y_i
    #print(i, x_i, y_i)
    if y_i == -1:  # negative samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='_', linewidths=2, color='r')
    if y_i == 1:  # positive samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='+', linewidths=2, color='b')

plt.xlabel('income');
plt.ylabel('debt');
# Boundary drawn between its crossings of debt = 100000 and debt = 0
plt.plot([(-b_true - 100000*w_true[1])/w_true[0], -b_true/w_true[0]], [100000, 0]);  # true hyperplane
```

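```python
# Perceptron learning: start from w = 0, b = 0 and make one pass through the data
w = np.zeros(2)
b = 0.0
f = lambda w, b, x: np.sign(w[0]*x[0] + w[1]*x[1] + b)

acc = np.zeros(N)  # training accuracy measured before each step
print(w)
eta = 1e-5

for i in np.arange(0, len(X)):
    x_i = X[i]
    y_i = y[i].item()  # label as a plain Python float
    print(x_i, y_i, f(w, b, x_i), w, b)
    # Fraction of all training samples that the current (w, b) classifies correctly
    acc[i] = np.mean([f(w, b, X[j]) == y[j] for j in range(len(X))])
    if y_i != f(w, b, x_i):  # If not classified correctly, adjust the line to account for that point.
        # Bias step uses a constant input of 1e5 (roughly the feature scale) instead of 1
        b = b + 1e5*eta*y_i
        w[0] = w[0] + eta*y_i*x_i[0]
        w[1] = w[1] + eta*y_i*x_i[1]
```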

```python
# Replot the data with the true and the learned (estimated) boundaries
for i in np.arange(0, len(X)):
    x_i = X[i]
    y_i = f_true(x_i)
    if y_i == -1:  # negative samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='_', linewidths=2, color='r')
    if y_i == 1:  # positive samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='+', linewidths=2, color='b')

plt.xlabel('income');
plt.ylabel('debt');
plt.plot([(-b_true - 100000*w_true[1])/w_true[0], -b_true/w_true[0]], [100000, 0]);  # true hyperplane
plt.plot([(-b - 100000*w[1])/w[0], -b/w[0]], [100000, 0]);  # estimated hyperplane

plt.figure();
plt.plot(acc);  # estimated accuracy
plt.xlabel('iteration');
plt.ylabel('accuracy');
```

(Printed output abridged: for each of the 100 samples the loop prints x_i, y_i, the current prediction f(w, b, x_i), w, and b. In this run, w went from [0, 0] to about [1.25, -0.96] and b from 0 to -7.)

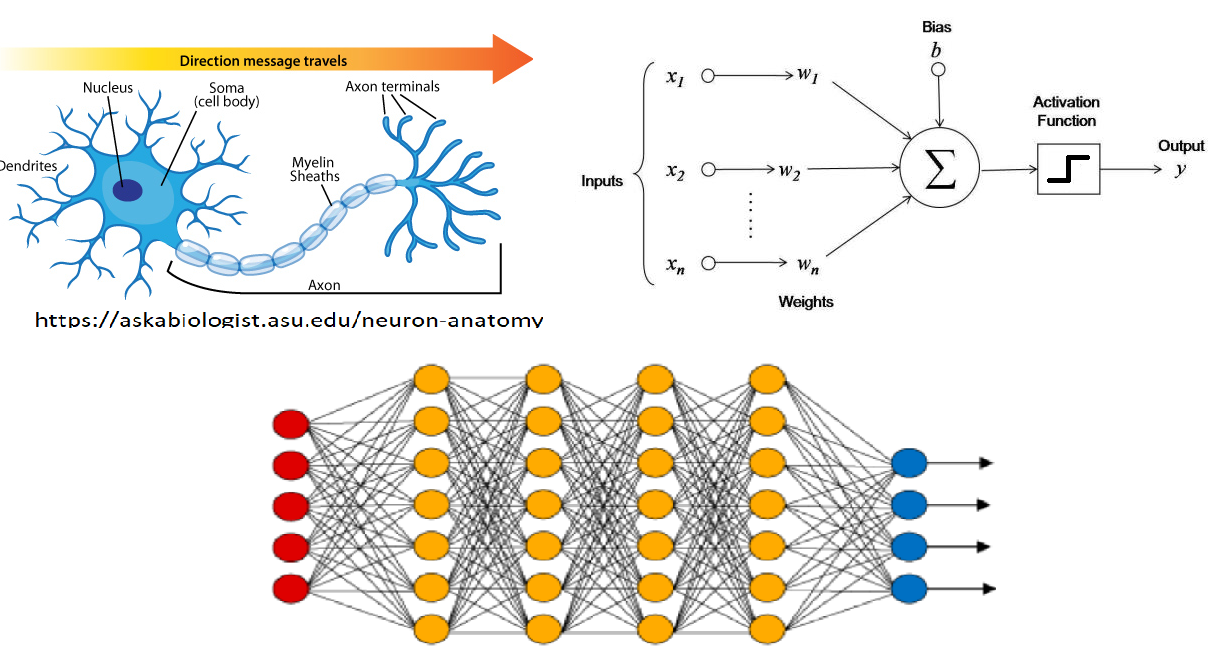

...So that's everything in a nutshell (almost)

Models, plus optimization algorithms to fit them to data, loosely like a brain structure that learns from experience.

This simple example extends all the way to cutting-edge deep learning techniques.

Two Main Steps

- Learning (supervised) - using data to optimize the function so that $f(\mathbf x_i) \approx y_i$

- Inference - applying $f(\mathbf x')$ to a new sample to estimate the unknown $y'$.

The quantities that learning optimizes are the parameters (for the perceptron, $\mathbf w$ and $b$).

Hyperparameters are settings chosen before learning rather than learned from the data: learning rate ($\eta$), number of parameters, number of iterations, ...
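A minimal sketch of the inference step, assuming a perceptron whose parameters have already been learned. The function name `predict`, the parameter values, and the new applicants are hypothetical; the point is that inference needs only the parameters, not the hyperparameters used to find them.

```python
import numpy as np

def predict(w, b, X_new):
    """Inference: apply an already-learned perceptron to new samples (one per row)."""
    return np.sign(X_new @ w + b)

# Parameters: produced by learning. These particular values are hypothetical.
w = np.array([5.0, -9.0])
b = 4000.0

# Hyperparameters such as eta or the number of passes only affected how
# w and b were found; inference does not use them.
X_new = np.array([[6000.0, 2500.0],   # hypothetical new applicants: (income, debt)
                  [2000.0, 4000.0]])
predictions = predict(w, b, X_new)
print(predictions)
```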

Where again...

Input vector $\mathbf x$ $\leftarrow$ information we want the function to use

Output scalar $y$ $\leftarrow$ desired output of our learning machine

Data $(\mathbf x_1, y_1), (\mathbf x_2, y_2), \dots, (\mathbf x_N, y_N)$

Function $f(\mathbf x) \approx y$

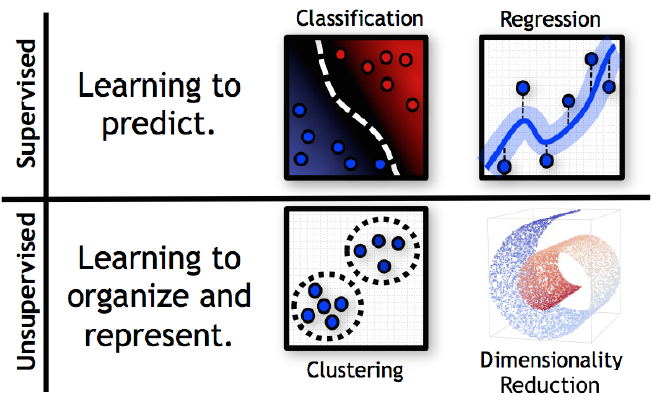

Machine Learning:

- Classification - determining what something is, given features

- Prediction - estimating a future value (e.g., a cost or sea level) from past data

- Clustering - finding subgroups

- Dimensionality Reduction - find the meaningful variations in the data

Machine Learning Origins (there are a lot)

Linear Algebra

Statistics

Inverse Problem Theory

Optimization

Pattern Recognition

Artificial Intelligence

Statistical Learning Theory

This Class

- Does not presume knowledge of or ability in linear algebra and statistics, but you will need to learn them.

- Will cover major methods and key issues at a practical level (e.g., how to invert matrices in Python).
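For example, a minimal NumPy sketch (the 2x2 system is made up): `np.linalg.inv` forms the inverse explicitly, while `np.linalg.solve` solves $A\mathbf x = \mathbf b$ directly, which is generally preferred numerically when the goal is the solution rather than the inverse itself.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
rhs = np.array([3.0, 5.0])

A_inv = np.linalg.inv(A)               # explicit inverse
x_via_inv = A_inv @ rhs                # x = A^{-1} b
x_via_solve = np.linalg.solve(A, rhs)  # solves A x = b without forming A^{-1}

print(np.allclose(A @ A_inv, np.eye(2)))  # True: A times its inverse is the identity
print(x_via_solve)                        # approximately [0.8 1.4]
```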

This Class, U of New Haven Style

Professional focus (as opposed to research)

Experiential (as opposed to passive note-taking)

Objectives:

- Be able to apply machine learning methods to data

- Be able to discuss alternative methods critically (e.g., on the job or in a job interview)

Approach

- Discussion of methods - homework, tests

- Weekly homework - your own work, please. The same questions will appear on tests.

- In-class (and beyond) labs

- Final project.

Labs will start off solving toy problems on simple datasets. As we proceed we will be using more realistic datasets based on class interests.