Principal component analysis¶

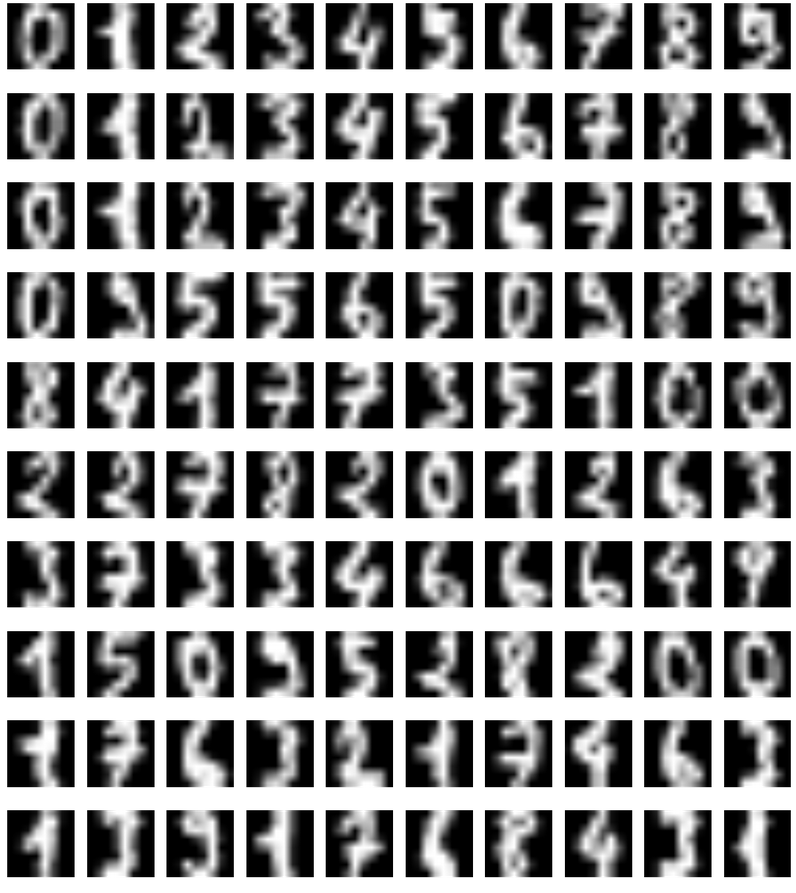

We will be performing a PCA on the classic MNIST dataset of handwritten digits.

Wikipedia page has good description of principal component analysis https://en.wikipedia.org/wiki/Principal_component_analysis

Import the dataset and use pylab to explore.

from sklearn.datasets import load_digits

digits = load_digits()

import matplotlib.pylab as pl

# %matplotlib inline

%pylab inline

pl.gray();

See load_digits documentation.

Using one figure with 100 subplots in 10-by-10 grid, display the first 100 images using pl.imshow.

To display only the images, use pl.xticks([]), pl.yticks([]) and pl.axis('off').

pl.imshow(digits.images[0])

pl.xticks([]), pl.yticks([]) and pl.axis('off')

pl.show()

PCA on subset¶

For simplicity we will look at the first 6 digits.

1- Load the first 6 digits of the MNIST digits dataset using scikit-learn.

import sklearn

six_digits = sklearn.datasets.load_digits(n_class=6).data

len(six_digits[0])

six_digits

scaler = sklearn.preprocessing.StandardScaler().fit_transform(six_digits)

scaler

3- Now that we have properly scaled images, we can apply the PCA transformation. Using scikit-learn's PCA, project our digits dataset into lower dimensional space. First try 10 components.

from sklearn.decomposition import PCA

pca = PCA(n_components = 10)

pca.fit_transform(scaler)

4- Due to the loss of information in projecting data into lower dimensional space, our transformation is never perfect. One way we can determine how well it worked is to plot the amount of explained variance. Using the function snippet below, plot the amount of explained variance of each of the principle components.

def scree_plot(num_components, pca, title=None):

ind = np.arange(num_components)

vals = pca.explained_variance_ratio_

plt.figure(figsize=(10, 6), dpi=250)

ax = plt.subplot(111)

ax.bar(ind, vals, 0.35,

color=[(0.949, 0.718, 0.004),

(0.898, 0.49, 0.016),

(0.863, 0, 0.188),

(0.694, 0, 0.345),

(0.486, 0.216, 0.541),

(0.204, 0.396, 0.667),

(0.035, 0.635, 0.459),

(0.486, 0.722, 0.329),

])

for i in range(num_components):

ax.annotate(r"%s%%" % ((str(vals[i]*100)[:4])), (ind[i]+0.2, vals[i]),

va="bottom", ha="center", fontsize=12)

ax.set_xticklabels(ind, fontsize=12)

ax.set_ylim(0, max(vals)+0.05)

ax.set_xlim(0-0.45, 8+0.45)

ax.xaxis.set_tick_params(width=0)

ax.yaxis.set_tick_params(width=2, length=12)

ax.set_xlabel("Principal Component", fontsize=12)

ax.set_ylabel("Variance Explained (%)", fontsize=12)

if title is not None:

plt.title(title, fontsize=16)

# from sklearn.decomposition import PCA

# from sklearn.preprocessing import StandardScaler

import numpy as np

%pylab inline

scree_plot(10, pca)

5- We need to pick an appropriate number of components to keep. Looking at the plot of explained variance, we are interested in finding the least number of principle components that explain the most variance. What is the optimal number of components to keep for the digits dataset?

6- Plot each of the eigen-vectors as images.

Another way to visualize our digits is to force a projection into 2-dimensional space in order to visualize the data on a 2-dimensional plane. The code snippet below will plot our digits projected into 2-dimensions on x-y axis.

# take first 4 components

cov = (scaler.T @ scaler) / len(scaler)

w, v = np.linalg.eig(cov)

# v[:, 0]

pl.imshow(v[:, 0].reshape(8,8)) # principal eigenvector

# plot projection

def plot_embedding(X, y, title=None):

x_min, x_max = np.min(X, 0), np.max(X, 0)

X = (X - x_min) / (x_max - x_min)

plt.figure(figsize=(10, 6), dpi=250)

ax = plt.subplot(111)

ax.axis('off')

ax.patch.set_visible(False)

for i in range(X.shape[0]):

plt.text(X[i, 0], X[i, 1], str(y[i]), color=plt.cm.Set1(y[i] / 10.), fontdict={'weight': 'bold', 'size': 12})

plt.xticks([]), plt.yticks([])

plt.ylim([-0.1,1.1])

plt.xlim([-0.1,1.1])

if title is not None:

plt.title(title, fontsize=16)

pca2 = PCA(n_components = 2)

proj = pca2.fit_transform(scaler)

plot_embedding(proj, load_digits(6).target)

7- Using the above method, project the digits dataset into 2-dimensions. Do you notice anything about the resulting projections? Does the plot remind you of anything? Looking at the results, which digits end up near each other in 2-dimensional space? Which digits have overlap in this new feature space?

There are 6 different categorical clusters. I noticed that the digits that look similar to each other ended up close to each other on the projection plot - for instance, 2 looks similar to 3, or at least moreso than comparisons among other digits. It reminds me of k-nearest neighbors clustering. Digits 5 and 3 end up near each other, and in fact, 2, 3, and 5 overlap in this new feature space.