Outline

- Homeworks 5 & 6

- Regression for Classification

- Activation functions

- Multilayer Perceptron and tensors

import numpy as np

from matplotlib import pyplot as plt

%matplotlib inline

Recall Regression $\mathbf y = f(\mathbf x;\beta_0, \boldsymbol\beta) + \boldsymbol\varepsilon$

Simple Linear Regression, $f(\mathbf x;\beta_0, \boldsymbol\beta) = \beta_0 + \boldsymbol\beta x$

Minimize $e^2 = \Vert \mathbf y - f(\mathbf x;\beta_0, \boldsymbol\beta) \Vert^2$ to find optimal $\boldsymbol\beta$ ~ LMS Loss

- Plan A: Guesstimate $\beta_0$, $\boldsymbol\beta$ by eye

- Plan B: Analytically find the minimizer using calculus

- Plan C: Use optimization - Newton's method, LARS, gradient descent...
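Plan B can be sketched with NumPy's least-squares solver. The synthetic data, the seed, and names such as `beta_true` are assumptions made up for illustration, not part of the lecture.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (assumption) for repeatability

# Synthetic 1-D data: y = beta_0 + beta * x + noise (values chosen for illustration)
beta0_true, beta_true = 1.0, 2.0
x = rng.uniform(0, 10, size=50)
y = beta0_true + beta_true * x + rng.normal(0, 0.5, size=50)

# Design matrix with a column of ones so the intercept is fit as well
A = np.column_stack([np.ones_like(x), x])

# Minimize ||y - A @ coef||^2; this solves the same problem as the normal equations
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
beta0_hat, beta_hat = coef
print(beta0_hat, beta_hat)
```

The estimates should land close to the values used to generate the data, up to the noise.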

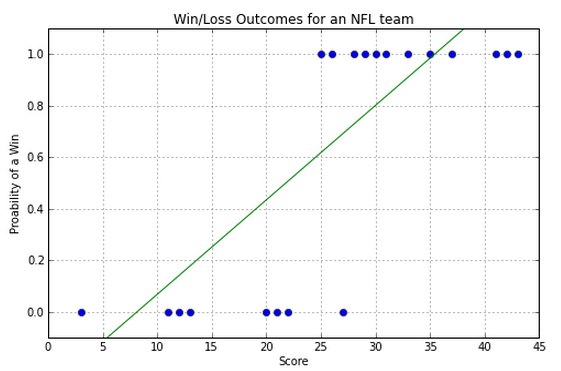

Regression for Classification

- Simple Linear Regression, $f(\mathbf x;\beta_0, \boldsymbol\beta) = \beta_0 + \boldsymbol\beta^T \mathbf x$

Minimize $e^2 = \Vert \mathbf y - f(\mathbf x;\beta_0, \boldsymbol\beta) \Vert^2$ to find optimal $\boldsymbol\beta$ ~ LMS Loss, a.k.a. L2, Least Squares

- Logistic Regression $f(\mathbf x;\beta_0, \boldsymbol\beta) = sigmoid(\beta_0 + \boldsymbol\beta^T \mathbf x)$

$$sigmoid(z) = \frac{1}{1+e^{-z}}$$

How might we fit this one?

Derivative of Sigmoid

$$ \frac{\partial}{\partial z} sigmoid(z) = \frac{\partial}{\partial z} \frac{1}{1+e^{-z}} = ... ? $$

$$ \frac{\partial}{\partial z} sigmoid(z) = sigmoid(z) (1 - sigmoid(z)) $$
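The intermediate steps follow from the chain rule. One way to fill them in:

$$ \begin{aligned} \frac{\partial}{\partial z} \frac{1}{1+e^{-z}} &= \frac{e^{-z}}{(1+e^{-z})^2} \\ &= \frac{1}{1+e^{-z}} \cdot \frac{e^{-z}}{1+e^{-z}} \\ &= sigmoid(z)\,\big(1 - sigmoid(z)\big) \end{aligned} $$

where the last line uses $\frac{e^{-z}}{1+e^{-z}} = 1 - \frac{1}{1+e^{-z}}$.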

Setting this equal to zero doesn't work, but with enough time and computing resources you can minimize anything, especially if you can compute the gradient...
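A minimal sketch of fitting the logistic model by gradient descent on the squared loss, using the sigmoid derivative above. The toy data, seed, step size, and iteration count are all assumptions picked for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)  # fixed seed (assumption)

# Toy 1-D problem (made up for illustration): label is 1 when x > 0.5
x = rng.uniform(-2, 3, size=200)
y = (x > 0.5).astype(float)

beta0, beta = 0.0, 0.0   # starting guess
eta = 0.5                # step size, chosen by trial and error

for _ in range(5000):
    p = sigmoid(beta0 + beta * x)            # model output
    # Chain rule: d/dz (y - p)^2 = -2 (y - p) * p * (1 - p)
    dz = -2.0 * (y - p) * p * (1.0 - p)
    beta0 -= eta * dz.mean()                 # gradient of the mean loss w.r.t. beta_0
    beta -= eta * (dz * x).mean()            # gradient of the mean loss w.r.t. beta

accuracy = np.mean((sigmoid(beta0 + beta * x) > 0.5) == (y == 1))
print(beta0, beta, accuracy)
```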

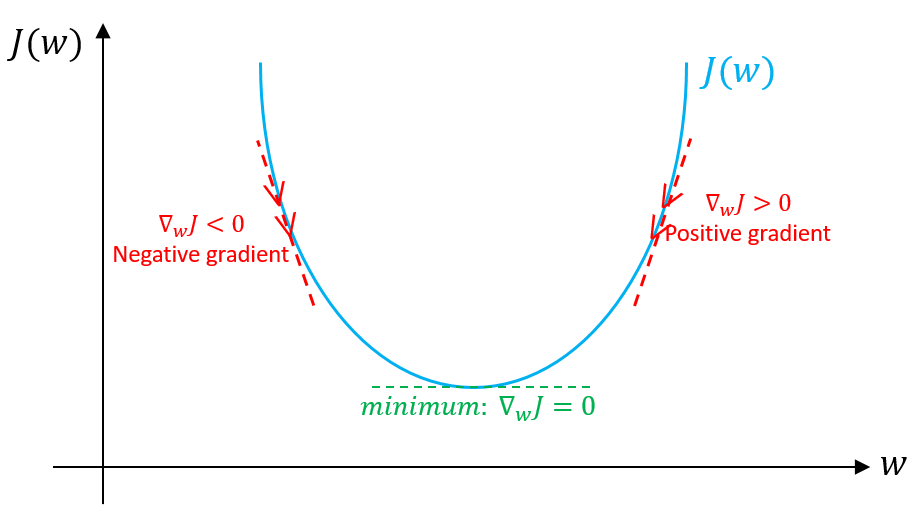

Gradient Descent

Went from too-trivial-to-cover in optimization classes, to the current state-of-the-art due to massive data sizes and parallel computing

Apply this to a toy problem.

What do we use for the step size?
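To get a feel for the step-size question, here is a small sketch on a one-dimensional quadratic. The objective, the starting point, and the list of step sizes are assumptions chosen so the effect is easy to see.

```python
def gradient_descent(grad, x0, eta, n_steps):
    """Plain gradient descent on a scalar: x <- x - eta * grad(x)."""
    x = float(x0)
    for _ in range(n_steps):
        x = x - eta * grad(x)
    return x

# Toy objective (an assumption for illustration): f(x) = 0.5 * a * x^2, minimum at x = 0
a = 4.0
grad = lambda x: a * x

# For this quadratic each step multiplies x by (1 - eta * a),
# so the iteration converges only when 0 < eta < 2 / a = 0.5.
for eta in [0.01, 0.1, 0.4, 0.6]:
    x_final = gradient_descent(grad, x0=1.0, eta=eta, n_steps=50)
    print(f"eta={eta:4.2f}  |x| after 50 steps = {abs(x_final):.3e}")
```

Too small a step converges slowly, a moderate step converges quickly, and a step beyond the stability limit diverges.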

Matrix Calculus

https://en.wikipedia.org/wiki/Matrix_calculus

https://www.math.uwaterloo.ca/~hwolkowi/matrixcookbook.pdf

"The Matrix Calculus You Need For Deep Learning" https://arxiv.org/abs/1802.01528

"This paper is an attempt to explain all the matrix calculus you need in order to understand the training of deep neural networks. We assume no math knowledge beyond what you learned in calculus 1...

Gradient Descent - improvements (or sometimes not...)

Stochastic

Acceleration

Adaptive step sizes

Averaging of steps

...

Stochastic Gradient Descent

Considered one of the key reasons for Deep Learning's success

Conventional gradient descent uses the entire dataset to compute each step.

Stochastic gradient descent uses a single randomly chosen sample to compute each step.

Batch (a.k.a. mini-batch) stochastic gradient descent uses a small random batch of samples to compute each step.

Epoch = a single pass through the entire dataset in batch steps.
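The three variants differ only in how many samples feed each step. A sketch on a synthetic linear-regression problem, where the data, seed, `batch_size`, and `eta` are illustrative assumptions:

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (assumption)

# Synthetic linear-regression data (made up for illustration)
N, d = 1000, 3
X = rng.normal(size=(N, d))
beta_true = np.array([1.0, -2.0, 0.5])
y = X @ beta_true + 0.1 * rng.normal(size=N)

beta = np.zeros(d)
eta = 0.05          # step size
batch_size = 32     # 1 gives plain SGD, N gives conventional gradient descent
n_epochs = 20

for epoch in range(n_epochs):
    order = rng.permutation(N)                 # reshuffle once per epoch
    for start in range(0, N, batch_size):
        idx = order[start:start + batch_size]  # indices of the current batch
        residual = X[idx] @ beta - y[idx]
        # Gradient of the mean squared error on this batch only
        grad = 2.0 * X[idx].T @ residual / len(idx)
        beta -= eta * grad

print(beta)
```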

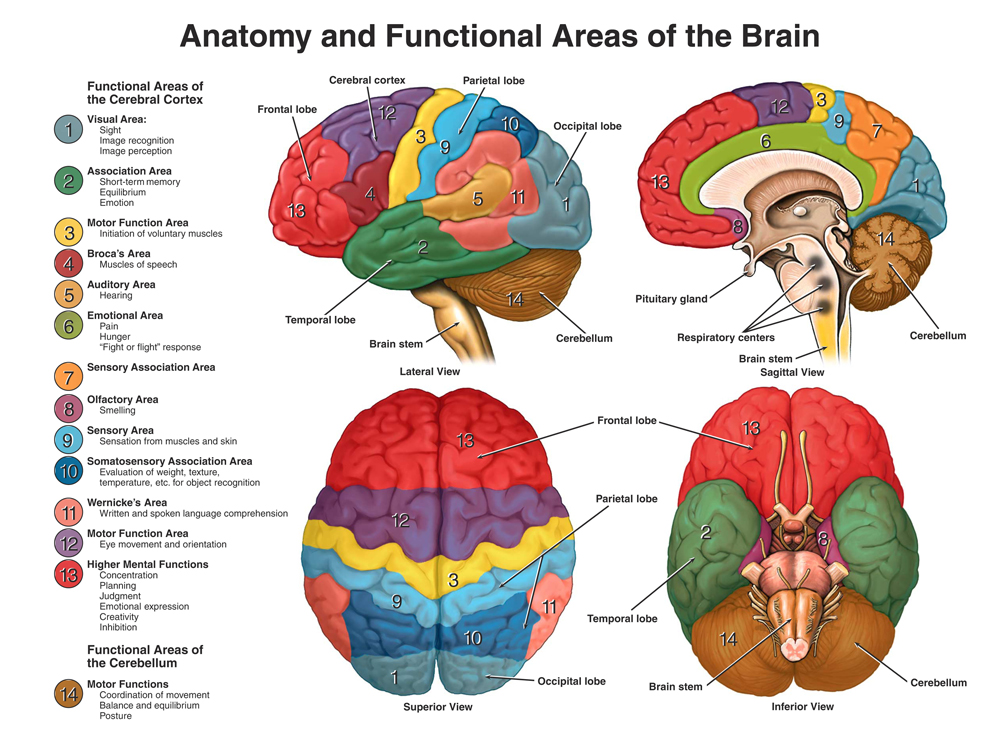

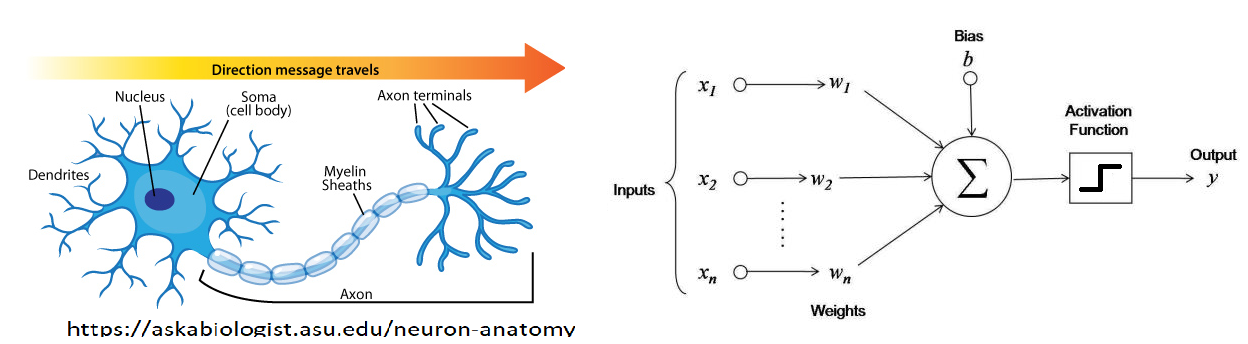

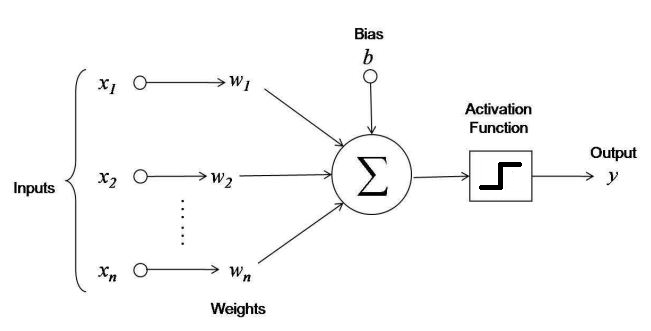

The Perceptron

\begin{align} f(\mathbf x) = f(x_1, x_2, ..., x_n) &= \begin{cases} +1, & \text{if } \sum_{i=1}^{n}w_i x_i + b > 0 \\ -1, & \text{if } \sum_{i=1}^{n}w_i x_i + b < 0 \end{cases} \\ &= \text{sign}\left\{ \sum_{i=1}^{n}w_i x_i + b \right\} = \text{sign}(\mathbf w^T \mathbf x + b) \end{align}

"Fire or not-fire" decision.

Look familiar??

Perceptron Learning Algorithm

Choose starting $\mathbf w$, $b$, and step size $\eta$.

For each $(\mathbf x_i, y_i)$: test $f(\mathbf w,b, \mathbf x_i) = y_i$?

- If $f(\mathbf w,b, \mathbf x_i) \neq y_i$, set $(\mathbf w,b) = (\mathbf w,b) + \eta \, y_i \times (\mathbf x_i,1)$

Can view this algorithm as gradient descent for the loss function:

$$ L(f(\mathbf x_i), y_i) = \max\big(0, -y_i \, (\mathbf w,b)^T (\mathbf x_i,1)\big) $$
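To see the connection, differentiate the loss with respect to the stacked parameters $(\mathbf w, b)$, writing $(\mathbf w,b)^T(\mathbf x_i,1) = \mathbf w^T \mathbf x_i + b$. A short derivation:

$$ \frac{\partial L}{\partial (\mathbf w,b)} = \begin{cases} -y_i \, (\mathbf x_i,1), & \text{if } y_i (\mathbf w^T \mathbf x_i + b) < 0 \text{ (misclassified)} \\ \mathbf 0, & \text{if } y_i (\mathbf w^T \mathbf x_i + b) > 0 \text{ (correct)} \end{cases} $$

A gradient descent step with step size $\eta$ on a misclassified sample is therefore

$$ (\mathbf w,b) \leftarrow (\mathbf w,b) - \eta \frac{\partial L}{\partial (\mathbf w,b)} = (\mathbf w,b) + \eta \, y_i \, (\mathbf x_i,1) $$

which matches the update rule, while correctly classified samples leave $(\mathbf w,b)$ unchanged.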

N = 100
X = np.random.rand(N,2)*100000  # N random points in [0, 1e5) x [0, 1e5)
w_true = np.array([2,-1])
b_true = -30000
f_true = lambda x: np.sign(w_true[0]*x[0]+w_true[1]*x[1] + b_true)  # true labeling rule

y = np.zeros([N,1])
for i in np.arange(0,len(X)):
    x_i = X[i]
    y_i = f_true(x_i)
    y[i] = y_i
    #print(i,x_i,y_i)
    if y_i==-1: # negative samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='_', linewidths=2,color='r')
    if y_i==1: # positive samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='+', linewidths=2,color='b')

plt.xlabel('income');
plt.ylabel('debt');
plt.plot([(-b_true-100000*w_true[1])/w_true[0],-b_true/w_true[0]],[100000,0]); # example hyperplane

w = np.zeros(2)
b = np.zeros(1)
f = lambda w,b,x: np.sign(w[0]*x[0]+w[1]*x[1] + b)
acc = np.zeros([N,1])
print(w)
eta = 1e-5

for i in np.arange(0,len(X)):
    x_i = X[i]
    y_i = y[i]
    print(x_i, y_i, f(w,b,x_i), w,b)
    # Agreement score for the current (w,b): mean of y*f over the dataset,
    # +1 when every point is classified correctly, -1 when none are.
    for j in np.arange(0,len(X)):
        acc[i] = acc[i] + y[j]*f(w,b,X[j])/len(X)
    if(y_i != f(w,b,x_i)): # If not classified correctly, adjust the line to account for that point.
        b = b + 1e5*eta*y_i  # bias step scaled by 1e5 to match the scale of the features
        w[0] = w[0] + eta*y_i*x_i[0]
        w[1] = w[1] + eta*y_i*x_i[1]

for i in np.arange(0,len(X)):
    x_i = X[i]
    y_i = f_true(x_i)
    if y_i==-1: # negative samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='_', linewidths=2,color='r')
    if y_i==1: # positive samples
        plt.scatter(x_i[0], x_i[1], s=120, marker='+', linewidths=2,color='b')

plt.xlabel('income');
plt.ylabel('debt');
plt.plot([(-b_true-100000*w_true[1])/w_true[0],-b_true/w_true[0]],[100000,0]); # true hyperplane
plt.plot([(-b-100000*w[1])/w[0],-b/w[0]],[100000,0]); # estimated hyperplane

plt.figure();
plt.plot(acc); # estimated accuracy
plt.xlabel('iteration');
plt.ylabel('accuracy');

[0. 0.] [13723.3608167 75277.41888745] [-1.] [0.] [0. 0.] [0.] [79362.77836352 85478.52815608] [1.] [-1.] [-0.13723361 -0.75277419] [-1.] [42615.55008504 26490.89973895] [1.] [1.] [0.65639418 0.10201109] [0.] [75422.28132833 58005.80424725] [1.] [1.] [0.65639418 0.10201109] [0.] [86139.04471236 56589.61165622] [1.] [1.] [0.65639418 0.10201109] [0.] [36593.98514949 80359.3878045 ] [-1.] [1.] [0.65639418 0.10201109] [0.] [13025.99274702 7021.72749747] [-1.] [-1.] [ 0.29045432 -0.70158279] [-1.] [52822.2833057 37376.0898795] [1.] [-1.] [ 0.29045432 -0.70158279] [-1.] [78005.72635067 5138.01294186] [1.] [1.] [ 0.81867716 -0.32782189] [0.] [60692.92958558 45509.89648218] [1.] [1.] [ 0.81867716 -0.32782189] [0.] [49219.34340121 44827.54862898] [1.] [1.] [ 0.81867716 -0.32782189] [0.] [88444.2670938 30129.40175661] [1.] [1.] [ 0.81867716 -0.32782189] [0.] [59816.66348373 97412.82802919] [-1.] [1.] [ 0.81867716 -0.32782189] [0.] [27757.6309493 87377.2217137] [-1.] [-1.] [ 0.22051052 -1.30195017] [-1.] [53299.7638845 39338.69775738] [1.] [-1.] [ 0.22051052 -1.30195017] [-1.] [73764.46253786 30054.85727575] [1.] [1.] [ 0.75350816 -0.90856319] [0.] [34217.42226001 8318.56584866] [1.] [1.] [ 0.75350816 -0.90856319] [0.] [49571.51557809 91852.04537399] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [81364.20298183 14993.83402587] [1.] [1.] [ 0.75350816 -0.90856319] [0.] [31126.57251819 19691.51517489] [1.] [1.] [ 0.75350816 -0.90856319] [0.] [37958.46466261 65184.80555997] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [61652.44945585 97694.0437717 ] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [ 2353.53670776 63712.96326183] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [38390.27688979 67921.66718479] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [40888.43687017 75892.68742295] [-1.] [-1.] [ 0.75350816 -0.90856319] [0.] [74738.24828729 94923.49756392] [1.] [-1.] [ 0.75350816 -0.90856319] [0.] [ 9699.68869477 73664.99853873] [-1.] [1.] [1.50089064 0.04067179] [1.] 
[83487.1018538 49242.26856885] [1.] [1.] [ 1.40389376 -0.6959782 ] [0.] [30961.85973415 35554.14179014] [-1.] [1.] [ 1.40389376 -0.6959782 ] [0.] [73801.49078199 85041.30266161] [1.] [-1.] [ 1.09427516 -1.05151962] [-1.] [20524.41328918 22371.37143855] [-1.] [1.] [ 1.83229007 -0.20110659] [0.] [44843.47711923 67446.21837938] [-1.] [1.] [ 1.62704593 -0.4248203 ] [-1.] [70333.99764748 39706.48233518] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [49088.77154908 51781.20128967] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [57354.7687967 27331.43588883] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [32920.32055562 80216.43003532] [-1.] [-1.] [ 1.17861116 -1.09928249] [-2.] [57718.91253994 18402.49828925] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [83172.52732677 55683.25317691] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [69658.42202392 63253.79157534] [1.] [1.] [ 1.17861116 -1.09928249] [-2.] [53431.44121138 73232.6279778 ] [1.] [-1.] [ 1.17861116 -1.09928249] [-2.] [19502.92134617 43142.92587155] [-1.] [1.] [ 1.71292558 -0.36695621] [-1.] [ 4157.1673262 31349.69886087] [-1.] [-1.] [ 1.51789636 -0.79838547] [-2.] [35062.45597676 9707.74369604] [1.] [1.] [ 1.51789636 -0.79838547] [-2.] [19210.60398393 23165.08033351] [-1.] [1.] [ 1.51789636 -0.79838547] [-2.] [35317.19011083 37907.85161598] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [ 5873.75738567 82482.85435453] [-1.] [-1.] [ 1.32579032 -1.03003627] [-3.] [86591.11201646 6925.60051819] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [19387.73066604 80317.5395235 ] [-1.] [-1.] [ 1.32579032 -1.03003627] [-3.] [61853.09058093 6202.16010372] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [88498.26041527 2970.07578258] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [55742.98654028 15644.75354266] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [58527.12098895 1858.78860821] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [98825.03006685 59477.34001537] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [ 9075.12505925 30048.74887253] [-1.] [-1.] 
[ 1.32579032 -1.03003627] [-3.] [60192.30231707 65308.74162548] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [72331.13944994 83858.81637454] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [ 8895.96411178 89573.47965724] [-1.] [-1.] [ 1.32579032 -1.03003627] [-3.] [94106.53807945 67794.25375434] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [ 4031.12246035 79965.21164046] [-1.] [-1.] [ 1.32579032 -1.03003627] [-3.] [99693.20948241 94374.28902679] [1.] [1.] [ 1.32579032 -1.03003627] [-3.] [59705.06869844 87982.66642082] [1.] [-1.] [ 1.32579032 -1.03003627] [-3.] [30929.35049867 20759.01233207] [1.] [1.] [ 1.92284101 -0.15020961] [-2.] [76577.20290689 961.89542771] [1.] [1.] [ 1.92284101 -0.15020961] [-2.] [9381.54132735 6284.61231639] [-1.] [1.] [ 1.92284101 -0.15020961] [-2.] [44111.1504127 4665.94718565] [1.] [1.] [ 1.8290256 -0.21305573] [-3.] [ 4415.25574087 50387.96887058] [-1.] [-1.] [ 1.8290256 -0.21305573] [-3.] [32338.16213939 59709.21569003] [-1.] [1.] [ 1.8290256 -0.21305573] [-3.] [18876.10497619 59518.81868499] [-1.] [-1.] [ 1.50564397 -0.81014789] [-4.] [95736.14785683 98188.97578933] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [27975.02573678 77868.3064094 ] [-1.] [-1.] [ 1.50564397 -0.81014789] [-4.] [17729.5289688 92109.84849566] [-1.] [-1.] [ 1.50564397 -0.81014789] [-4.] [36521.75903011 16143.07501605] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [57956.62100778 2017.20219494] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [21518.23402594 76270.73374172] [-1.] [-1.] [ 1.50564397 -0.81014789] [-4.] [66488.83305308 76694.95370586] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [47307.36301154 6175.95316908] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [75962.38844011 56610.69385111] [1.] [1.] [ 1.50564397 -0.81014789] [-4.] [19565.89807481 19874.30880842] [-1.] [1.] [ 1.50564397 -0.81014789] [-4.] [71368.1121729 38362.40729647] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [90982.68708548 99543.5872423 ] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [22481.59011456 80831.69977071] [-1.] 
[-1.] [ 1.30998499 -1.00889097] [-5.] [93975.72173309 84935.1293092 ] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [94484.52207988 62993.39865061] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [78115.87246557 64983.01978696] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [66875.20230037 81431.72135846] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [33039.3918795 10640.36583136] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [34468.11822633 85492.85435176] [-1.] [-1.] [ 1.30998499 -1.00889097] [-5.] [83461.02513346 93381.59532689] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [85986.65211849 75795.30611726] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [56623.38917932 85197.8934976 ] [-1.] [-1.] [ 1.30998499 -1.00889097] [-5.] [63374.2038784 32578.61474986] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [79833.34897622 42847.62367994] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [82492.01307169 27886.60685383] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [86314.2080203 69154.34509246] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [58203.15538488 47349.96465853] [1.] [1.] [ 1.30998499 -1.00889097] [-5.] [16077.98754892 9795.51233416] [-1.] [1.] [ 1.30998499 -1.00889097] [-5.] [74392.34819441 60298.44181584] [1.] [1.] [ 1.14920512 -1.1068461 ] [-6.] [67533.70105419 99472.64237439] [1.] [-1.] [ 1.14920512 -1.1068461 ] [-6.] [43789.54160642 55968.35939394] [1.] [1.] [ 1.82454213 -0.11211967] [-5.] [52317.62171692 73244.36397925] [1.] [1.] [ 1.82454213 -0.11211967] [-5.]

Activation Functions

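The key nonlinearity that Artificial Neural Networks need in order to do anything interesting.

Consider what happens in a network of neurons with no activation function: a composition of linear maps is itself linear, so the whole network is no more expressive than a single layer.

Note that these are scalar functions of scalar inputs: first compute the inner product, then apply the function.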
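A quick numerical check of what happens without an activation function. The layer sizes, random weights, and seed below are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (assumption)

# Two layers with no activation: y = W2 @ (W1 @ x + b1) + b2 (sizes are arbitrary)
W1, b1 = rng.normal(size=(5, 3)), rng.normal(size=5)
W2, b2 = rng.normal(size=(2, 5)), rng.normal(size=2)
x = rng.normal(size=3)

two_layer = W2 @ (W1 @ x + b1) + b2

# The same map written as a single linear layer
W, b = W2 @ W1, W2 @ b1 + b2
one_layer = W @ x + b

print(np.allclose(two_layer, one_layer))  # True: the stack collapses to one linear layer

# With a nonlinearity between the layers the two are in general no longer equal
relu = lambda z: np.maximum(0, z)
with_activation = W2 @ relu(W1 @ x + b1) + b2
print(np.allclose(with_activation, one_layer))
```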

Activation Functions - Step function

First big idea, from the 1950s.

Essentially the same as the sign function.

$$ f(\mathbf x) = \text{sign}(\mathbf w^T \mathbf x) $$

Its gradient is zero wherever it is defined, so there is no good way to train big networks made of these, and this function isn't used.

x = np.arange(-10, 10, 0.1)

plt.plot(x, np.sign(x), linewidth=3);

plt.xlabel('x');

plt.ylabel('sign(x)');

Activation Functions - Sigmoid

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

The next popular option to come along. Because it is smooth, networks built from it can be optimized using gradients.

x = np.arange(-10, 10, 0.1)

plt.plot(x, 1 / (1 + np.exp(-x)), linewidth=3);

plt.xlabel('x');

plt.ylabel('sigma(x)');

Activation Functions - Rectified Linear Unit "ReLU"

$$ \text{ReLU}(x) = \alpha \max(0,x)= \begin{cases} 0, & \text{if } x \le 0 \\ \alpha x, & \text{if } x > 0 \end{cases} $$

with slope $\alpha > 0$ (the standard ReLU uses $\alpha = 1$).

The activation function that was popular when Deep Learning became famous.

Not smooth, but the gradient is still simple to compute: $0$ for $x < 0$ and $\alpha$ for $x > 0$.

Variants such as "Leaky ReLU" use a small nonzero slope for $x < 0$, so that units with negative input still receive a gradient.

x = np.arange(-10, 10, 0.1)

plt.plot(x, np.maximum(0, x), linewidth=3);

plt.xlabel('x');

plt.ylabel('ReLU(x)');
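A sketch of ReLU (with $\alpha = 1$) and Leaky ReLU together with their gradients. The negative-side slope of 0.01 and the choice of gradient 0 at $x = 0$ are common conventions assumed here, not something the slides specify.

```python
import numpy as np

def relu(x):
    return np.maximum(0, x)

def relu_grad(x):
    # Derivative is 0 for x < 0 and 1 for x > 0; at x = 0 we pick 0 by convention
    return (x > 0).astype(float)

def leaky_relu(x, slope=0.01):
    # slope is the small negative-side slope (0.01 is an assumed default)
    return np.where(x > 0, x, slope * x)

def leaky_relu_grad(x, slope=0.01):
    # Never exactly zero, so units with negative input still get updated
    return np.where(x > 0, 1.0, slope)

x = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(relu(x), relu_grad(x))
print(leaky_relu(x), leaky_relu_grad(x))
```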

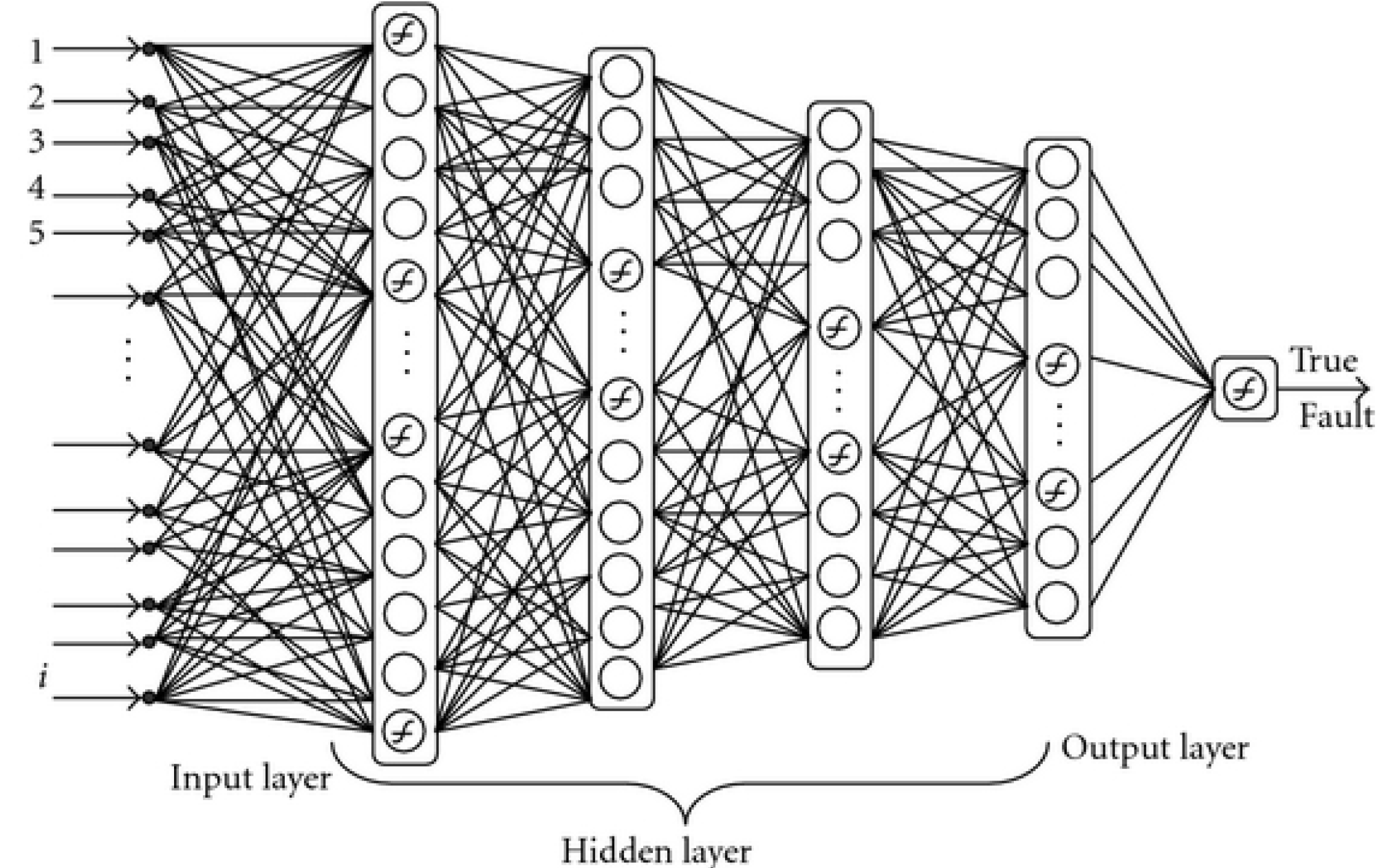

Multilayer (Artificial) Neural Networks - "Feed forward"

How do we even decide which neurons to connect, and where?

Tensor Perspective

Input data $\mathbf x$ can be viewed as vectors, matrices, or beyond... generally called "tensors"

Consider a layer with multiple outputs (e.g. multi-class classifier)

$$ \begin{aligned} f_1(\mathbf x) &= \hat{y}_1 \\ f_2(\mathbf x) &= \hat{y}_2 \\ &\;\;\vdots \end{aligned} $$

Layers themselves viewed as functions on tensors.

$$ "\mathbf f(\mathbf x)" = \hat{\mathbf y} $$

Multiple Layers in Tensor perspective

Two-layer network as concatenated single-layer networks:

$$ \begin{aligned} \mathbf f^{(1)}(\mathbf x) &= \hat{\mathbf y}^{(1)} \\ \mathbf f^{(2)}(\mathbf x) &= \hat{\mathbf y}^{(2)} \\ &\;\;\vdots \end{aligned} $$

Multilayer network as composition of tensor functions:

$$ \mathbf f^{(2)}\big(\mathbf f^{(1)}(\mathbf x) \big) = \hat{\mathbf y}^{(2)} $$

Just another model to fit: $$ f_{(entire\_network)}(\mathbf x) = \hat{y} $$

Need to take care that input and output sizes match

Optimize a big Loss function of everything
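The composition view can be sketched directly in NumPy. The helper `dense`, the layer sizes, and the random weights are hypothetical choices for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed (assumption)

def dense(W, b, activation):
    """Return a layer f(x) = activation(W @ x + b) as a function on vectors."""
    return lambda x: activation(W @ x + b)

relu = lambda z: np.maximum(0, z)
sigmoid = lambda z: 1.0 / (1.0 + np.exp(-z))

# Layer sizes (arbitrary): 4 inputs -> 8 hidden units -> 3 outputs.
# The output size of f1 (8) must equal the input size of f2 (8).
f1 = dense(rng.normal(size=(8, 4)), np.zeros(8), relu)
f2 = dense(rng.normal(size=(3, 8)), np.zeros(3), sigmoid)

def network(x):
    return f2(f1(x))   # the composition f2(f1(x))

x = rng.normal(size=4)
y_hat = network(x)
print(y_hat.shape)  # (3,)
```

If the sizes did not match, for example a `(3, 7)` weight matrix in the second layer, the `@` product would raise a shape error.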

Optimize Neural Nets

As always, we want to minimize a loss function: $L(f(\mathbf x),y)$.

Current state-of-the-art:

- Many loss functions possible (next class)

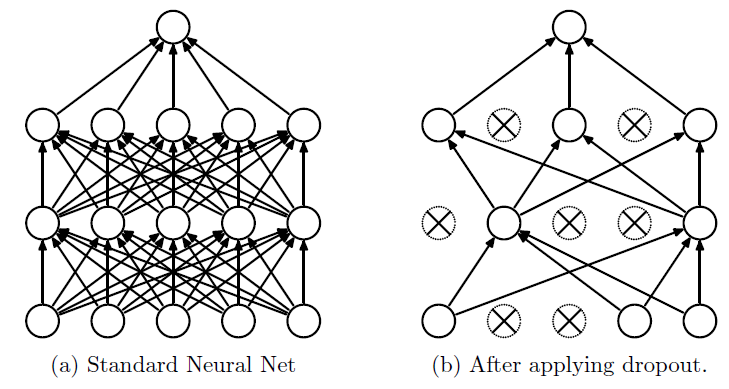

- Regularization (can change per-layer), L1, L2, Dropout, "early stopping"

- Minimize using gradient descent (Backpropagation algorithm -- next semester).

Regularization

L2 (a.k.a. weight decay) implies we do what?

L1?

Dropout...
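One way to explore the L2 and L1 questions is to look at what each penalty adds to a gradient step. The function `regularized_step` and the numbers below are hypothetical, and the penalties are assumed to be $\lambda \Vert \mathbf w \Vert_2^2$ and $\lambda \Vert \mathbf w \Vert_1$.

```python
import numpy as np

def regularized_step(w, grad_loss, eta, lam, penalty):
    """One gradient step on loss(w) + lam * penalty(w).

    penalty="l2" uses lam * ||w||^2, whose gradient is 2 * lam * w.
    penalty="l1" uses lam * ||w||_1, with subgradient lam * sign(w).
    """
    if penalty == "l2":
        grad_pen = 2.0 * lam * w
    elif penalty == "l1":
        grad_pen = lam * np.sign(w)
    else:
        raise ValueError("penalty must be 'l1' or 'l2'")
    return w - eta * (grad_loss + grad_pen)

w = np.array([1.0, -0.5, 0.0])
zero_grad = np.zeros_like(w)   # pretend the data loss is flat, to isolate the penalty

# L2: every weight is multiplied by (1 - 2 * eta * lam) = 0.9, i.e. it "decays"
w_l2 = regularized_step(w, zero_grad, eta=0.1, lam=0.5, penalty="l2")
# L1: every nonzero weight moves toward zero by the same amount eta * lam = 0.05
w_l1 = regularized_step(w, zero_grad, eta=0.1, lam=0.5, penalty="l1")
print(w_l2, w_l1)
```

L2 shrinks large weights proportionally, while L1 applies a constant pull that tends to drive small weights to zero.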

Dropout

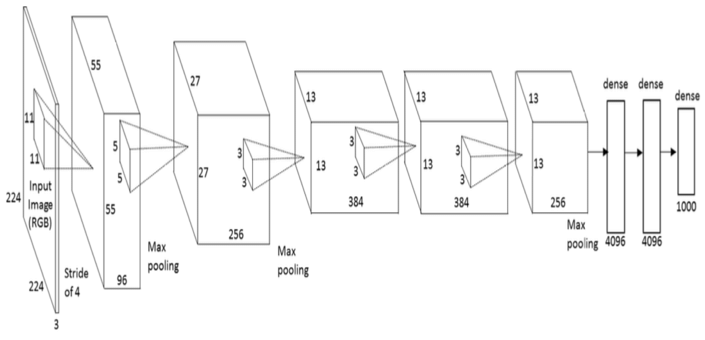
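A minimal sketch of dropout applied to one layer's activations. It uses the "inverted" variant, which rescales the surviving units during training; that choice, the function name `dropout`, and the drop probability are assumptions, not necessarily what the figure depicts.

```python
import numpy as np

def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout: zero each unit with probability p_drop during training
    and rescale the survivors so the expected activation is unchanged."""
    if not training or p_drop == 0.0:
        return activations
    keep = rng.random(activations.shape) >= p_drop   # True for units that survive
    return activations * keep / (1.0 - p_drop)

rng = np.random.default_rng(0)  # fixed seed (assumption)
h = np.ones(10000)

h_train = dropout(h, p_drop=0.5, rng=rng)
print((h_train == 0).mean(), h_train.mean())   # about half dropped, mean stays near 1

h_test = dropout(h, p_drop=0.5, rng=rng, training=False)
print(h_test.mean())                           # unchanged at test time
```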

Deep Architecture

AlexNet:

"Imagenet classification with deep convolutional neural networks" A Krizhevsky, I Sutskever, GE Hinton, NIPS 2012

Basically we try stuff using intuition and (educated?) guesswork. Special kinds of layers, based on biological inspiration from the vision system, are very popular due to successes in Machine Learning competitions.

Lab: Install TensorFlow & Keras

Ideally using Anaconda.

Or via command line:

conda create -n tensorflow_py36 python=3.6

conda activate tensorflow_py36

conda install tensorflow

conda install keras

conda install matplotlib numpy (and whatever else...)

Or from within Anaconda-Navigator using GUI.

Plan B: Cloud-based Notebooks

Google CoLab: https://colab.research.google.com/notebooks/welcome.ipynb

Kaggle Kernel: https://www.kaggle.com/kernels