Unstructured Data & Natural Language Processing

Keith Dillon

Spring 2020

Topic 2: Handling Text

This topic:¶

- Character encodings, unicode

- Regular expressions

- Tokenization, segmentation, & stemming

- Approximate sequence matching

Reading:

- https://www.oreilly.com/library/view/fluent-python/9781491946237/ch04.html

- J&M Chapter 2, "Regular Expressions, Text Normalization, and Edit Distance"

- "The Algorithm Design Manual," Chapter 8, Steven Skiena, 1997.

- http://www.langmead-lab.org/teaching-materials/

Text Processing Levels¶

- Character

- Words

- Sentences / multiple words

- Paragraphs / multiple sentences

- Document

- Corpus / multiple documents

Source: Taming Text, p 9

Character¶

- Character encodings

- Case (upper and lower)

- Punctuation

- Numbers

Words¶

- Word segmentation: dividing text into words. Fairly easy for English and other languages that use whitespace; much harder for languages like Chinese and Japanese.

- Stemming: the process of shortening a word to its base or root form.

- Abbreviations, acronyms, and spelling: all of these help in understanding words.
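Here is a minimal sketch of word segmentation and stemming for English. It assumes a simple regex tokenizer and a deliberately naive suffix stripper. `tokenize` and `naive_stem` are hypothetical helpers. They are not the Porter stemmer or any library's API.

```python
import re

def tokenize(text):
    # Treat runs of letters, digits and apostrophes as words; this is fine
    # for English but would fail for unsegmented scripts such as Chinese
    return re.findall(r"[A-Za-z0-9']+", text)

def naive_stem(word):
    # Hypothetical toy stemmer: strip the first matching suffix,
    # keeping at least three characters of the stem
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

tokens = tokenize("The dogs jumped over the boxes, jumping twice.")
stems = [naive_stem(t.lower()) for t in tokens]
print(tokens)
print(stems)  # ['the', 'dog', 'jump', 'over', 'the', 'box', 'jump', 'twice']
```

These toy rules already misfire: `naive_stem("goes")` returns "goe". Real stemmers such as Porter's use carefully ordered rule sets to limit such errors.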

Sentences¶

- Sentence boundary detection is a well-understood problem in English, but is still not perfect.

- Phrase detection: "San Francisco" and "quick red fox" are examples of phrases.

- Parsing: breaking sentences down into subject-verb and other relationships often yields useful information about words and their relationships to each other.

- Combining the definitions of words and their relationships to each other to determine the meaning of a sentence.

Paragraphs¶

At this level, processing becomes more difficult, because the goal is a deeper understanding of the author’s intent.

For example, algorithms for summarization often require being able to identify which sentences are more important than others.

Document¶

Similar to the paragraph level, understanding the meaning of a document often requires knowledge that goes beyond what’s contained in the actual document.

Authors often expect readers to have a certain background or possess certain reading skills.

Corpus¶

At this level, people want to quickly find items of interest as well as group related documents and read summaries of those documents.

Applications that can aggregate and organize facts and opinions and find relationships are particularly useful.

I. Character Encodings¶

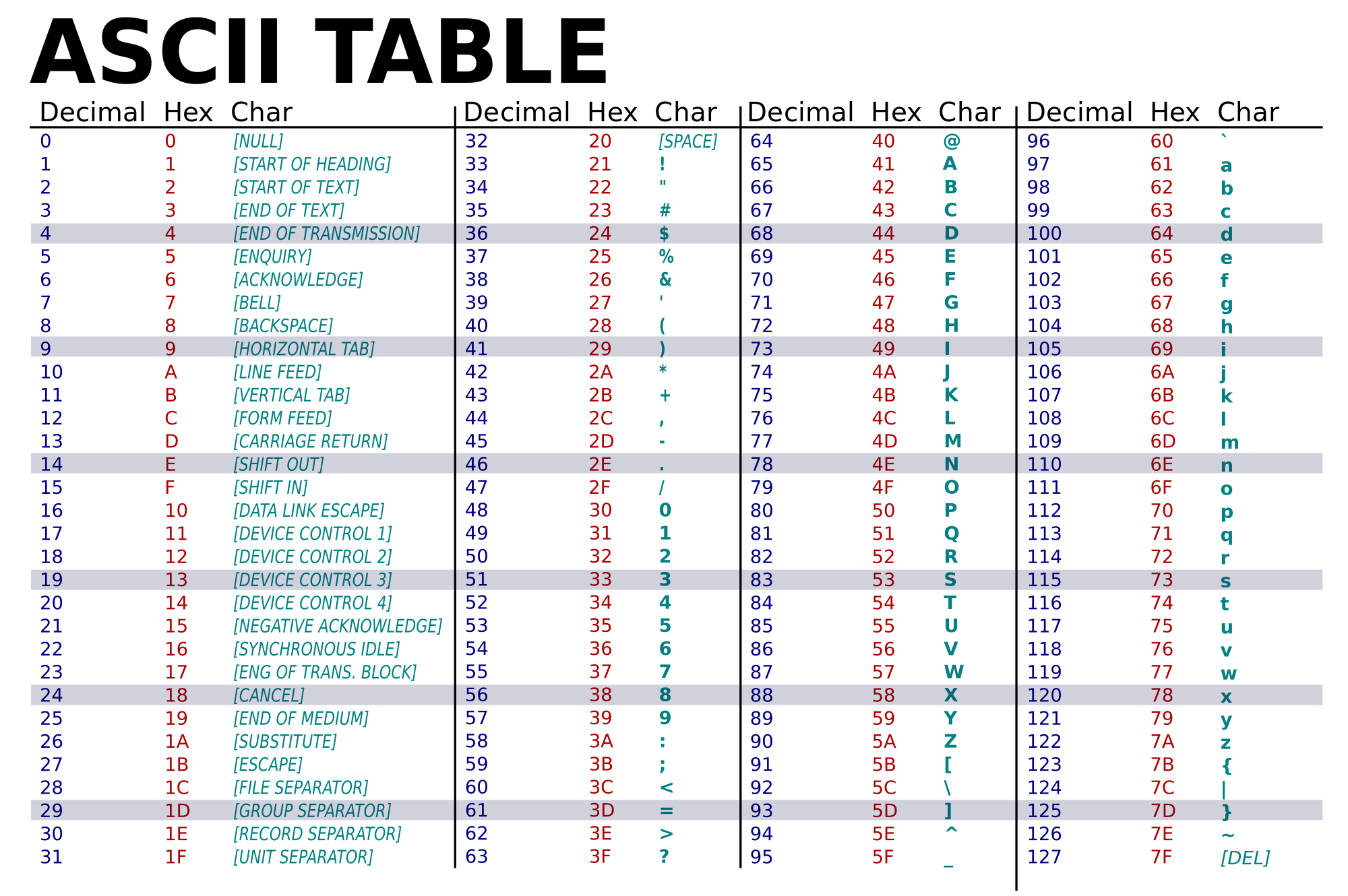

Character Encodings - map character to binary¶

- ASCII - 7 bits

- char - 8-bit - a-z,A-Z,0-9,...

- multi-byte encodings for other languages

ascii(38)

'38'

str(38)

'38'

chr(38)

'&'
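`ascii(38)` and `str(38)` both give '38' because 38 is an int, and both return its text representation. `chr` maps a number to a character, and `ord` maps a character back to its number. Here is a short sketch of these built-ins, plus encoding a string to bytes:

```python
# chr() and ord() are inverses: character <-> integer code
assert chr(38) == "&" and ord("&") == 38

# ascii() returns a printable representation, escaping non-ASCII characters
print(ascii(38))      # prints 38 (just the repr of the int)
print(ascii("café"))  # prints 'caf\xe9'

# ASCII only covers codes 0-127, so encoding "é" as ASCII fails
print("A".encode("ascii"))  # b'A'
try:
    "é".encode("ascii")
except UnicodeEncodeError as err:
    print("cannot encode:", err.reason)
```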

Unicode - we all hate it¶

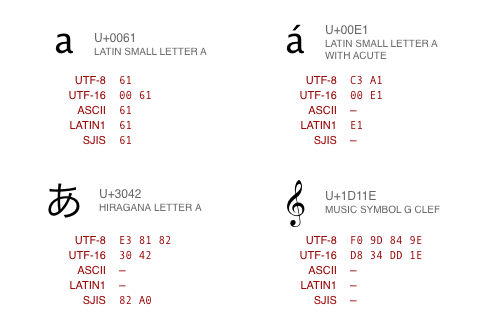

Unicode - "code points"¶

lookup table of unique numbers denoting every possible character

An encoding is still needed to map code points to bytes, and there are various standards:

- UTF-8 (the dominant standard) and UTF-16: variable-length

- UTF-32: fixed-length, 4 bytes per code point
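Comparing byte counts per character shows the difference between variable-length and fixed-length encodings. The sketch below uses the `-le` (little-endian) codec names so that Python does not prepend a byte-order mark. `encoded_sizes` is a hypothetical helper.

```python
def encoded_sizes(ch):
    # "-le" codecs are used so no byte-order mark is added to the output
    return {enc: len(ch.encode(enc)) for enc in ("utf-8", "utf-16-le", "utf-32-le")}

for ch in ["A", "é", "€", "😀"]:
    print(ch, hex(ord(ch)), encoded_sizes(ch))
```

UTF-8 uses 1 to 4 bytes and UTF-16 uses 2 or 4 bytes (a surrogate pair for "😀"). UTF-32 always uses 4 bytes.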

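chr(0x1F600)  # in Python 3, chr() accepts any code point from 0 to 0x10FFFF

'😀'

chr(2^30+1)  # careful: ^ is bitwise XOR, not power, so this is chr(2 ^ 31) == chr(29); chr(2**30 + 1) would raise ValueError

'\x1d'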

Mojibake¶

Incorrect, unreadable characters shown when computer software fails to show text correctly.

It is a result of text being decoded using an unintended character encoding.

Very common in Japanese websites, hence the name:

文字 (moji) "character" + 化け (bake) "transform"

Good news: it is systematic (find the right encoding), so it is often easy to fix.
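Because mojibake is systematic, you can often reproduce it and reverse it by hand. Here is a minimal sketch that assumes the text was UTF-8 but was decoded as Latin-1, which is a common case:

```python
original = "café"
raw = original.encode("utf-8")    # b'caf\xc3\xa9'
garbled = raw.decode("latin-1")   # wrong codec produces mojibake
print(garbled)                    # cafÃ©

# Undo it: re-encode with the wrong codec, then decode with the right one
repaired = garbled.encode("latin-1").decode("utf-8")
print(repaired)                   # café
```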

Example¶

# manually load text without specifying an encoding
with open('shakespeare_all.txt') as f:
    shakespeare = f.read()
shakespeare[:50]

'\ufeffTHE SONNETS\nby William Shakespeare\n\n\n\n '
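The leading '\ufeff' is a byte-order mark (BOM) that survived decoding. Python's `utf-8-sig` codec strips it when it is present. The sketch below uses made-up in-memory bytes that mimic the file's first line, not the file itself:

```python
import codecs

# Made-up bytes mimicking the start of the file: BOM + UTF-8 text
data = codecs.BOM_UTF8 + "THE SONNETS\n".encode("utf-8")

with_bom = data.decode("utf-8")         # the BOM survives as '\ufeff'
without_bom = data.decode("utf-8-sig")  # utf-8-sig strips a leading BOM
print(repr(with_bom), repr(without_bom))
```

For the file itself, the equivalent is `open('shakespeare_all.txt', encoding='utf-8-sig')`, assuming the file really is UTF-8.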

FTFY module¶

shakespeare[:50]

'\ufeffTHE SONNETS\nby William Shakespeare\n\n\n\n '

import ftfy

ftfy.fix_text(shakespeare[:50])

'THE SONNETS\nby William Shakespeare\n\n\n\n '

help(ftfy)

Help on package ftfy:

NAME

ftfy - ftfy: fixes text for you

DESCRIPTION

This is a module for making text less broken. See the `fix_text` function

for more information.

PACKAGE CONTENTS

bad_codecs (package)

badness

build_data

chardata

cli

fixes

formatting

FUNCTIONS

explain_unicode(text)

A utility method that's useful for debugging mysterious Unicode.

It breaks down a string, showing you for each codepoint its number in

hexadecimal, its glyph, its category in the Unicode standard, and its name

in the Unicode standard.

>>> explain_unicode('(╯°□°)╯︵ ┻━┻')

U+0028 ( [Ps] LEFT PARENTHESIS

U+256F ╯ [So] BOX DRAWINGS LIGHT ARC UP AND LEFT

U+00B0 ° [So] DEGREE SIGN

U+25A1 □ [So] WHITE SQUARE

U+00B0 ° [So] DEGREE SIGN

U+0029 ) [Pe] RIGHT PARENTHESIS

U+256F ╯ [So] BOX DRAWINGS LIGHT ARC UP AND LEFT

U+FE35 ︵ [Ps] PRESENTATION FORM FOR VERTICAL LEFT PARENTHESIS

U+0020 [Zs] SPACE

U+253B ┻ [So] BOX DRAWINGS HEAVY UP AND HORIZONTAL

U+2501 ━ [So] BOX DRAWINGS HEAVY HORIZONTAL

U+253B ┻ [So] BOX DRAWINGS HEAVY UP AND HORIZONTAL

fix_file(input_file, encoding=None, *, fix_entities='auto', remove_terminal_escapes=True, fix_encoding=True, fix_latin_ligatures=True, fix_character_width=True, uncurl_quotes=True, fix_line_breaks=True, fix_surrogates=True, remove_control_chars=True, remove_bom=True, normalization='NFC')

Fix text that is found in a file.

If the file is being read as Unicode text, use that. If it's being read as

bytes, then we hope an encoding was supplied. If not, unfortunately, we

have to guess what encoding it is. We'll try a few common encodings, but we

make no promises. See the `guess_bytes` function for how this is done.

The output is a stream of fixed lines of text.

fix_text(text, *, fix_entities='auto', remove_terminal_escapes=True, fix_encoding=True, fix_latin_ligatures=True, fix_character_width=True, uncurl_quotes=True, fix_line_breaks=True, fix_surrogates=True, remove_control_chars=True, remove_bom=True, normalization='NFC', max_decode_length=1000000)

Given Unicode text as input, fix inconsistencies and glitches in it,

such as mojibake.

Let's start with some examples:

>>> print(fix_text('Ã¼nicode'))

ünicode

>>> print(fix_text('Broken text&hellip; it&#x2019;s flubberific!',

... normalization='NFKC'))

Broken text... it's flubberific!

>>> print(fix_text('HTML entities &lt;3'))

HTML entities <3

>>> print(fix_text('<em>HTML entities &lt;3</em>'))

<em>HTML entities &lt;3</em>

>>> print(fix_text("¯\\_(ã\x83\x84)_/¯"))

¯\_(ツ)_/¯

>>> # This example string starts with a byte-order mark, even if

>>> # you can't see it on the Web.

>>> print(fix_text('\ufeffParty like\nit’s 1999!'))

Party like

it's 1999!

>>> print(fix_text('ＬＯＵＤ　ＮＯＩＳＥＳ'))

LOUD NOISES

>>> len(fix_text('ﬁ' * 100000))

200000

>>> len(fix_text(''))

0

Based on the options you provide, ftfy applies these steps in order:

- If `remove_terminal_escapes` is True, remove sequences of bytes that are

instructions for Unix terminals, such as the codes that make text appear

in different colors.

- If `fix_encoding` is True, look for common mistakes that come from

encoding or decoding Unicode text incorrectly, and fix them if they are

reasonably fixable. See `fixes.fix_encoding` for details.

- If `fix_entities` is True, replace HTML entities with their equivalent

characters. If it's "auto" (the default), then consider replacing HTML

entities, but don't do so in text where you have seen a pair of actual

angle brackets (that's probably actually HTML and you shouldn't mess

with the entities).

- If `uncurl_quotes` is True, replace various curly quotation marks with

plain-ASCII straight quotes.

- If `fix_latin_ligatures` is True, then ligatures made of Latin letters,

such as `ﬁ`, will be separated into individual letters. These ligatures

are usually not meaningful outside of font rendering, and often represent

copy-and-paste errors.

- If `fix_character_width` is True, half-width and full-width characters

will be replaced by their standard-width form.

- If `fix_line_breaks` is true, convert all line breaks to Unix style

(CRLF and CR line breaks become LF line breaks).

- If `fix_surrogates` is true, ensure that there are no UTF-16 surrogates

in the resulting string, by converting them to the correct characters

when they're appropriately paired, or replacing them with \ufffd

otherwise.

- If `remove_control_chars` is true, remove control characters that

are not suitable for use in text. This includes most of the ASCII control

characters, plus some Unicode controls such as the byte order mark

(U+FEFF). Useful control characters, such as Tab, Line Feed, and

bidirectional marks, are left as they are.

- If `remove_bom` is True, remove the Byte-Order Mark at the start of the

string if it exists. (This is largely redundant, because it's a special

case of `remove_control_characters`. This option will become deprecated

in a later version.)

- If `normalization` is not None, apply the specified form of Unicode

normalization, which can be one of 'NFC', 'NFKC', 'NFD', and 'NFKD'.

- The default normalization, NFC, combines characters and diacritics that

are written using separate code points, such as converting "e" plus an

acute accent modifier into "é", or converting "ka" (か) plus a dakuten

into the single character "ga" (が). Unicode can be converted to NFC

form without any change in its meaning.

- If you ask for NFKC normalization, it will apply additional

normalizations that can change the meanings of characters. For example,

ellipsis characters will be replaced with three periods, all ligatures

will be replaced with the individual characters that make them up,

and characters that differ in font style will be converted to the same

character.

- If anything was changed, repeat all the steps, so that the function is

idempotent. "&amp;amp;" will become "&", for example, not "&amp;".

`fix_text` will work one line at a time, with the possibility that some

lines are in different encodings, allowing it to fix text that has been

concatenated together from different sources.

When it encounters lines longer than `max_decode_length` (1 million

codepoints by default), it will not run the `fix_encoding` step, to avoid

unbounded slowdowns.

If you're certain that any decoding errors in the text would have affected

the entire text in the same way, and you don't mind operations that scale

with the length of the text, you can use `fix_text_segment` directly to

fix the whole string in one batch.

fix_text_segment(text, *, fix_entities='auto', remove_terminal_escapes=True, fix_encoding=True, fix_latin_ligatures=True, fix_character_width=True, uncurl_quotes=True, fix_line_breaks=True, fix_surrogates=True, remove_control_chars=True, remove_bom=True, normalization='NFC')

Apply fixes to text in a single chunk. This could be a line of text

within a larger run of `fix_text`, or it could be a larger amount

of text that you are certain is in a consistent encoding.

See `fix_text` for a description of the parameters.


guess_bytes(bstring)

NOTE: Using `guess_bytes` is not the recommended way of using ftfy. ftfy

is not designed to be an encoding detector.

In the unfortunate situation that you have some bytes in an unknown

encoding, ftfy can guess a reasonable strategy for decoding them, by trying

a few common encodings that can be distinguished from each other.

Unlike the rest of ftfy, this may not be accurate, and it may *create*

Unicode problems instead of solving them!

It doesn't try East Asian encodings at all, and if you have East Asian text

that you don't know how to decode, you are somewhat out of luck. East

Asian encodings require some serious statistics to distinguish from each

other, so we can't support them without decreasing the accuracy of ftfy.

If you don't know which encoding you have at all, I recommend

trying the 'chardet' module, and being appropriately skeptical about its

results.

The encodings we try here are:

- UTF-16 with a byte order mark, because a UTF-16 byte order mark looks

like nothing else

- UTF-8, because it's the global standard, which has been used by a

majority of the Web since 2008

- "utf-8-variants", because it's what people actually implement when they

think they're doing UTF-8

- MacRoman, because Microsoft Office thinks it's still a thing, and it

can be distinguished by its line breaks. (If there are no line breaks in

the string, though, you're out of luck.)

- "sloppy-windows-1252", the Latin-1-like encoding that is the most common

single-byte encoding

VERSION

5.5.1

FILE

/home/user01/anaconda3/envs/slides/lib/python3.7/site-packages/ftfy/__init__.py

What about the line breaks?

with open('shakespeare_all.txt') as f:
    shakespeare = f.read().splitlines()
shakespeare[:10]

['\ufeffTHE SONNETS', 'by William Shakespeare', '', '', '', ' 1', ' From fairest creatures we desire increase,', " That thereby beauty's rose might never die,", ' But as the riper should by time decease,', ' His tender heir might bear his memory:']

# Munging text

shakespeare = [ftfy.fix_text(line.strip()) for line in shakespeare if line]

shakespeare[:10]

['THE SONNETS', 'by William Shakespeare', '1', 'From fairest creatures we desire increase,', "That thereby beauty's rose might never die,", 'But as the riper should by time decease,', 'His tender heir might bear his memory:', 'But thou contracted to thine own bright eyes,', "Feed'st thy light's flame with self-substantial fuel,", 'Making a famine where abundance lies,']

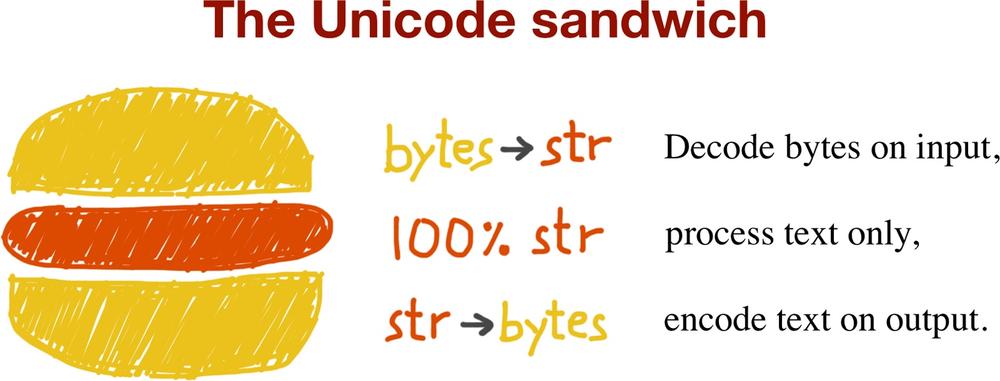

Unicode Sandwich¶

best practice for handling text

"bytes should be decoded to str as early as possible on input (e.g., when opening a file for reading). The “meat” of the sandwich is the business logic of your program, where text handling is done exclusively on str objects. You should never be encoding or decoding in the middle of other processing. On output, the str are encoded to bytes as late as possible. Most web frameworks work like that, and we rarely touch bytes when using them."

https://www.oreilly.com/library/view/fluent-python/9781491946237/ch04.html
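Here is a minimal sketch of the sandwich. It decodes at the input boundary, works only on `str` in the middle, and encodes at the output boundary. `normalize_file` is a hypothetical function, and the temporary files stand in for real input and output.

```python
import os
import tempfile

def normalize_file(in_path, out_path, encoding="utf-8"):
    # Bottom slice: decode bytes -> str as early as possible
    with open(in_path, encoding=encoding) as f:
        text = f.read()
    # The "meat": business logic operates only on str objects
    cleaned = " ".join(text.split()).upper()
    # Top slice: encode str -> bytes as late as possible
    with open(out_path, "w", encoding=encoding) as f:
        f.write(cleaned)
    return cleaned

# Usage with temporary files
with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "in.txt")
    dst = os.path.join(tmp, "out.txt")
    with open(src, "wb") as f:
        f.write("naïve  café\n".encode("utf-8"))
    result = normalize_file(src, dst)
    with open(dst, "rb") as f:
        out_bytes = f.read()

print(result, out_bytes)
```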

Summary¶

- Unicode is a necessary evil

- Always try to keep it Unicode

- Be explicit about encodings

- If you see mojibake, don't (╯°□°)╯︵ ┻━┻; use ftfy

II. String Processing and Regular Expressions¶

Strings¶

A sequence of characters (like a list, but immutable)

a = "Hello"

b = "World"

print(a+b)

print(a+' '+b+'.')

HelloWorld
Hello World.

from string import *

whos

Variable Type Data/Info

-------------------------------------------------

Formatter type <class 'string.Formatter'>

Template _TemplateMetaclass <class 'string.Template'>

ascii_letters str abcdefghijklmnopqrstuvwxy<...>BCDEFGHIJKLMNOPQRSTUVWXYZ

ascii_lowercase str abcdefghijklmnopqrstuvwxyz

ascii_uppercase str ABCDEFGHIJKLMNOPQRSTUVWXYZ

capwords function <function capwords at 0x10250eb70>

digits str 0123456789

hexdigits str 0123456789abcdefABCDEF

octdigits str 01234567

punctuation str !"#$%&'()*+,-./:;<=>?@[\]^_`{|}~
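These constants are handy for cleaning text. Here is a sketch that deletes ASCII punctuation with `str.maketrans` and `str.translate`. `strip_punctuation` is a hypothetical helper.

```python
import string

def strip_punctuation(text):
    # The third argument to str.maketrans lists characters to delete
    table = str.maketrans("", "", string.punctuation)
    return text.translate(table)

print(strip_punctuation("Hello, World! (It's 9:30.)"))  # Hello World Its 930
```

`string.punctuation` is ASCII-only, so a curly apostrophe such as ’ is left in place.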

Student Activity¶

Let's make a simple password generator function!

Your one-liner should return something like this:

'kZmuSUVeVC'

'mGEsuIfl91'

'FEFsWwAgLM'

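import random
import string

# Use a function; assigning to `random` would shadow the module and break the next call
def make_password(n=10):
    # join n characters drawn at random from letters and digits
    return ''.join(random.choice(string.ascii_letters + string.digits) for _ in range(n))

make_password()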
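The `random` module is fine for a classroom exercise. For real passwords, the Python documentation recommends the `secrets` module, because `random` is not cryptographically secure. Here is a sketch of the same one-liner using `secrets.choice`. `make_secure_password` is a hypothetical name.

```python
import secrets
import string

def make_secure_password(n=10):
    alphabet = string.ascii_letters + string.digits
    # secrets.choice draws from the operating system's secure random source
    return "".join(secrets.choice(alphabet) for _ in range(n))

print(make_secure_password())
```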

Regular Expressions ("regex")¶

Used in grep, awk, ed, perl, ...

Regular expression is a pattern matching language, the "RE language".

A Domain Specific Language (DSL): powerful, but limited in scope. Other DSLs include SQL and Markdown.

from re import *

whos

Variable Type Data/Info
-----------------------------------
A RegexFlag RegexFlag.ASCII
ASCII RegexFlag RegexFlag.ASCII
DOTALL RegexFlag RegexFlag.DOTALL
I RegexFlag RegexFlag.IGNORECASE
IGNORECASE RegexFlag RegexFlag.IGNORECASE
L RegexFlag RegexFlag.LOCALE
LOCALE RegexFlag RegexFlag.LOCALE
M RegexFlag RegexFlag.MULTILINE
MULTILINE RegexFlag RegexFlag.MULTILINE
Match type <class 're.Match'>
Pattern type <class 're.Pattern'>
S RegexFlag RegexFlag.DOTALL
U RegexFlag RegexFlag.UNICODE
UNICODE RegexFlag RegexFlag.UNICODE
VERBOSE RegexFlag RegexFlag.VERBOSE
X RegexFlag RegexFlag.VERBOSE
a str Hello
b str World
compile function <function compile at 0x7f35d3befea0>
error type <class 're.error'>
escape function <function escape at 0x7f35d3bf10d0>
findall function <function findall at 0x7f35d3befd90>
finditer function <function finditer at 0x7f35d3befe18>
fullmatch function <function fullmatch at 0x7f35d3befae8>
match function <function match at 0x7f35d3c10f28>
purge function <function purge at 0x7f35d3beff28>
search function <function search at 0x7f35d3befb70>
split function <function split at 0x7f35d3befd08>
sub function <function sub at 0x7f35d3befbf8>
subn function <function subn at 0x7f35d3befc80>
template function <function template at 0x7f35d3bf1048>

Regex string functions¶

- findall() - Returns a list containing all matches

- search() - Returns a Match object if there is a match anywhere in the string

- split() - Returns a list where the string has been split at each match

- sub() - Replaces one or many matches with a string

- match() - Returns a Match object only if the pattern matches at the start of the string
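Here is a quick sketch of each function on a made-up sentence. It shows in particular how `match()` differs from `search()`:

```python
import re

text = "The rain in Spain stays mainly in the plain"

all_ai = re.findall(r"ai", text)               # ['ai', 'ai', 'ai', 'ai']
first = re.search(r"Spain", text)              # Match object, span (12, 17)
parts = re.split(r"\s", text, maxsplit=2)      # ['The', 'rain', 'in Spain stays mainly in the plain']
replaced = re.sub(r"ai", "AI", text, count=1)  # 'The rAIn in Spain stays mainly in the plain'
at_start = re.match(r"rain", text)             # None: match() only tries position 0
anywhere = re.search(r"rain", text)            # finds "rain" at span (4, 8)

print(all_ai, first.span(), parts, replaced, at_start, anywhere.span(), sep="\n")
```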

Motivating example¶

Write a regex to match common misspellings of calendar: "calendar", "calandar", or "celender"

# Let's explore how to do this

# Patterns to match

dat0 = ["calendar", "calandar", "celender"]

# Patterns to not match

dat1 = ["foo", "cal", "calli", "calaaaandar"]

# Interleave them (zip stops at the shorter list, so "calaaaandar" is dropped)

string = " ".join([item

for pair in zip(dat0, dat1)

for item in pair])

string

'calendar foo calandar cal celender calli'

# You could match them with literals

literal1 = 'calendar'

literal2 = 'calandar'

literal3 = 'celender'

patterns = "|".join([literal1, literal2, literal3])

patterns

'calendar|calandar|celender'

import re

print(re.findall(patterns, string))

['calendar', 'calandar', 'celender']

... a better way¶

Let's write it with regex language

# A little bit of meta-programming (strings all the way down!)

sub_pattern = '[ae]'

pattern2 = sub_pattern.join(["c","l","nd","r"])

print(pattern2)

print(string)

re.findall(pattern2, string)

c[ae]l[ae]nd[ae]r
calendar foo calandar cal celender calli

['calendar', 'calandar', 'celender']
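The interleaved string never contained "calaaaandar", so it is worth checking every negative directly. Here is a sketch that uses `re.fullmatch`, which succeeds only if the whole word fits the pattern, and `\b` word boundaries, which stop `findall` from matching inside longer words:

```python
import re

pattern2 = "c[ae]l[ae]nd[ae]r"
dat0 = ["calendar", "calandar", "celender"]    # should match
dat1 = ["foo", "cal", "calli", "calaaaandar"]  # should not match

# fullmatch() succeeds only if the whole word fits the pattern
matched = [w for w in dat0 + dat1 if re.fullmatch(pattern2, w)]
print(matched)  # ['calendar', 'calandar', 'celender']

# findall() also finds the pattern inside longer words; \b anchors it to word edges
inside = re.findall(pattern2, "calendars calendar")
whole = re.findall(r"\b" + pattern2 + r"\b", "calendars calendar")
print(inside, whole)  # ['calendar', 'calendar'] ['calendar']
```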

Regex Terms¶

- target string: This term describes the string that we will be searching, that is, the string in which we want to find our match or search pattern.

- search expression: The pattern we use to find what we want. Most commonly called the regular expression.

- literal: A literal is any character we use in a search or matching expression. For example, to find "ind" in "windows", "ind" is a literal string: each character plays a part in the search, and it is literally the string we want to find.

- metacharacter: A metacharacter is one or more special characters that have a unique meaning and are NOT used as literals in the search expression. For example "." means any character.

- escape sequence: An escape sequence is a way of indicating that we want to use one of our metacharacters as a literal.

function(search_expression, target_string)¶

Pick the function based on your goal (find all matches, replace matches, find the first match, ...).

Form the search expression to account for the variations in the target that we allow, e.g., possible misspellings.

Metacharacters¶

special characters that have a unique meaning
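Here is a small sketch of a few common metacharacters in action. The sample strings are made up for illustration.

```python
import re

# (pattern, sample target) pairs
examples = [
    (r"c.t", "cat cot c9t ct"),   # .  any character except a newline
    (r"^The", "The end. The"),    # ^  start of the string
    (r"end$", "the end"),         # $  end of the string
    (r"ca*t", "ct cat caaat"),    # *  zero or more of the previous item
    (r"cat|dog", "cat dog cow"),  # |  either alternative
]
results = {pattern: re.findall(pattern, target) for pattern, target in examples}
for pattern, found in results.items():
    print(pattern, "->", found)
```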

Escape sequence "\"¶

A way of indicating that we want to use one of our metacharacters as a literal.

In a regular expression, an escape sequence is the metacharacter \ (backslash) placed in front of the metacharacter that we want to use as a literal.

Ex: to find \file in the target string c:\file, we need the search expression \\file: the one backslash we are searching for as a literal is preceded by an escaping backslash.
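In Python there are two layers of escaping: one for Python string literals and one for the regex engine. Raw strings (`r"..."`) remove the first layer. Here is a sketch of the `c:\file` example, plus `re.escape`, which inserts regex escapes automatically:

```python
import re

target = r"c:\file"  # raw string: the backslash is an ordinary character
print(len(target))   # 7

# In the regex, \\ matches one literal backslash; the r"" prefix stops
# Python from processing the backslashes before re sees them
found = re.search(r"\\file", target).group()
print(found)         # \file

# Without a raw string, the same regex needs four backslashes in the source
same = re.search("\\\\file", target).group()

# re.escape() inserts the escapes for you
escaped = re.escape("3.5+1")
print(escaped)       # 3\.5\+1
```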

Special Escape Sequences¶

\A - matches only at the beginning of the string. Ex: r"\AThe"

\b - matches at the beginning or end of a word. Ex: r"\bain" r"ain\b"

\B - matches where the specified characters are present but NOT at the beginning (or end) of a word. Ex: r"\Bain" r"ain\B"

\d - matches a digit (0-9; for str patterns, any Unicode decimal digit)

\D - matches any character that is NOT a digit

\s - matches a whitespace character

\S - matches any character that is NOT whitespace

\w - matches a word character (a to z, A to Z, digits 0-9, and the underscore _; for str patterns, other Unicode letters too)

\W - matches any character that is NOT a word character

\Z - matches only at the end of the string. Ex: r"Spain\Z"
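Here is a sketch of the anchors and character classes on a made-up sentence. It also shows that, for `str` patterns, `\d` is Unicode-aware unless the `re.ASCII` flag is given.

```python
import re

text = "The rain in Spain"

print(re.findall(r"ain\b", text))  # ['ain', 'ain']: "rain" and "Spain" end in "ain"
print(re.findall(r"\bain", text))  # []: no word starts with "ain"
print(re.findall(r"\Bain", text))  # ['ain', 'ain']: "ain" inside a word
print(bool(re.search(r"\AThe", text)), bool(re.search(r"Spain\Z", text)))  # True True

print(re.findall(r"\w+", "it's_ok, 42!"))  # ['it', 's_ok', '42']

# U+0663 is ARABIC-INDIC DIGIT THREE; re.ASCII restricts \d to 0-9
print(re.findall(r"\d", "Room \u0663"))
print(re.findall(r"\d", "Room \u0663", flags=re.ASCII))  # []
```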

Set¶

a set of characters inside a pair of square brackets [] with a special meaning:

[arn] one of the specified characters (a, r, or n)

[a-n] any lower case character alphabetically between a and n

[^arn] any character EXCEPT a, r, and n

[0123] any of the specified digits (0, 1, 2, or 3)

[0-9] any digit between 0 and 9

[0-5][0-9] any two-digit number from 00 to 59

[a-zA-Z] any character alphabetically between a and z, lower case OR upper case

[+] In sets, +, *, ., |, (), $, {} have no special meaning, so [+] matches a literal + character
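Here is a sketch that combines sets on made-up strings: a rough clock-time matcher, a negated set, and special characters used literally inside a set.

```python
import re

log = "Opened 09:45, closed 23:59, bogus 7:75 and 12:60."
# [0-2][0-9] = two hour digits, [0-5][0-9] = minutes 00-59
# (only a sketch: it would still accept impossible hours such as 29:00)
times = re.findall(r"\b[0-2][0-9]:[0-5][0-9]\b", log)
print(times)  # ['09:45', '23:59']

# [^...] negates a set: delete every character that is not a vowel
vowels = re.sub(r"[^aeiou]", "", "regular expression")
print(vowels)  # euaeeio

# Inside a set, + * . lose their special meaning
ops = re.findall(r"[+*.]", "1+2*3.0")
print(ops)  # ['+', '*', '.']
```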

target_string = '415-805-1888'
pattern1 = '[0-9][0-9][0-9]-[0-9][0-9][0-9]-[0-9][0-9][0-9][0-9]'
pattern2 = r'\d\d\d-\d\d\d-\d\d\d\d'  # raw strings pass backslashes through to the regex engine
pattern3 = r'\d{3}-\d{3}-\d{4}'
print(re.search(pattern1, target_string))
print(re.search(pattern2, target_string))
print(re.search(pattern3, target_string))

<re.Match object; span=(0, 12), match='415-805-1888'>
<re.Match object; span=(0, 12), match='415-805-1888'>
<re.Match object; span=(0, 12), match='415-805-1888'>

Great!

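\d{3}-\d{3}-\d{4} uses quantifiers.

Quantifiers allow you to specify how many times the preceding expression should match.

{n} is an exact quantifier: the preceding expression must match exactly n times. ? means zero or one time, and + means one or more times.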

print(re.findall('x?','xxxy'))

['x', 'x', 'x', '', '']

print(re.findall('x+','xxxy'))

['xxx']
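The empty strings in the x? output are zero-length matches: at the "y" and at the end of the string, x? matches zero x's. Here is a sketch of a few more quantifier forms on made-up strings, including `{m,n}` ranges and lazy versus greedy matching:

```python
import re

print(re.findall(r"colou?r", "color colour colouur"))  # ['color', 'colour']
print(re.findall(r"\d+", "a1b22c333"))                 # ['1', '22', '333']
print(re.findall(r"x{2,3}", "xxxxxy"))                 # ['xxx', 'xx']

# Quantifiers are greedy by default; a trailing ? makes them lazy
greedy = re.findall(r"<.+>", "<a><b>")
lazy = re.findall(r"<.+?>", "<a><b>")
print(greedy, lazy)  # ['<a><b>'] ['<a>', '<b>']
```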

Capturing groups¶

Problem: You have odd line breaks in your text.

text = 'Long-\nterm problems with short-\nterm solutions.'

print(text)

Long-
term problems with short-
term solutions.

Solution: Write a regex to find the "dash with line break" and replace it with just a dash, joining the two halves of the word.

import re

# 1st Attempt

text = 'Long-\nterm problems with short-\nterm solutions.'

re.sub(r'(\w+)-\n(\w+)', r'-', text)

'- problems with - solutions.'

Not right!

We need capturing groups!

Capturing groups let you refer back to (or extract) the text matched by each parenthesized part of the pattern.

For example, suppose you want to list all the image files in a folder. You could use a pattern such as ^(IMG\d+\.png)$ to capture and extract the full filename. If you only want the filename without the extension, you could use ^(IMG\d+)\.png$, which captures only the part before the period.
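Here is a sketch of the image-file example on a hypothetical folder listing. It uses `re.match` and the group accessors:

```python
import re

# Hypothetical folder listing
files = ["IMG001.png", "IMG42.png", "notes.txt", "IMG7.jpg"]

stems = []
for name in files:
    m = re.match(r"^(IMG\d+)\.png$", name)
    if m:
        # group(0) is the whole match; group(1) is the first parenthesized group
        print(m.group(0), "->", m.group(1))
        stems.append(m.group(1))
```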

re.sub(r'(\w+)-\n(\w+)', r'\1-\2', text)

'Long-term problems with short-term solutions.'

The parentheses around the word characters (specified by \w) means that any matching text should be captured into a group.

The '\1' and '\2' specifiers refer to the text in the first and second captured groups.

"Long" and "term" are the first and second captured groups for the first match.

"short" and "term" are the first and second captured groups for the next match.

NOTE: group numbering is 1-based; group 0 refers to the entire match.

Regex: Connection to statistical concepts¶

False positives (Type I error): Matching strings that we should not have matched

False negatives (Type II error): Not matching strings that we should have matched

Reducing the error rate for a task often involves two antagonistic efforts:

- Minimizing false positives

- Minimizing false negatives

In a perfect world, you would be able to minimize both but in reality you often have to trade one for the other.
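Here is a small sketch that counts both error types, reusing the calendar words from the motivating example. It assumes whole-word matching with `re.fullmatch`. `error_counts` is a hypothetical helper.

```python
import re

# Hand-labeled words: ones we want to match, and ones we do not
positives = ["calendar", "calandar", "celender"]
negatives = ["foo", "cal", "calli", "calaaaandar"]

def error_counts(pattern):
    """Return (false positives, false negatives) for a pattern under fullmatch."""
    fp = sum(1 for w in negatives if re.fullmatch(pattern, w))
    fn = sum(1 for w in positives if not re.fullmatch(pattern, w))
    return fp, fn

for pattern in [r"calendar", r"c[ae]l\w*", r"c[ae]l[ae]nd[ae]r"]:
    fp, fn = error_counts(pattern)
    print(f"{pattern:20} false positives={fp} false negatives={fn}")
```

The strict literal misses two misspellings (2 false negatives). The loose `c[ae]l\w*` accepts "cal", "calli" and "calaaaandar" (3 false positives). On this tiny sample, `c[ae]l[ae]nd[ae]r` makes neither error.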

III. Tokenization, segmentation, & stemming¶

By The End Of This section You Should Be Able To:¶

- Code two different ways of segmenting sentences

- Recognize there are different tokenizers

- Describe the differences among stemmers

- Explain the difference between stemming and lemmatization

4 ways to show mastery ¶

- Define the technical term in Plain English

- Calculate by hand

- Code by hand (i.e., above the API)

- Code by using the best library (i.e., below the API)

1. Plain English definition of sentence segmentation:¶

Sentence segmentation is dividing a stream of language into component sentences.

Sentences can be defined as a set of words that is complete in itself, typically containing a subject and predicate. Sentences typically are statements, questions, exclamations, or commands.

Sentence segmentation is typically done using punctuation, particularly the full stop character "." as a reasonable approximation.

However, there are complications because the full stop character is also used to end abbreviations, which may or may not also terminate a sentence.

For example: "Dr. Evil."

2. Sentence segmentation by hand¶

A Confederacy Of Dunces

By John Kennedy Toole

A green hunting cap squeezed the top of the fleshy balloon of a head. The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once. Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs. In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress.

sentence_1 = A green hunting cap squeezed the top of the fleshy balloon of a head.

sentence_2 = The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once.

sentence_3 = Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs.

sentence_4 = In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress.

3. Code by hand (i.e., above the API)¶

text = """A green hunting cap squeezed the top of the fleshy balloon of a head. The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once. Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs. In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress. """

import re

pattern = "|".join(['!',      # end with "!"
                    r'\?',    # end with "?"
                    r'\.\D',  # end with "." not followed by a number (note: this also consumes the next character)
                    r'\.\s']) # end with "." followed by a whitespace

re.split(pattern, text)

['A green hunting cap squeezed the top of the fleshy balloon of a head', 'The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once', 'Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs', 'In the shadow under the green visor of the cap Ignatius J', 'Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D', '', 'Holmes department store, studying the crowd of people for signs of bad taste in dress', '']

3. Code by hand (i.e., above the API): Version 2.0¶

pattern = r"(?<!\w\.\w.)(?<![A-Z][a-z]\.)(?<=\.|\?)\s"

re.split(pattern, text)

['A green hunting cap squeezed the top of the fleshy balloon of a head.', 'The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once.', 'Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs.', 'In the shadow under the green visor of the cap Ignatius J.', 'Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress.', '']

4. Code by using the best library (i.e., below the API)¶

from textblob import TextBlob

text = """A green hunting cap squeezed the top of the fleshy balloon of a head. The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once. Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs. In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress. """

blob = TextBlob(text)

blob.sentences

[Sentence("A green hunting cap squeezed the top of the fleshy balloon of a head."),

Sentence("The green earflaps, full of large ears and uncut hair and the fine bristles that grew in the ears themselves, stuck out on either side like turn signals indicating two directions at once."),

Sentence("Full, pursed lips protruded beneath the bushy black moustache and, at their corners, sank into little folds filled with disapproval and potato chip crumbs."),

Sentence("In the shadow under the green visor of the cap Ignatius J. Reilly’s supercilious blue and yellow eyes looked down upon the other people waiting under the clock at the D.H. Holmes department store, studying the crowd of people for signs of bad taste in dress.")]

Tokenization¶

Tokenization: breaking a stream of text up into words, phrases, symbols, or other meaningful elements called tokens.

The simplest way to tokenize is to split on whitespace:

sentence1 = 'Sky is blue and trees are green'

sentence1.split(' ')

['Sky', 'is', 'blue', 'and', 'trees', 'are', 'green']

Sometimes you might also want to deal with abbreviations, hyphenation, punctuation and other characters.

In those cases, you would want to use regex.

However, making several regex passes over every sentence can be slow if the corpus is large.

import re

sentence2 = 'This state-of-the-art technology is cool, isn\'t it?'

sentence2 = re.sub('-', ' ', sentence2) # hyphens become spaces

sentence2 = re.sub(r'[,.?]', '', sentence2) # drop commas, full stops and question marks

sentence2 = re.sub(r"n't", ' not', sentence2) # expand the contraction

sentence2_tokens = re.split(r'\s+', sentence2)

print(sentence2_tokens)

['This', 'state', 'of', 'the', 'art', 'technology', 'is', 'cool', 'is', 'not', 'it']

In this case, there are 11 tokens and the size of the vocabulary is 10 (the token 'is' appears twice).

print('Number of tokens:', len(sentence2_tokens))

print('Vocabulary size:', len(set(sentence2_tokens)))

Number of tokens: 11
Vocabulary size: 10
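As an alternative to the several re.sub passes above, the same tokens can be produced in a single pass with one compiled pattern. This is a minimal sketch that assumes "n't" is the only contraction we care about. TOKEN_RE and tokenize are illustrative names, not a standard API. Whether it is actually faster will depend on the corpus; its main effect is to avoid building intermediate strings.

```python
import re

# One regex, applied once: a word directly followed by "n't",
# the contraction "n't" itself, or any other run of word characters.
# Hyphens, commas and question marks are never matched, so they are dropped.
TOKEN_RE = re.compile(r"\w+(?=n't)|n't|\w+")

def tokenize(sentence):
    """Return word tokens, expanding the contraction "n't" to "not"."""
    return ["not" if tok == "n't" else tok for tok in TOKEN_RE.findall(sentence)]

tokens = tokenize("This state-of-the-art technology is cool, isn't it?")
print(tokens)
print('Number of tokens:', len(tokens))      # 11
print('Vocabulary size:', len(set(tokens)))  # 10
```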

Most text manipulation packages have tokenize functions¶

from nltk.tokenize import word_tokenize

text1 = "It's true that the chicken was the best bamboozler in the known multiverse."

tokens = word_tokenize(text1)

print(tokens)

['It', "'s", 'true', 'that', 'the', 'chicken', 'was', 'the', 'best', 'bamboozler', 'in', 'the', 'known', 'multiverse', '.']

import nltk

help(nltk.tokenize)

Help on package nltk.tokenize in nltk:

NAME

nltk.tokenize - NLTK Tokenizer Package

DESCRIPTION

Tokenizers divide strings into lists of substrings. For example,

tokenizers can be used to find the words and punctuation in a string:

>>> from nltk.tokenize import word_tokenize

>>> s = '''Good muffins cost $3.88\nin New York. Please buy me

... two of them.\n\nThanks.'''

>>> word_tokenize(s)

['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

This particular tokenizer requires the Punkt sentence tokenization

models to be installed. NLTK also provides a simpler,

regular-expression based tokenizer, which splits text on whitespace

and punctuation:

>>> from nltk.tokenize import wordpunct_tokenize

>>> wordpunct_tokenize(s)

['Good', 'muffins', 'cost', '$', '3', '.', '88', 'in', 'New', 'York', '.',

'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

We can also operate at the level of sentences, using the sentence

tokenizer directly as follows:

>>> from nltk.tokenize import sent_tokenize, word_tokenize

>>> sent_tokenize(s)

['Good muffins cost $3.88\nin New York.', 'Please buy me\ntwo of them.', 'Thanks.']

>>> [word_tokenize(t) for t in sent_tokenize(s)]

[['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.'],

['Please', 'buy', 'me', 'two', 'of', 'them', '.'], ['Thanks', '.']]

Caution: when tokenizing a Unicode string, make sure you are not

using an encoded version of the string (it may be necessary to

decode it first, e.g. with ``s.decode("utf8")``.

NLTK tokenizers can produce token-spans, represented as tuples of integers

having the same semantics as string slices, to support efficient comparison

of tokenizers. (These methods are implemented as generators.)

>>> from nltk.tokenize import WhitespaceTokenizer

>>> list(WhitespaceTokenizer().span_tokenize(s))

[(0, 4), (5, 12), (13, 17), (18, 23), (24, 26), (27, 30), (31, 36), (38, 44),

(45, 48), (49, 51), (52, 55), (56, 58), (59, 64), (66, 73)]

There are numerous ways to tokenize text. If you need more control over

tokenization, see the other methods provided in this package.

For further information, please see Chapter 3 of the NLTK book.

PACKAGE CONTENTS

api

casual

mwe

nist

punkt

regexp

repp

sexpr

simple

stanford

stanford_segmenter

texttiling

toktok

treebank

util

FUNCTIONS

sent_tokenize(text, language='english')

Return a sentence-tokenized copy of *text*,

using NLTK's recommended sentence tokenizer

(currently :class:`.PunktSentenceTokenizer`

for the specified language).

:param text: text to split into sentences

:param language: the model name in the Punkt corpus

word_tokenize(text, language='english', preserve_line=False)

Return a tokenized copy of *text*,

using NLTK's recommended word tokenizer

(currently an improved :class:`.TreebankWordTokenizer`

along with :class:`.PunktSentenceTokenizer`

for the specified language).

:param text: text to split into words

:type text: str

:param language: the model name in the Punkt corpus

:type language: str

:param preserve_line: An option to keep the preserve the sentence and not sentence tokenize it.

:type preserve_line: bool

DATA

improved_close_quote_regex = re.compile('([»”’])')

improved_open_quote_regex = re.compile('([«“‘„]|[`]+)')

improved_open_single_quote_regex = re.compile("(?i)(\\')(?!re|ve|ll|m|...

improved_punct_regex = re.compile('([^\\.])(\\.)([\\]\\)}>"\\\'»”’ ]*)...

FILE

/home/user01/anaconda3/envs/slides/lib/python3.7/site-packages/nltk/tokenize/__init__.py

Morphemes¶

A morpheme is the smallest unit of language that has meaning.

There are two types of morpheme:

- stems

- affixes (suffixes, prefixes, infixes, and circumfixes)

For example, in "unbelievable", "believe" is the stem.

"un" and "able" are affixes.

What we want to do in NLP preprocessing is get the stem by eliminating the affixes from a token.

Stemming¶

Stemming usually refers to a crude heuristic process that chops off the ends of words.

For example, automates, automating and automatic could all be stemmed to automat.
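To make "crude heuristic" concrete, here is a toy suffix stripper. It is a hypothetical illustration with a hand-picked suffix list and is not the Porter, Snowball or Lancaster algorithm. It reproduces the automat example and also shows how such rules misfire.

```python
# Toy suffix-stripping "stemmer" (illustration only, NOT a real stemmer).
SUFFIXES = sorted(["ing", "es", "ic", "ed", "s"], key=len, reverse=True)
MIN_STEM = 3  # never cut a word down to fewer than 3 characters

def toy_stem(word):
    for suffix in SUFFIXES:  # try longer suffixes first
        if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM:
            return word[:-len(suffix)]
    return word

print([toy_stem(w) for w in ["automates", "automating", "automatic", "cars"]])
# ['automat', 'automat', 'automat', 'car']
print(toy_stem("species"))  # 'speci': crude rules happily produce non-words
```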

from nltk import stem

porter = stem.porter.PorterStemmer()

porter.stem("cars")

'car'

porter.stem("octopi")

'octopi'

porter.stem("am")

'am'

Three stemmers are commonly used, each with slightly different rules for systematically removing or replacing affixes in tokens. In general, the Lancaster stemmer is the most aggressive (i.e. it removes the most from the ends of tokens), followed by Snowball and then Porter.

Porter Stemmer:

- The most commonly used stemmer, and the gentlest of the three

- The most computationally intensive of the three (though not by a very significant margin)

- The oldest of the three (published in 1980), though not the oldest stemming algorithm overall: the Lovins stemmer dates from 1968

Snowball Stemmer:

- Generally regarded as an improvement over the Porter stemmer (its English version is also known as "Porter2")

- Slightly faster than the Porter stemmer

Lancaster Stemmer:

- Very aggressive stemming algorithm

- With the Porter and Snowball stemmers, the stemmed forms are usually fairly intuitive to a reader

- With the Lancaster stemmer, short tokens can become completely unrecognizable after stemming

- The fastest of the three, and it shrinks the vocabulary the most

- However, if you want to preserve more distinctions between tokens, the Lancaster stemmer is not recommended

from nltk import stem

tokens = ['player', 'playa', 'playas', 'pleyaz']

# Define Porter Stemmer

porter = stem.porter.PorterStemmer()

# Define Snowball Stemmer

snowball = stem.snowball.EnglishStemmer()

# Define Lancaster Stemmer

lancaster = stem.lancaster.LancasterStemmer()

print('Porter Stemmer:', [porter.stem(i) for i in tokens])

print('Snowball Stemmer:', [snowball.stem(i) for i in tokens])

print('Lancaster Stemmer:', [lancaster.stem(i) for i in tokens])

Porter Stemmer: ['player', 'playa', 'playa', 'pleyaz']
Snowball Stemmer: ['player', 'playa', 'playa', 'pleyaz']
Lancaster Stemmer: ['play', 'play', 'playa', 'pleyaz']

Lemmatization¶

Lemmatization aims to remove inflectional endings only and to return the base or dictionary form of a word, which is known as the lemma.

It does things properly, using a vocabulary and a morphological analysis of words rather than simply chopping off endings.
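The key difference from stemming is the lookup in a vocabulary. The toy lemmatizer below is a minimal sketch of that idea. LEMMA_TABLE is a hypothetical hand-made table standing in for a real lexicon such as WordNet, and toy_lemmatize is not a library function. Because the entry depends on the part of speech, a lemmatizer that assumes nouns by default can plausibly leave a verb such as "am" unchanged.

```python
# Hypothetical lookup table standing in for a real lexicon (e.g. WordNet).
# Keys are (word, part of speech): n = noun, v = verb, a = adjective.
LEMMA_TABLE = {
    ("cars", "n"): "car",
    ("octopi", "n"): "octopus",
    ("am", "v"): "be",
    ("went", "v"): "go",
    ("better", "a"): "good",
}

def toy_lemmatize(word, pos="n"):
    """Look up the (word, part-of-speech) pair; fall back to the word itself."""
    return LEMMA_TABLE.get((word.lower(), pos), word)

print(toy_lemmatize("octopi"))       # octopus
print(toy_lemmatize("am"))           # am  (no noun entry for "am")
print(toy_lemmatize("am", pos="v"))  # be
```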

Check for understanding¶

How are stemming and lemmatization similar?

The goal of both stemming and lemmatization is to reduce inflectional forms and derivationally-related forms of a token to a common base form.

from textblob import Word

w = Word("cars")

w.lemmatize()

'car'

Word("octopi").lemmatize()

'octopus'

Word("am").lemmatize()

'am'

from textblob import Word

w = Word("litter")

w.definitions

['the offspring at one birth of a multiparous mammal', 'rubbish carelessly dropped or left about (especially in public places)', 'conveyance consisting of a chair or bed carried on two poles by bearers', 'material used to provide a bed for animals', 'strew', 'make a place messy by strewing garbage around', 'give birth to a litter of animals']

Summary¶

- Tokenization separates a sentence into words (tokens)

- You normalize or process the sentence during tokenization to obtain sensible tokens

- These normalizations include:

  - Replacing special characters such as ,.-=! with spaces, using regex

  - Lowercasing

  - Stemming, to remove the suffixes of tokens and make them more uniform

- There are three commonly used stemmers: Porter, Snowball and Lancaster

- Lancaster is the fastest and also the most aggressive stemmer; Snowball is a good balance between speed and quality of stemming

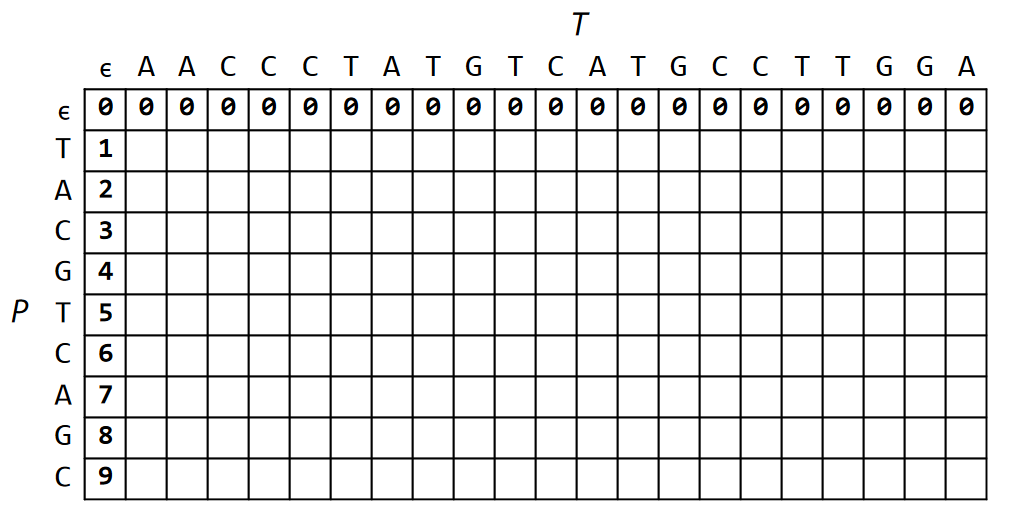

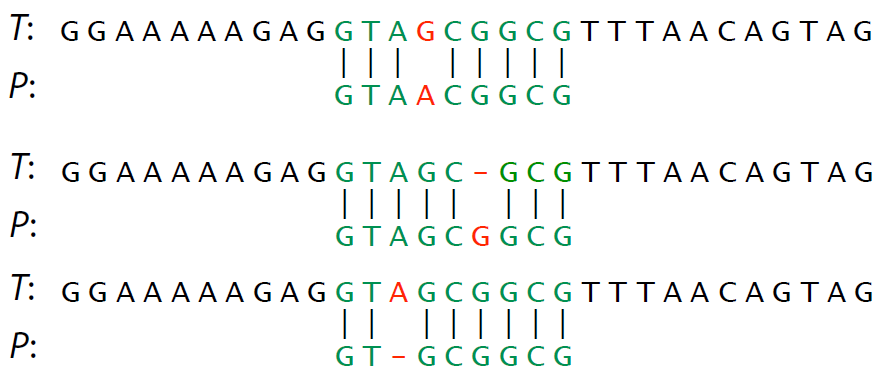

IV. Approximate Sequence Matching¶

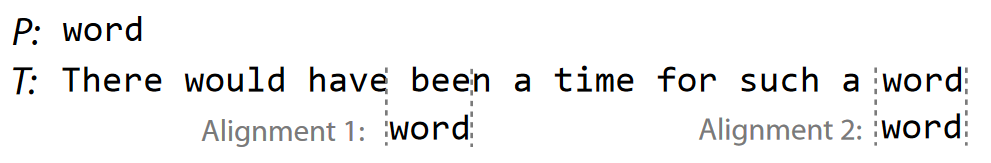

Exact Matching¶

Find the places where pattern $P$ is found within text $T$.

What Python functions do this?

This is also a very important problem, and not a trivial one for massive datasets.
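For everyday use, Python's built-in string methods and the re module already do exact matching. A short illustration follows; the text and pattern are made up for the example. One subtlety is that str.count and re.finditer report only non-overlapping matches, and a zero-width lookahead is a common trick to recover overlapping ones.

```python
import re

T = "the cat sat on the mat with the hat"
P = "the"

print(P in T)      # True: is there any occurrence?
print(T.find(P))   # 0: index of the first occurrence (-1 if none)
print(T.count(P))  # 3: number of non-overlapping occurrences
print([m.start() for m in re.finditer(re.escape(P), T)])  # [0, 15, 28]

# Non-overlapping vs. overlapping occurrences of "aa" in "aaaa"
print([m.start() for m in re.finditer("aa", "aaaa")])      # [0, 2]
print([m.start() for m in re.finditer("(?=aa)", "aaaa")])  # [0, 1, 2]
```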

Alignment: compare $P$ to the same-length substring of $T$ at some starting point.

How many calculations will this take in the most naive approach possible?
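One way to answer the question is to count. The sketch below tests every alignment and compares all characters each time, with no early exit. With $n = |T|$ and $m = |P|$ there are $n - m + 1$ alignments of $m$ comparisons each, so $(n-m+1)\,m$ comparisons in total, which is $O(nm)$. The function name is hypothetical.

```python
def naive_match(P, T):
    """Test every alignment of P against T, comparing all len(P) characters
    each time (no early exit). Returns (match offsets, character comparisons)."""
    n, m = len(T), len(P)
    occurrences, comparisons = [], 0
    for i in range(n - m + 1):      # n - m + 1 possible alignments
        mismatches = 0
        for j in range(m):          # m character comparisons each
            comparisons += 1
            if T[i + j] != P[j]:
                mismatches += 1
        if mismatches == 0:
            occurrences.append(i)
    return occurrences, comparisons

occ, comps = naive_match("word", "word to the wise, word up")
print(occ, comps)  # [0, 18] 88  (22 alignments x 4 comparisons)
```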

Improving on the Naive Exact-matching algorithm¶

Naive approach: test all possible alignments for match.

Ideas for improvement:

- stop comparing given alignment at first mismatch.

- use result of prior alignments to shorten or skip subsequent alignments.
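The first idea is easy to add to the naive scan. The sketch below stops an alignment at its first mismatch. On the same example as a full scan, this cuts the work from 88 to 29 character comparisons. The worst case, for example a pattern of "a"s in a text of "a"s, is still $O(nm)$. The function name is hypothetical.

```python
def naive_match_early_exit(P, T):
    """Naive scan that abandons an alignment at its first mismatch.
    Returns (match offsets, character comparisons)."""
    n, m = len(T), len(P)
    occurrences, comparisons = [], 0
    for i in range(n - m + 1):
        for j in range(m):
            comparisons += 1
            if T[i + j] != P[j]:
                break               # first mismatch: give up on this alignment
        else:                       # inner loop ran to completion: full match
            occurrences.append(i)
    return occurrences, comparisons

print(naive_match_early_exit("word", "word to the wise, word up"))  # ([0, 18], 29)
```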

The Boyer-Moore algorithm¶
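The full Boyer-Moore algorithm combines a "bad character" rule and a "good suffix" rule. It is not reproduced here. As a simplified sketch of the second idea above (using earlier comparisons to skip alignments), here is Boyer-Moore-Horspool, a well-known variant that keeps only a bad-character-style shift. It compares right to left, then shifts according to the text character under the last position of $P$. It assumes a non-empty pattern.

```python
def horspool_search(P, T):
    """Boyer-Moore-Horspool search (a simplified Boyer-Moore variant).
    Assumes len(P) >= 1. Returns (match offsets, character comparisons)."""
    n, m = len(T), len(P)
    # shift[c]: distance from the last occurrence of c in P[:-1] to the end of P.
    # Characters that do not occur in P[:-1] allow a full shift of m.
    shift = {P[k]: m - 1 - k for k in range(m - 1)}
    occurrences, comparisons = [], 0
    i = 0
    while i <= n - m:
        j = m - 1
        while j >= 0:               # compare right to left
            comparisons += 1
            if T[i + j] != P[j]:
                break
            j -= 1
        if j < 0:
            occurrences.append(i)
        i += shift.get(T[i + m - 1], m)  # skip alignments that cannot match
    return occurrences, comparisons

print(horspool_search("word", "word to the wise, word up"))  # ([0, 18], 12)
```

On this example, it needs 12 character comparisons. The early-exit naive scan needs 29 on the same input.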

Approximate matching: Motivational problems¶

- Matching Regular Expressions to text efficiently

- Biological sequence alignment between different species

- Matching noisy trajectories through space

- Clustering sequences into a few groups of most similar classes

- Applying k Nearest Neighbors classification to sequences

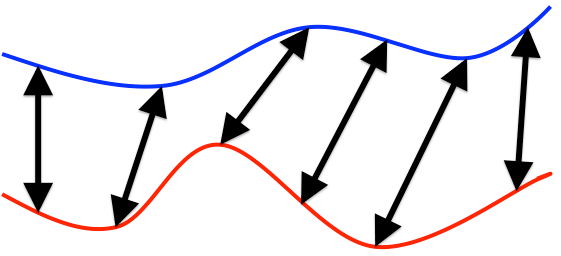

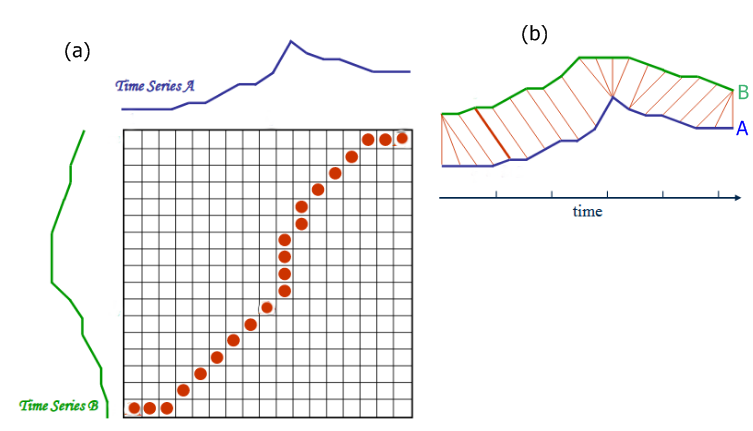

Matching time-series with varying timescales¶

How similar are these two curves, assuming we ignore varying timescales?

Example: we wish to determine the location of a hiker given altitude measurements taken during the hike.

Note that the amount of warping is often not itself the distance metric. We first "warp" the pattern, then compute the distance some other way, e.g. with a least-mean-squares (LMS) error. The final distance is the smallest LMS error possible over all acceptable warpings.

Dynamic Time Warping

II. Dynamic Programming Review¶

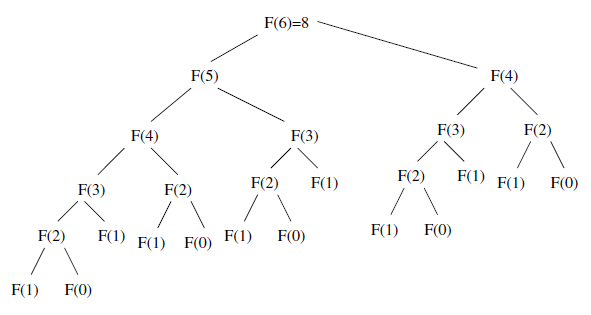

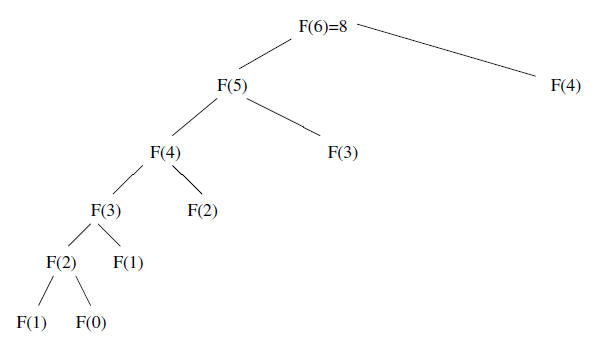

Fibonacci sequence¶

\begin{align} f(n) &= f(n-1) + f(n-2) \\ f(0) &= 0 \\ f(1) &= 1 \end{align}

Recursive calculation¶

Inefficient, because the same terms are calculated repeatedly.

def fib_recursive(n):
    if n == 0: return 0
    if n == 1: return 1
    return fib_recursive(n-1) + fib_recursive(n-2)

Each call spawns two further calls, so the running time grows exponentially with n.

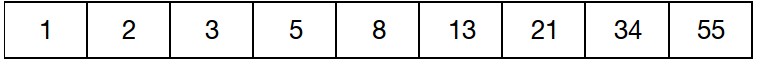
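The exponential growth can be measured directly by counting calls. In the sketch below, fib_recursive_counted is a hypothetical instrumented copy of fib_recursive. The call count satisfies $C(n) = 1 + C(n-1) + C(n-2)$ with $C(0)=C(1)=1$, which works out to $C(n) = 2f(n+1) - 1$. The number of calls therefore grows like the Fibonacci numbers themselves.

```python
def fib_recursive_counted(n, counter):
    """Same recursion as fib_recursive, but tallies every call in counter[0]."""
    counter[0] += 1
    if n == 0: return 0
    if n == 1: return 1
    return fib_recursive_counted(n-1, counter) + fib_recursive_counted(n-2, counter)

for n in (10, 20, 25):
    counter = [0]
    value = fib_recursive_counted(n, counter)
    print(n, value, counter[0])
# 10 55 177
# 20 6765 21891
# 25 75025 242785
```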

DP calculation¶

Intelligently plan which terms to calculate and store ("cache"), e.g. in a table.

Each new term then requires only one addition of two already-stored terms.

DP Caching¶

Always plan out the data structure and the calculation order.

- need to make sure you have sufficient space

- need to choose optimal strategy to fill in

The data structure to fill in for Fibonacci is trivial:

Optimal order is to start at bottom and work up to $n$, so always have what you need for next term.

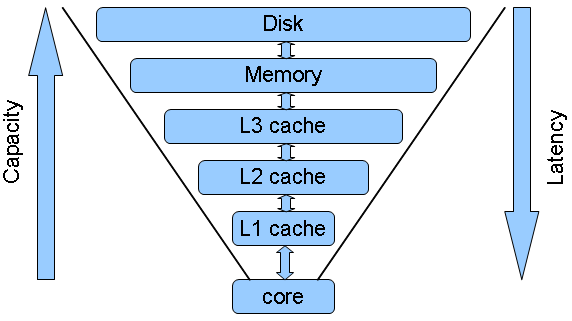

What are caches?¶

Caches are storage for information to be used in the near future in a more accessible form.

The difference between dynamic programming and recursion¶

1) Direction

Recursion: Starts at the end/largest and divides into subproblems.

DP: Starts at the smallest and builds up a solution.

2) Amount of computation:

During recursion, the same sub-problems are solved multiple times.

DP is basically a memoization technique: it uses a table to store the results of sub-problems so that, if the same sub-problem is encountered again, it can return the stored result directly instead of re-calculating it.

DP = apply common sense to do recursive problems more efficiently.

def fib_recursive(n):
    if n == 0: return 0
    if n == 1: return 1
    return fib_recursive(n-1) + fib_recursive(n-2)

def fib_dp(n):
    fib_seq = [0, 1]  # table of already-computed terms
    for i in range(2, n+1):
        fib_seq.append(fib_seq[i-1] + fib_seq[i-2])
    return fib_seq[n]

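Let's compare the runtimes. (Note that the DP version is timed on the 100th term, while the recursive version only computes the 30th.)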

%timeit -n4 fib_recursive(30)

417 ms ± 2.83 ms per loop (mean ± std. dev. of 7 runs, 4 loops each)

%timeit -n4 fib_dp(100)

26.2 µs ± 704 ns per loop (mean ± std. dev. of 7 runs, 4 loops each)

Important Point¶

The hardest parts of dynamic programming are:

- Recognizing when to use it

- Picking the right data structure for caching

Generator approach¶

def fib():
    a, b = 0, 1
    while True:
        a, b = b, a+b
        yield a

f = fib()
for i in range(10):
    print(next(f))

1 1 2 3 5 8 13 21 34 55

from itertools import islice

help(islice)

Help on class islice in module itertools:

class islice(builtins.object)
 | islice(iterable, stop) --> islice object
 | islice(iterable, start, stop[, step]) --> islice object
 |
 | Return an iterator whose next() method returns selected values from an
 | iterable. If start is specified, will skip all preceding elements;
 | otherwise, start defaults to zero. Step defaults to one. If
 | specified as another value, step determines how many values are
 | skipped between successive calls. Works like a slice() on a list
 | but returns an iterator.
 |
 | Methods defined here:
 |
 | __getattribute__(self, name, /)
 |     Return getattr(self, name).
 |
 | __iter__(self, /)
 |     Implement iter(self).
 |
 | __next__(self, /)
 |     Implement next(self).
 |
 | __reduce__(...)
 |     Return state information for pickling.
 |
 | __setstate__(...)
 |     Set state information for unpickling.
 |
 | ----------------------------------------------------------------------
 | Static methods defined here:
 |
 | __new__(*args, **kwargs) from builtins.type
 |     Create and return a new object. See help(type) for accurate signature.

n = 100

next(islice(fib(), n-1, n))

354224848179261915075

%timeit -n4 next(islice(fib(), n-1, n))

The slowest run took 9.46 times longer than the fastest. This could mean that an intermediate result is being cached. 21.7 µs ± 28.4 µs per loop (mean ± std. dev. of 7 runs, 4 loops each)

Memoization¶

A technique used in computing to speed up programs by remembering (caching) the results of calculations already performed, such as the results of function calls.

If a function is called again with the same inputs, the previously stored result can be reused, avoiding unnecessary calculations.

In many cases a simple array is used for storing the results, but lots of other structures can be used as well, such as associative arrays, called hashes in Perl or dictionaries in Python.

Source: http://www.amazon.com/Algorithm-Design-Manual-Steve-Skiena/dp/0387948600

def fib_recursive(n):
    if n == 0: return 0
    if n == 1: return 1
    return fib_recursive(n-1) + fib_recursive(n-2)

def fib_dp(n):
    cache = [0, 1]
    for i in range(2, n+1):
        cache.append(cache[i-1] + cache[i-2])
    return cache[n]

def memoize(f):
    memo = {}  # maps argument -> previously computed result
    def helper(x):
        if x not in memo:
            memo[x] = f(x)
        return memo[x]
    return helper

fib_recursive_memoized = memoize(fib_recursive)

fib_recursive_memoized(9)

34

%timeit fib_recursive(9)

17.1 µs ± 74.1 ns per loop (mean ± std. dev. of 7 runs, 100000 loops each)

%timeit fib_recursive_memoized(9)

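179 ns ± 1.44 ns per loop (mean ± std. dev. of 7 runs, 10000000 loops each)

Note that memoize(fib_recursive) caches only the outermost call: the recursive calls inside fib_recursive still go to the original, unmemoized function. The first call to fib_recursive_memoized(9) is therefore no faster than fib_recursive(9); the timing above is fast because %timeit repeatedly asks for the already-cached value for 9.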
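For memoization to help inside the recursion itself, the recursive calls must go through the cache. A minimal sketch is shown below. The function and counter names are hypothetical, and it reuses the memoize pattern above. Decorating the definition rebinds the name, so fib_memo's recursive calls hit the memo. Wrapping an already-defined function caches only the top-level call.

```python
def memoize(f):
    memo = {}
    def helper(x):
        if x not in memo:
            memo[x] = f(x)
        return memo[x]
    return helper

calls = {"outer_only": 0, "full": 0}

def fib_plain(n):
    calls["outer_only"] += 1
    if n < 2: return n
    return fib_plain(n-1) + fib_plain(n-2)  # recursion bypasses any cache

@memoize
def fib_memo(n):
    calls["full"] += 1
    if n < 2: return n
    return fib_memo(n-1) + fib_memo(n-2)    # the name now refers to the memoized wrapper

fib_outer_only = memoize(fib_plain)         # same pattern as fib_recursive_memoized

print(fib_outer_only(20), calls["outer_only"])  # 6765 21891
print(fib_memo(20), calls["full"])              # 6765 21
```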

Memoization in Python (LRU cache)¶

LRU = Least Recently Used

from functools import lru_cache

@lru_cache()
def fib_recursive(n):
    "Calculate nth Fibonacci number using recursion"
    if n == 0: return 0
    if n == 1: return 1
    return fib_recursive(n-1) + fib_recursive(n-2)

%timeit fib_recursive(n)

119 ns ± 0.435 ns per loop (mean ± std. dev. of 7 runs, 10000000 loops each)
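functools.lru_cache keeps at most maxsize results (128 by default). When full, it evicts the least recently used entry. The decorated function also gains cache_info() and cache_clear(). In the sketch below, the name fib_lru is illustrative, and it uses maxsize=None for an unbounded cache. Computing $f(30)$ from an empty cache then takes 31 misses, one for each of $f(0), \dots, f(30)$, and the second recursive call in each frame is a hit.

```python
from functools import lru_cache

@lru_cache(maxsize=None)  # unbounded; plain @lru_cache() would keep at most 128 entries
def fib_lru(n):
    if n < 2: return n
    return fib_lru(n-1) + fib_lru(n-2)

print(fib_lru(30))           # 832040
info = fib_lru.cache_info()
print(info)                  # CacheInfo(hits=28, misses=31, maxsize=None, currsize=31)
fib_lru.cache_clear()        # empty the cache, e.g. before timing again
```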

Memoization in Python (joblib)¶

Exercise: Change making problem.¶

The objective is to determine the smallest number of coins (or notes) of the given denominations required to make change for a given amount.

For example, if the denominations are \$1 and \$2 and we need to make change for \$3, we would use \$1 + \$2, i.e. 2 pieces of currency.

However, if the amount were \$4, we could use \$1+\$1+\$1+\$1, \$1+\$1+\$2, or \$2+\$2, and the minimum number of pieces would be 2 (\$2+\$2).

Solution: dynamic programming (DP).¶

The minimum number of coins required to make change for \$P is the minimum number of coins required to make change for the amount \$P-x, plus 1, where x is the denomination of the last coin used (+1 because we need that one extra coin to get from \$P-x to \$P). We choose the x that gives the smallest total.

This can be expressed mathematically as follows.

Let us assume that we have $n$ currencies of distinct denominations, where the denomination of currency $i$ is $v_i$. We can sort the currencies by denomination so that $v_1<v_2<v_3<\dots<v_n$.

Let $C(p)$ denote the minimum number of pieces of currency required to make change for an amount $p$.

Using the principle of recursion, $C(p)=\min_{i:\,v_i \le p} C(p-v_i)+1$, with $C(0)=0$.

For example, assume we want to make 5, and $v_1=1, v_2=2, v_3=3$.

Therefore $C(5) = \min(C(5-1)+1,\ C(5-2)+1,\ C(5-3)+1) = \min(C(4)+1,\ C(3)+1,\ C(2)+1)$
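The recursion can be evaluated bottom-up by filling a table $C[0..p]$ from small amounts to large ones, so each $C(p - v_i)$ is already available when it is needed. This is a minimal sketch. The function name min_coins and the choice to return None for unreachable amounts are illustrative assumptions. The last example shows why DP is needed: a greedy "largest coin first" strategy would use 4+1+1.

```python
def min_coins(amount, denominations):
    """Bottom-up DP for the change-making problem.
    C[p] = minimum number of pieces needed to make p (infinity if impossible)."""
    INF = float("inf")
    C = [0] + [INF] * amount                # C(0) = 0
    for p in range(1, amount + 1):          # fill the table from small to large
        for v in denominations:
            if v <= p and C[p - v] + 1 < C[p]:
                C[p] = C[p - v] + 1         # C(p) = min_i C(p - v_i) + 1
    return None if C[amount] == INF else C[amount]

print(min_coins(3, [1, 2]))     # 2
print(min_coins(4, [1, 2]))     # 2
print(min_coins(5, [1, 2, 3]))  # 2
print(min_coins(6, [1, 3, 4]))  # 2  (3+3)
```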

Exercise: Compute a polynomial over a grid of points¶

How can we use redundancy here?

DP for RE matching¶

https://sites.google.com/site/jennyshelloworld/company-blog/regular-expression-matching---dp

Things get tricky when using wildcards ".", "?", "*"
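As a sketch of the table-filling idea, the code below restricts the wildcards to "." (any single character) and "*" (zero or more copies of the preceding element). It also requires the pattern to match the whole string. "?" is not handled. The function name is hypothetical, and this is not how Python's re module works internally. Cell M[i][j] records whether the first i characters of s are matched by the first j characters of p.

```python
def dp_regex_match(s, p):
    """Does pattern p (supporting only '.' and '*') match ALL of string s?
    Assumes every '*' follows a character or '.'."""
    m, n = len(s), len(p)
    M = [[False] * (n + 1) for _ in range(m + 1)]
    M[0][0] = True                          # empty pattern matches empty string
    for j in range(2, n + 1):               # "x*" may match the empty string
        if p[j - 1] == '*':
            M[0][j] = M[0][j - 2]
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            if p[j - 1] == '*' and j >= 2:
                prev_ok = p[j - 2] in ('.', s[i - 1])  # can "x*" absorb s[i-1]?
                # zero copies of "x", or one more copy on top of a match for s[:i-1]
                M[i][j] = M[i][j - 2] or (prev_ok and M[i - 1][j])
            else:
                M[i][j] = M[i - 1][j - 1] and p[j - 1] in ('.', s[i - 1])
    return M[m][n]

print(dp_regex_match("aab", "c*a*b"))             # True
print(dp_regex_match("mississippi", "mis*is*p*."))  # False
```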

Mini-summary¶

In a recursive approach, your function recursively calls itself on smaller subproblems as needed, until the calculation is done. Potentially performing many redundant calculations naively.

With memoization, you form a cache of these recursive calls, and check if the same one is called again before recalculating. Still potential danger since do not plan memory needs or usage.

With Dynamic Programming, you generally follow some plan to produce the cache of results for smaller subproblems.

III. Edit Distance¶

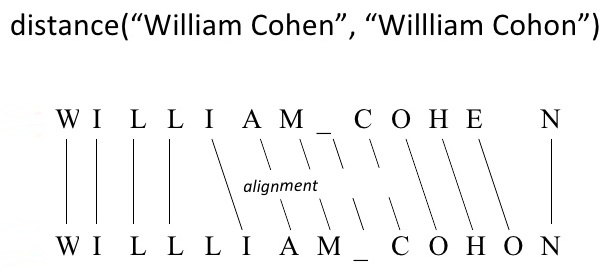

Edit Distance between two strings¶

Minimum number of operations needed to convert one string into other

Ex. typo correction: how do we decide what "scool" was supposed to be?

Consider possibilities with lowest edit distance. "school" or "cool".

Hamming distance - operations consist only of substitutions of character (i.e. count differences)

Levenshtein distance - operations are the removal, insertion, or substitution of a character

"Fuzzy String Matching"

def hammingDistance(x, y):
    ''' Return Hamming distance between x and y '''
    assert len(x) == len(y)
    nmm = 0  # number of mismatches
    for i in range(0, len(x)):
        if x[i] != y[i]:
            nmm += 1
    return nmm

hammingDistance('brown', 'blown')

1

hammingDistance('cringe', 'orange')

2

Levenshtein distance (between strings $P$ and $T$)¶

Special case where the operations are the insertion, deletion, or substitution of a character

- Insertion – Insert a single character into pattern $P$ to help it match text $T$ , such as changing “ago” to “agog.”

- Deletion – Delete a single character from pattern $P$ to help it match text $T$ , such as changing “hour” to “our.”

- Substitution – Replace a single character from pattern $P$ with a different character in text $T$ , such as changing “shot” to “spot.”

Count the minimum number of such operations needed to convert $P$ into $T$.

The terms Levenshtein distance and "edit distance" are often used interchangeably.

Exercise: What are the Hamming and Edit distances?¶

\begin{align} T: \text{"The quick brown fox"} \\ P: \text{"The quick grown fox"} \end{align}

Comprehension check: give three different ways to transform $P$ into $T$ (not necessarily fewest operations)

Edit distance - Divide and conquer¶

How do we use simpler comparisons to perform more complex ones?

Use the results for shorter substrings (prefixes) to compute the results for longer ones.

Start from the first character of each string and build up.

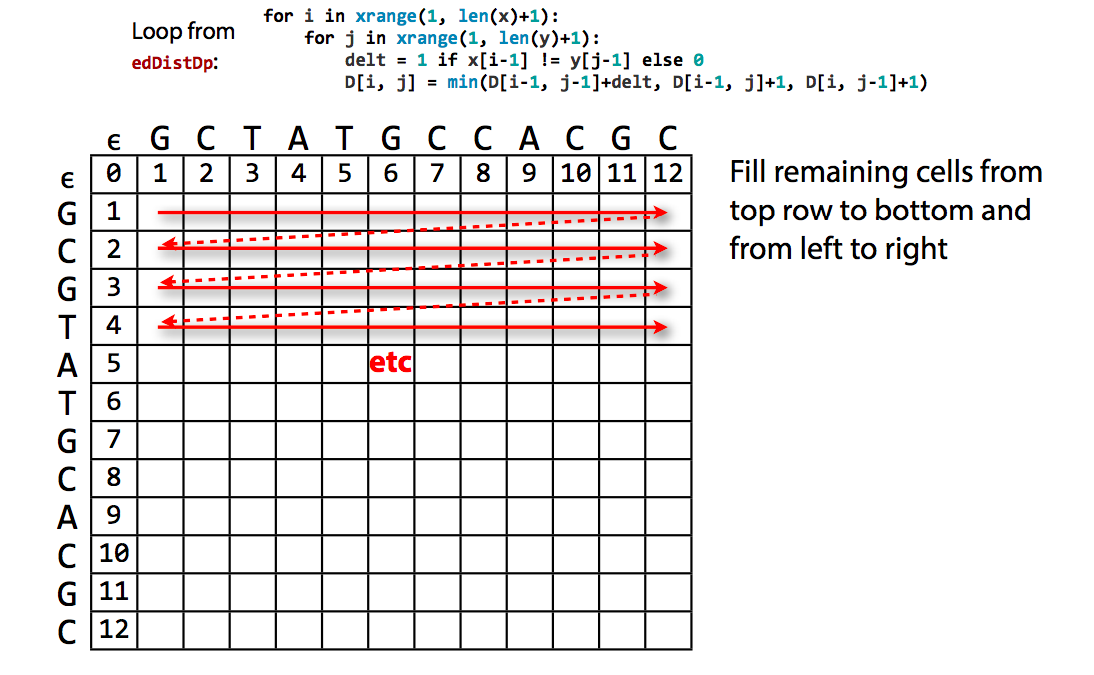

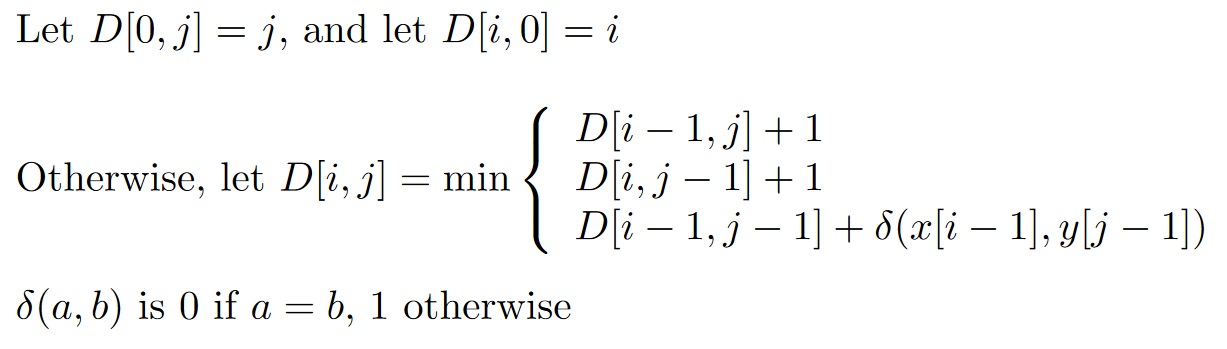

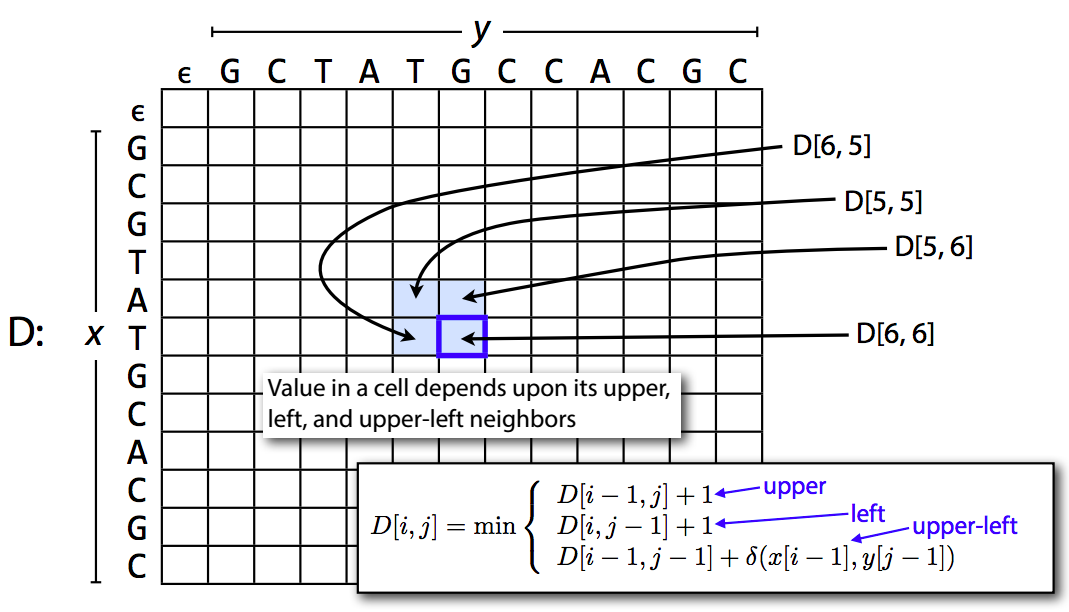

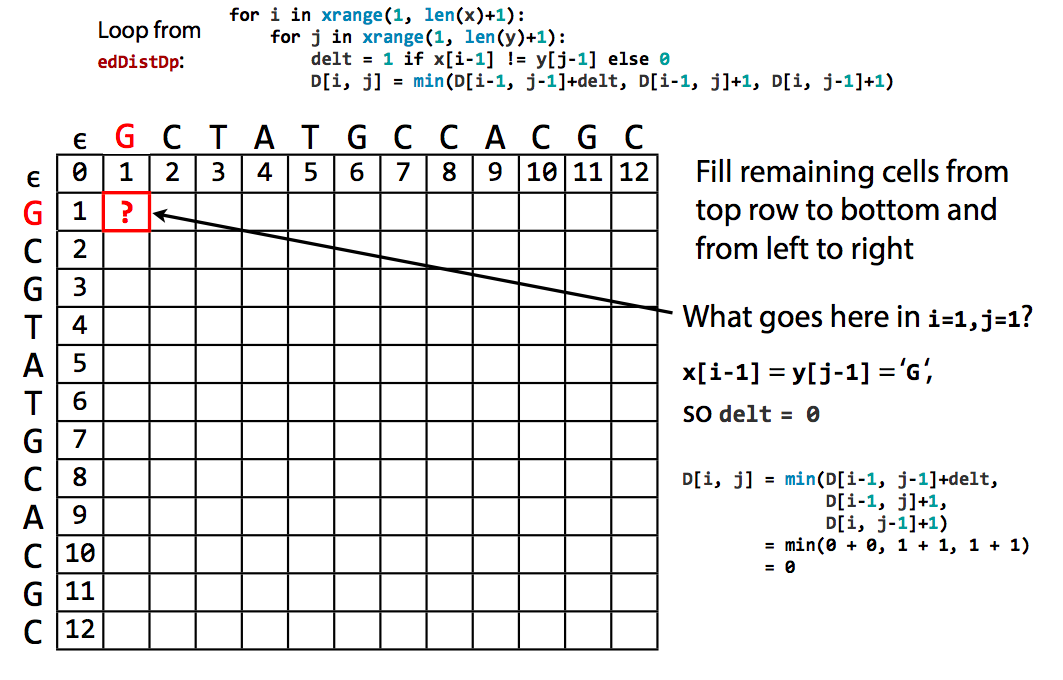

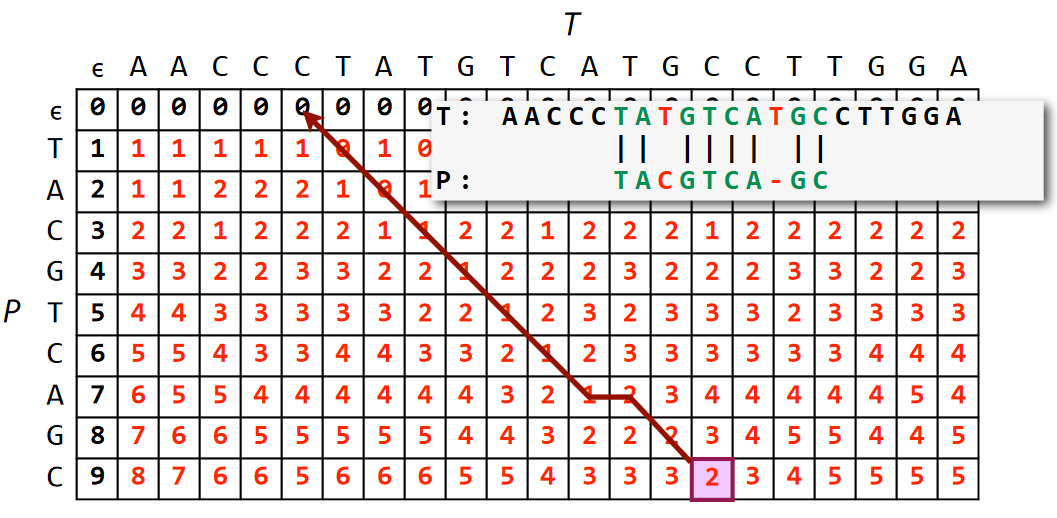

The DP matrix¶

Initialization¶

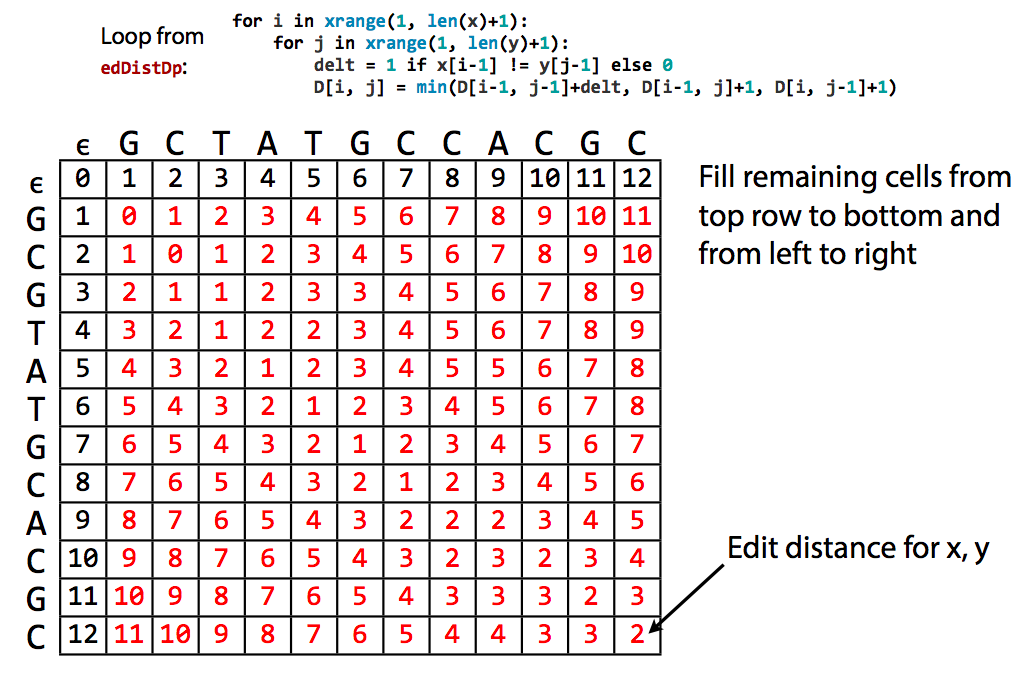

Computation¶

Final edit distance is in the lower-right corner¶

This entry corresponds to the comparison of both strings in their entirety.

So the net result tells us that the minimum number of operations is 2. But what are they?

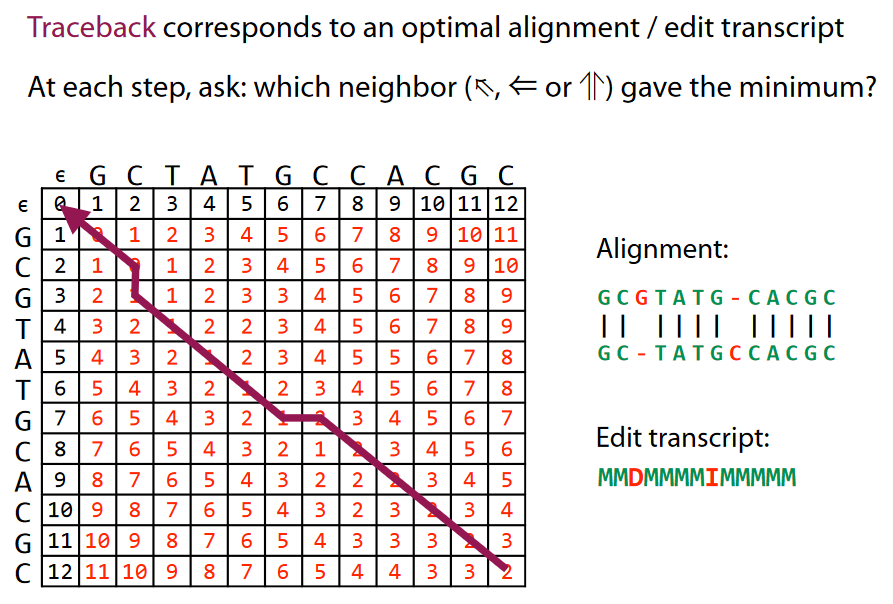

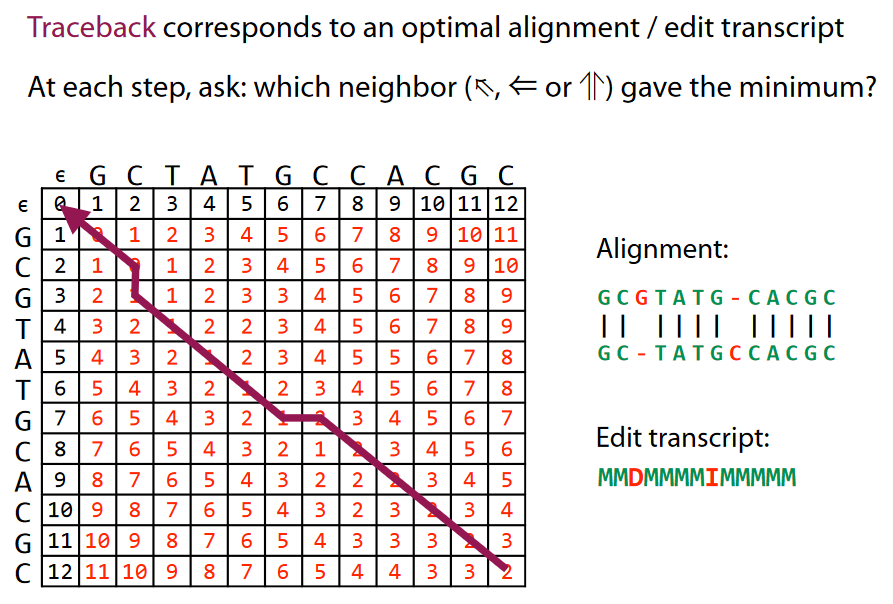

Traceback: the minimum "edit"¶

- Diagonal = Match (M) if the distance stays constant along the diagonal, or Substitution (S) if it increases

- Vertical = Deletion (D)

- Horizontal = Insertion (I)


see http://www.cs.jhu.edu/~langmea/resources/lecture_notes/dp_and_edit_dist.pdf for more details

Note: whether a step counts as (I) or (D) depends on which of the two strings you are editing to become the other.

Note II: there is an error in the traceback line in the picture; can you find it?

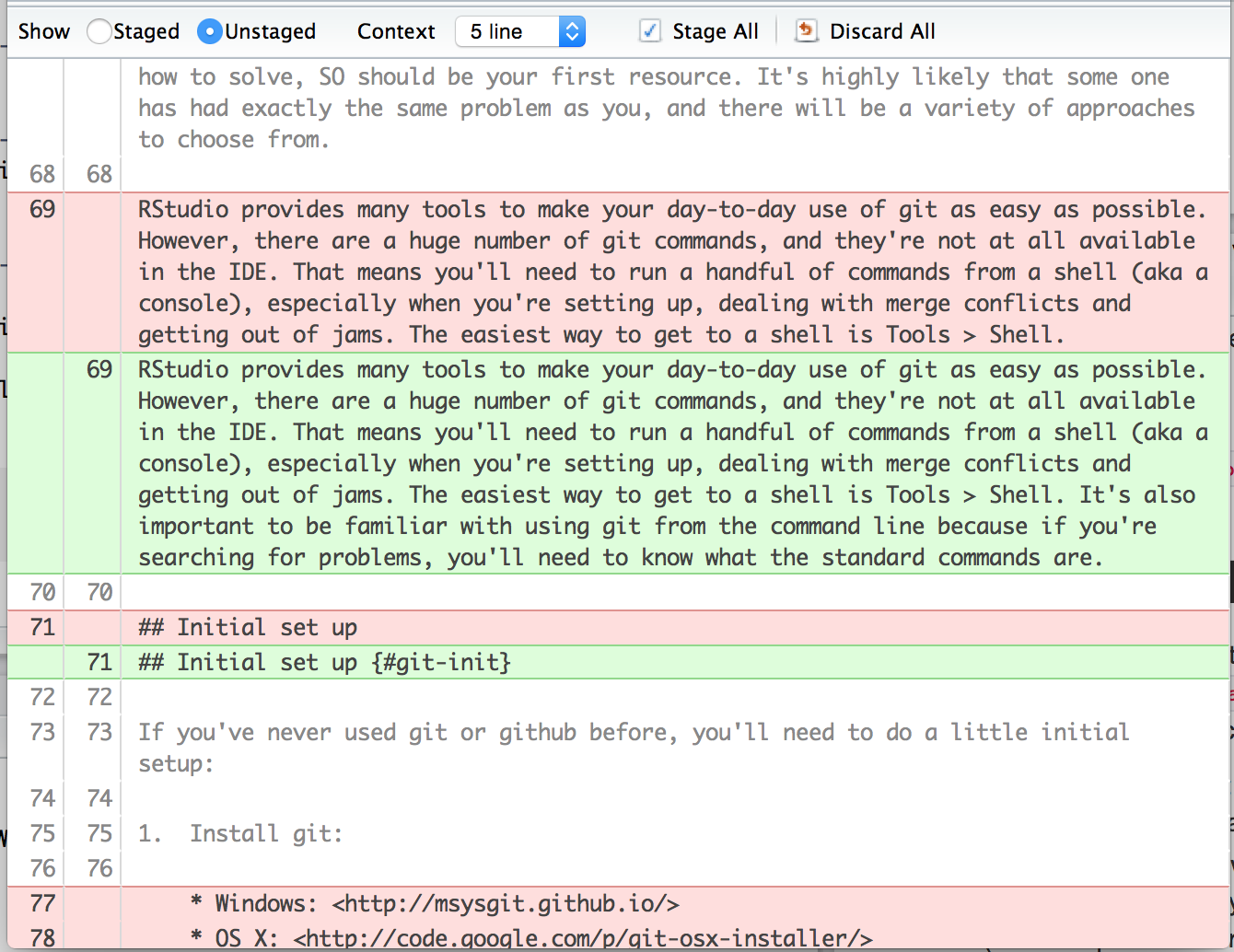
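The traceback rules above can be written out as code. The sketch below fills the same matrix as the edit-distance DP, then walks back from the lower-right corner. At each cell, it follows a move that could have produced the cell's value: diagonal gives M or S, vertical gives D, and horizontal gives I. Rows index the string x being edited into y. The function name is hypothetical. When several moves tie, this version prefers the diagonal, so it returns one minimal transcript among possibly several.

```python
def edit_transcript(x, y):
    """Edit distance between x and y, plus one minimal transcript turning x
    into y: M = match, S = substitution, D = deletion, I = insertion."""
    n, m = len(x), len(y)
    D = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        D[i][0] = i
    for j in range(m + 1):
        D[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            delt = 1 if x[i - 1] != y[j - 1] else 0
            D[i][j] = min(D[i - 1][j - 1] + delt, D[i - 1][j] + 1, D[i][j - 1] + 1)
    # Traceback from the lower-right corner
    ops, i, j = [], n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and D[i][j] == D[i - 1][j - 1] + (x[i - 1] != y[j - 1]):
            ops.append('M' if x[i - 1] == y[j - 1] else 'S')  # diagonal
            i, j = i - 1, j - 1
        elif i > 0 and D[i][j] == D[i - 1][j] + 1:
            ops.append('D')                                    # vertical
            i -= 1
        else:
            ops.append('I')                                    # horizontal
            j -= 1
    return D[n][m], ''.join(reversed(ops))

print(edit_transcript('Shakespeare', 'shake spear'))
```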

Traceback is a form of diff¶

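Storing just the diffs reduces the amount of storage needed.

For example, git.

For approximate matching of $P$ within a longer text $T$, initialize the first row of the DP matrix to zeros (so a match may start anywhere in $T$) and seek the lowest value on the bottom row; its column marks the endpoint of the best-matching substring in $T$.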
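A minimal sketch of that approximate substring search follows; the function name is hypothetical. The only change from the edit-distance DP is that the first row is all zeros rather than $0, 1, 2, \dots$. Each returned end offset j means the best match ends just before t[j].

```python
def approx_substring_match(p, t):
    """Best approximate occurrence of pattern p anywhere in text t.
    Returns (lowest edit distance, list of end offsets in t achieving it)."""
    D = [[0] * (len(t) + 1) for _ in range(len(p) + 1)]
    for i in range(1, len(p) + 1):
        D[i][0] = i          # first column as in ordinary edit distance...
    # ...but the first row stays all zeros: a match may start anywhere in t
    for i in range(1, len(p) + 1):
        for j in range(1, len(t) + 1):
            delt = 1 if p[i - 1] != t[j - 1] else 0
            D[i][j] = min(D[i - 1][j - 1] + delt, D[i - 1][j] + 1, D[i][j - 1] + 1)
    bottom = D[len(p)]
    best = min(bottom)
    return best, [j for j, v in enumerate(bottom) if v == best]

print(approx_substring_match("needle", "a haystack with a neddle in it"))
```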

def edDistRecursive(x, y):
    if len(x) == 0: return len(y)
    if len(y) == 0: return len(x)
    delt = 1 if x[-1] != y[-1] else 0
    vert = edDistRecursive(x[:-1], y) + 1
    horz = edDistRecursive(x, y[:-1]) + 1
    diag = edDistRecursive(x[:-1], y[:-1]) + delt
    return min(diag, vert, horz)

edDistRecursive('Shakespeare', 'shake spear') # this takes a while!

3

Memoized version¶

def edDistRecursiveMemo(x, y, memo=None):
    ''' A version of edDistRecursive with memoization. For each x, y we see, we
        record result from edDistRecursiveMemo(x, y). In the future, we retrieve
        recorded result rather than re-run the function. '''
    if memo is None: memo = {}
    if len(x) == 0: return len(y)
    if len(y) == 0: return len(x)
    if (len(x), len(y)) in memo:
        return memo[(len(x), len(y))]
    delt = 1 if x[-1] != y[-1] else 0
    diag = edDistRecursiveMemo(x[:-1], y[:-1], memo) + delt
    vert = edDistRecursiveMemo(x[:-1], y, memo) + 1
    horz = edDistRecursiveMemo(x, y[:-1], memo) + 1
    ans = min(diag, vert, horz)
    memo[(len(x), len(y))] = ans
    return ans

edDistRecursiveMemo('Shakespeare', 'shake spear') # this is very fast

3

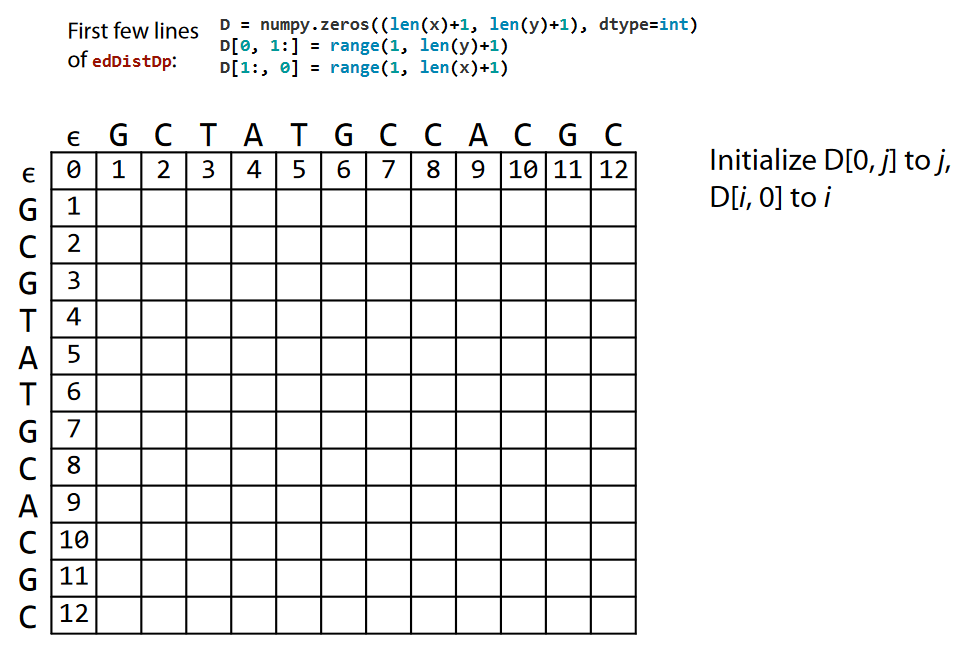

DP version¶

from numpy import zeros

def edDistDp(x, y):
    """ Calculate edit distance between sequences x and y using
        matrix dynamic programming. Return distance. """
    D = zeros((len(x)+1, len(y)+1), dtype=int)
    D[0, 1:] = range(1, len(y)+1)
    D[1:, 0] = range(1, len(x)+1)
    for i in range(1, len(x)+1):
        for j in range(1, len(y)+1):
            delt = 1 if x[i-1] != y[j-1] else 0
            D[i, j] = min(D[i-1, j-1]+delt, D[i-1, j]+1, D[i, j-1]+1)
    return D[len(x), len(y)]

edDistDp('Shakespeare', 'shake spear')

3

NLTK version¶

from nltk.metrics.distance import edit_distance

edit_distance('intention', 'execution')

5

edDistDp('intention', 'execution')

5

Levenshtein Demo¶

Check for Understanding¶

What are the givens for DP?

- A map

- A goal

What is the output of DP?

Best "path" from any "location" - meaning what?

How is the value calculated in DP?

It is computed from:

- The best (min/max) of the previously computed values

- The value (cost) of the current step

How does DP generate the best "path"?

Start at the goal and calculate the value function for every step all the way back to the start.

Mini-Summary (take 2?)¶

- Dynamic programming is an improved version of recursion

- Dynamic programming uses caches to save compute, storing the results of previous calculations in a systematic way for later use

- Backtrace is storing the diff for later use.

Further Improvement/Optimization¶

Many more specialized algorithms exist in the fields where DP is used.

DP is a category of methods, not a single method.

Can you think of ways to perform these tasks faster?

Plan B. Be even more clever about intermediate calculations, e.g. compute the table in an order that is more likely to find the optimum sooner (before filling the entire table).

Plan A. "good" approximations (hopefully). E.g. computing a match based on only short substrings, not entire string.

IV. Dynamic Time Warping¶

Matching time series: Dynamic Time Warping¶

Generally similar to the edit distance algorithm, except that every cell adds the distance $d()$ between the two values being compared, rather than a fixed cost of 0 or 1.

import numpy as np

# define whatever distance metric you want
def d(x, y):
    return abs(x - y)

# the DP DTW distance algorithm
def DTWDistance(A, B):
    n = len(A)
    m = len(B)
    # (n+1) x (m+1) table; row 0 and column 0 are boundary cells, so that
    # the first elements A[0] and B[0] are compared too
    DTW = np.full((n+1, m+1), np.inf)
    DTW[0, 0] = 0
    for i in range(1, n+1):
        for j in range(1, m+1):
            cost = d(A[i-1], B[j-1])
            DTW[i, j] = cost + min(DTW[i-1, j],    # insertion
                                   DTW[i, j-1],    # deletion
                                   DTW[i-1, j-1])  # match
    print(DTW)
    return DTW[n, m]

DTWDistance([1,2,3],[1,3,3,3,3])

[[ 0. inf inf inf inf inf] [inf 0. 2. 4. 6. 8.] [inf 1. 1. 2. 3. 4.] [inf 3. 1. 1. 1. 1.]]

1.0

V. Summary¶

Pre-filtering, Pruning, etc.¶

When performing a slow search algorithm over a large dataset, start with a fast algorithm, even one with a poor false-positive rate (FPR), to reject obvious mismatches.

Ex. BLAST (sequence alignment) in bioinformatics, followed by slow accurate structure alignment technique.

General result for optimal ordering of filters based on efficiency and speed: K. Dillon and Y.-P. Wang, “On efficient meta-filtering of big data,” in Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th
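As a small illustration of such a cascade, the sketch below searches a word list for near matches to a query. It uses a cheap length test as the first filter. The test is valid because $|\,|x| - |y|\,|$ is a lower bound on the edit distance. The filter therefore never rejects a true match, but it lets many non-matches through to the expensive DP stage. The word list and function names are made up for the example.

```python
def edit_distance(x, y):
    """Levenshtein distance (same recurrence as edDistDp), keeping two rows."""
    prev = list(range(len(y) + 1))
    for i in range(1, len(x) + 1):
        cur = [i] + [0] * len(y)
        for j in range(1, len(y) + 1):
            delt = 1 if x[i - 1] != y[j - 1] else 0
            cur[j] = min(prev[j - 1] + delt, prev[j] + 1, cur[j - 1] + 1)
        prev = cur
    return prev[-1]

def filtered_search(query, candidates, k):
    """Return candidates within edit distance k of query, plus how many
    times the slow DP actually ran."""
    matches, dp_runs = [], 0
    for word in candidates:
        if abs(len(word) - len(query)) > k:
            continue                 # rejected by the fast filter
        dp_runs += 1                 # survivors go to the slow, exact stage
        if edit_distance(query, word) <= k:
            matches.append(word)
    return matches, dp_runs

words = ["school", "cool", "scowl", "schooling", "pool", "s"]
print(filtered_search("scool", words, k=1))  # (['school', 'cool', 'scowl'], 4)
```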

Hints¶

The sooner you start to code, the longer the program will take.

– Roy Carlson, University of Wisconsin

- You will write 2 lines of code that will be ~140 characters. Like most computer science challenges, it will require more thinking than writing.

If you can’t write it down in English, you can’t code it. – Peter Halpern

- Practice the algorithm on your white desk table several times before trying to code.