Unstructured Data & Natural Language Processing

Keith Dillon

Spring 2020

Topic 3: Machine Learning in Natural Language Processing

This topic:¶

- Simple Machine Learning Problems

- Information retrieval

- Low-level parsing

- High-level semantics

- Data sources

- Project 1: NLP with Sklearn

Reading:¶

- https://github.com/Kyubyong/nlp_tasks

- RN: Russell & Norvig, "Artificial Intelligence: A Modern Approach, 3e" (Chapters 22, 23).

- JM: Jurafsky & Martin, "Speech and Language Processing, 3e draft". https://web.stanford.edu/~jurafsky/slp3/

Overview - "degrees of artificial Intelligence"¶

- Information retrieval - ranges from simple to very sophisticated methods, but is fundamentally still about finding strings

- Simple Machine Learning Problems - can be performed by an "illiterate" algorithm that matches the presence of strings

- Low-level parsing - modeling/using relationships between words within text, e.g. "adjective noun verbed"

- High-level semantics - underlying meaning or point, topic of essay

I. Info Retrieval¶

Search Engines¶

Information Retrieval Framework¶

- A corpus of documents

- paragraphs

- pages

- multipage texts

- Queries posed in a query language which specifies what the user wants to know

- just a list of words, such as [AI book];

- a phrase of words that must be adjacent, as in [“AI book”];

- can contain Boolean operators, as in [AI AND book];

- can include non-Boolean operators such as [AI NEAR book] or [AI book site:www.aaai.org].

- A result set: the subset of documents that the IR system judges to be relevant to the query.

- relevant = likely to be of use to the person who posed the query, for the particular information need expressed in the query.

- A presentation of the result set.

- can be as simple as a ranked list of document titles

- or as complex as a rotating color map of the result set projected onto a three-dimensional space, rendered as a two-dimensional display.

Scoring Function¶

Choose document to Maximize $score(query,document)$

- Okapi BM25 ("Best Matching") - model documents based on set of words in them

- net score of a document is a weighted linear combination of the scores for each word in the query.

- depends on rarity of word, length of document, number of times word is repeated in document

- e.g. query = "the president" - don't allow result to be dominated by documents with many repeats of "the".
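The three ingredients listed above (word rarity, document length, repeat count) can be made concrete with a minimal sketch of a BM25-style score. The parameter values `k=2.0` and `b=0.75`, the non-negative IDF variant, and the toy documents are assumptions chosen for illustration, not values taken from these slides.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k=2.0, b=0.75):
    """Score each tokenized document in `docs` against the tokenized `query`."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents that contain each word.
    df = Counter(word for d in docs for word in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for q in query:
            # Rare words get a large IDF weight, common words a small one.
            idf = math.log((n_docs - df[q] + 0.5) / (df[q] + 0.5) + 1.0)
            # Repeats saturate (controlled by k); long documents are penalized (b).
            norm = tf[q] + k * (1 - b + b * len(d) / avg_len)
            score += idf * tf[q] * (k + 1) / norm
        scores.append(score)
    return scores

# Toy corpus (hypothetical): the first document repeats "the" many times.
docs = [
    "the the the the the cat".split(),
    "the president spoke today".split(),
    "a cat sat on a mat".split(),
]
scores = bm25_scores("the president".split(), docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(best, scores)
```

On this toy corpus the document that mentions "president" once outranks the one that repeats "the" five times, which is the behavior the "the president" example asks for.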

Scoring Function¶

Choose document to Maximize $score(query,document)$

- PageRank - Weight document (webpages) matches based on network connectivity

- find set of documents which match query

- rank based on "authority" as determined by links to document

- Intended to solve the "tyranny of TF scores" (term frequencies, used as the weighting in the previous approach).

- e.g. query = "CNN": presumably "cnn.com" is the search result with the most authority, yet other pages may use the word more often, because cnn.com mostly reports the changing news rather than talking about CNN itself. Meanwhile "ihatecnn.com" will mention CNN constantly as it obsesses over CNN's mistakes.

- HITS (Hyperlink-Induced Topic Search)

- find set of documents which match query

- rank based on authority within set of matches only
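As a rough illustration of link-based authority, the following sketch runs PageRank by power iteration on a tiny hand-made link graph. The damping factor 0.85, the iteration count, and the pages `blog.example` and `news.example` are assumptions for the example; only `cnn.com` and `ihatecnn.com` come from the text above.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power iteration on a dict mapping each page to the pages it links to."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page receives a small baseline share of rank.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, outlinks in links.items():
            # A page with no outlinks spreads its rank evenly over all pages.
            targets = outlinks if outlinks else pages
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank

# Hypothetical link graph: three pages link to cnn.com.
links = {
    "cnn.com": ["news.example"],
    "ihatecnn.com": ["cnn.com"],
    "blog.example": ["cnn.com"],
    "news.example": ["cnn.com"],
}
rank = pagerank(links)
top = max(rank, key=rank.get)
print(top, rank)
```

Here `cnn.com` ends up with the highest rank because of its incoming links, regardless of how often any page uses the word "CNN".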

Plagiarism detection¶

- corpus contains potential sources of plagiarism

- need best match and a score which evaluates degree of similarity

- security/hacking problem - the query is intentionally obfuscated to reduce scores

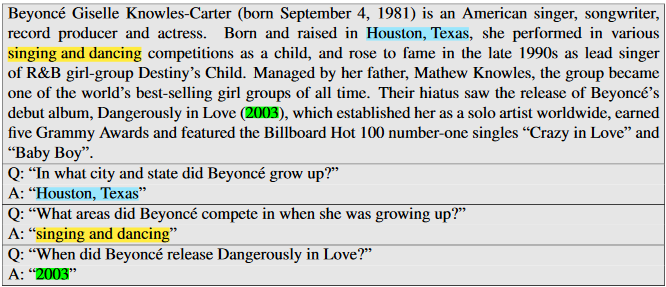

Question Answering (QA)¶

- A general A.I. task

- also addressed as variant of I.R. systems

- The query is a full question: [Who killed Abraham Lincoln?]

- JM Chapter 23: IR, Knowledge-based, & Neural methods

How might you apply the I.R. framework for QA?

II. Simple (more or less) Machine Learning Problems¶

Formal Machine Learning Framework & Jargon¶

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$.

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x_{(i)})\approx y_i$ (for all $i$)

Define a loss function $L(f(\mathbf x), y)$ to minimize by changing $f(\cdot)$ ...by adjusting the weights.

Problem: machine learning uses vectors of numbers¶

How do we apply it to text?

Solutions:¶

- directly use ascii??

- one-hot encoding

- embedding
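To make the one-hot option concrete, here is a minimal sketch in plain Python that maps words to one-hot vectors and sums them into a bag-of-words vector for a whole text. The helper names (`build_vocabulary`, `one_hot`, `bag_of_words`) and the whitespace tokenization are assumptions made for the example.

```python
def build_vocabulary(texts):
    """Map each distinct lowercase word to a column index."""
    words = sorted({w for t in texts for w in t.lower().split()})
    return {w: i for i, w in enumerate(words)}

def one_hot(word, vocab):
    """Vector of zeros with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab[word]] = 1
    return vec

def bag_of_words(text, vocab):
    """Sum of the one-hot vectors of the words in `text`; unknown words are skipped."""
    vec = [0] * len(vocab)
    for w in text.lower().split():
        if w in vocab:
            vec[vocab[w]] += 1
    return vec

texts = ["the cat sat", "the dog sat down"]
vocab = build_vocabulary(texts)   # {'cat': 0, 'dog': 1, 'down': 2, 'sat': 3, 'the': 4}
print(one_hot("dog", vocab))
print(bag_of_words("the cat sat on the mat", vocab))
```

Every text becomes a vector of the same length (the vocabulary size), which is the form a standard classifier expects. Word order is lost in the process.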

Spam detection¶

- Spam: Wholesale Fashion Watches -57% today. Designer watches for cheap ...

- Spam: You can buy ViagraFr \$1.85 All Medications at unbeatable prices! ...

- Spam: WE CAN TREAT ANYTHING YOU SUFFER FROM JUST TRUST US ...

- Spam: Sta.rt earn*ingthe salary yo,u d-eserve by o’btainingthe prope,rcrede’ntials!

- Ham: The practical significance of hypertreewidth in identifying more ...

- Ham: Abstract: We will motivate the problem of social identity clustering: ...

- Ham: Good to see you my friend. Hey Peter, It was good to hear from you. ...

- Ham: PDS implies convexity of the resulting optimization problem (Kernel Ridge...

Find $c$ which maximizes $P(c|text)$ where $c \in \{spam,ham\}$.

Exercise: describe an approach to implement this with some canned classifier (e.g. sklearn) and some text.
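One possible approach, sketched under assumptions: count words with `CountVectorizer` and let a multinomial naive Bayes classifier pick the class with the larger $P(c|text)$. The training sentences below are shortened paraphrases of the examples above, invented for illustration, and far too few for a real filter.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny hypothetical training set: three spam and three ham messages.
train_texts = [
    "cheap designer watches buy today",
    "buy medications at unbeatable prices",
    "earn the salary you deserve buy credentials",
    "abstract we motivate the problem of clustering",
    "good to hear from you my friend",
    "convexity of the resulting optimization problem",
]
train_labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

# Text -> word-count vectors -> naive Bayes estimate of P(c | text).
model = make_pipeline(CountVectorizer(), MultinomialNB())
model.fit(train_texts, train_labels)

new_texts = ["buy cheap watches", "the clustering problem"]
pred = model.predict(new_texts)
proba = model.predict_proba(new_texts)  # columns follow model.classes_
print(pred)
print(model.classes_, proba)
```

The same pipeline applies unchanged to language or author identification. Only the labels and the training text differ.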

Language Identification¶

- “Hello, world”

- “Wie geht es dir”

Find $c$ which maximizes $P(c|text)$, where $c \in \{English,Spanish, German, ...\}$

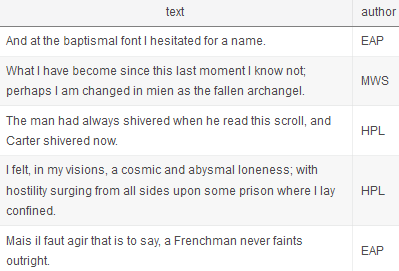

Author identification¶

- Authorship: find $c$ which maximizes $P(c|text)$, where $c \in \{EAP, MWS, HPL, ...\}$

- Plagiarism detection: $c \in \{student, not\_student\}$

Sentiment analysis¶

- Simple approach - classify based on positive vs. negative words: "awesome" vs. "sucks"

- High-level approach - classify based on high-level semantic understanding of text?

- one of the most popular and widely studied NLP tasks (Pang et al., 2002)

- won the Test-of-time award at NAACL 2018.

Other classification problems¶

- topic classification

- ...

Topic Modeling¶

- Determine small set of topics for corpus of documents, e.g. politics, art, sports

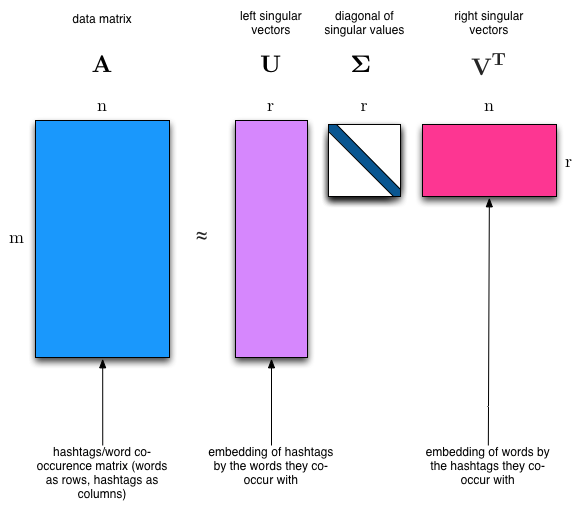

- Unsupervised learning problem - factor word-document matrix - SVD, NNMF, Latent Dirichlet Allocation

Classifier Performance¶

- Accuracy

- Precision

- Recall

When would we want to use something other than accuracy?

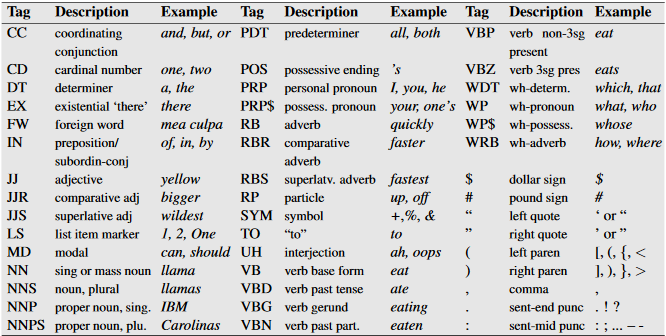
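The three metrics can be computed directly from the counts of true/false positives and negatives. The sketch below uses a made-up, imbalanced set of ten labels (1 = spam) to show how accuracy can look good while precision and recall do not; the helper name `classifier_metrics` is an assumption.

```python
def classifier_metrics(y_true, y_pred, positive=1):
    """Return (accuracy, precision, recall) for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when nothing is predicted/labeled positive.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall

# Hypothetical data: only 2 of 10 messages are spam.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 1]
accuracy, precision, recall = classifier_metrics(y_true, y_pred)
print(accuracy, precision, recall)   # 0.8 0.5 0.5
```

With rare positive classes, a classifier that predicts "ham" for everything would also reach 80% accuracy here while having zero recall.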

III. Low Level Parsing etc.¶

"Information Extraction"¶

Acquiring knowledge by skimming a text for

- occurrences of a particular class of object

- relationships among objects

Tasks:

- Web crawling/scraping/extraction

- extracting dates or prices by matching pattern: e.g [\$][0-9]+([.][0-9][0-9])?

- probabilistic models: HMM, CRF, ...

- Relationship extraction

- Named entity recognition (NER)

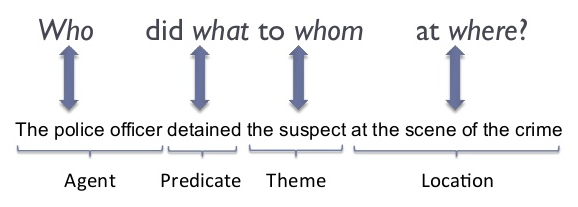

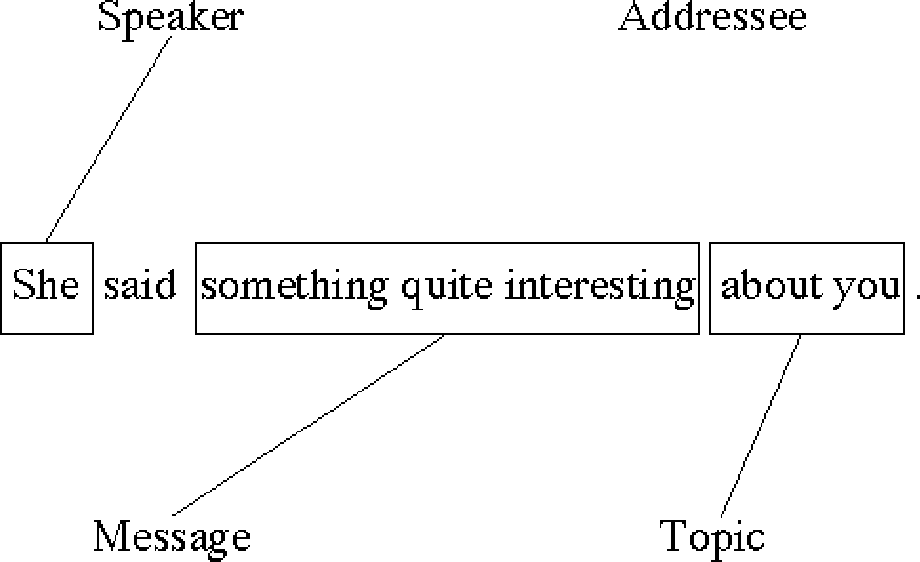

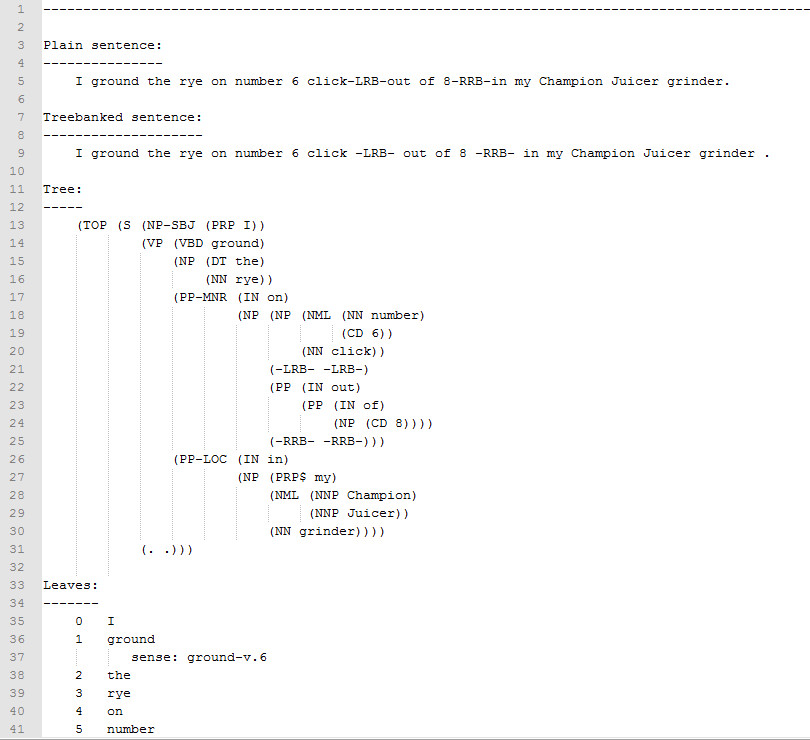
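The price pattern listed among the tasks above can be tried out with Python's `re` module. This is a small sketch on an invented sentence; `finditer` is used rather than `findall` because the pattern contains a capturing group.

```python
import re

# Pattern from the task list: a dollar sign, digits, and optionally two decimals.
price_pattern = re.compile(r"[\$][0-9]+([.][0-9][0-9])?")

text = "Designer watches from $57, medications at $1.85, shipping $4.5 extra."
prices = [m.group(0) for m in price_pattern.finditer(text)]
print(prices)   # ['$57', '$1.85', '$4']
```

The last match shows the brittleness of hand-written patterns: "$4.5" is only partly matched because the optional group requires exactly two decimal digits. That brittleness is one motivation for the probabilistic models listed above.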

Semantic Role Labeling¶

- shallow semantic parsing

- Fit a particular chosen sentence model to text data

- e.g.: model = $<agent>$ did $<action>$ to $<object>$.

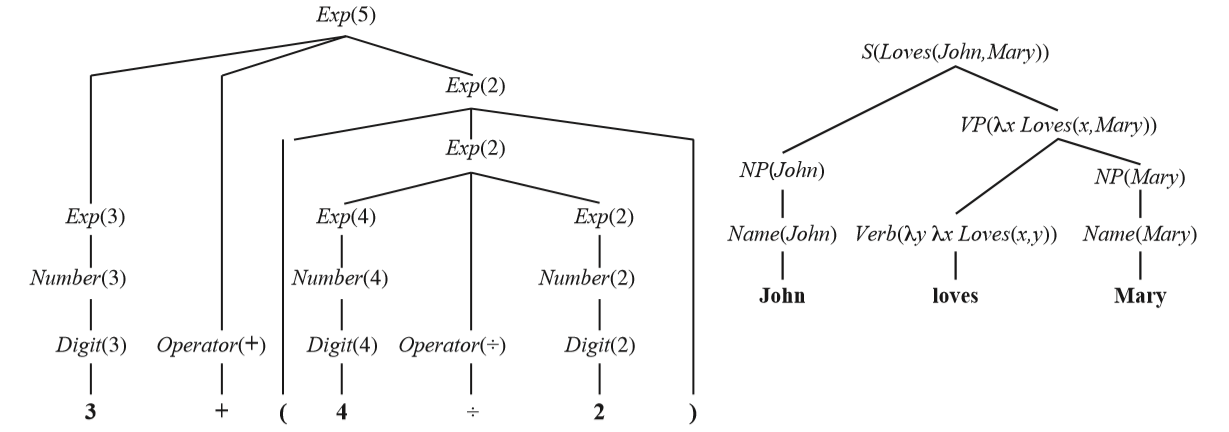

Syntactic Parsing¶

- Fit rules of language to text - form parse tree

- JM Chapter 11, CKY (dynamic programming) algorithm

- JM Chapter 12 - probabilistic context-free grammars

- JM Chapter 13 - dependency parsing

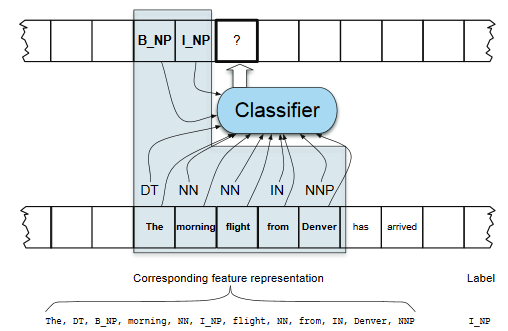

Machine Learning for Parsing¶

- JM Chapter 11.3, application to chunking (a kind of shallow or partial parsing)

- similar approach to other tasks, depending on what is used as features & labels

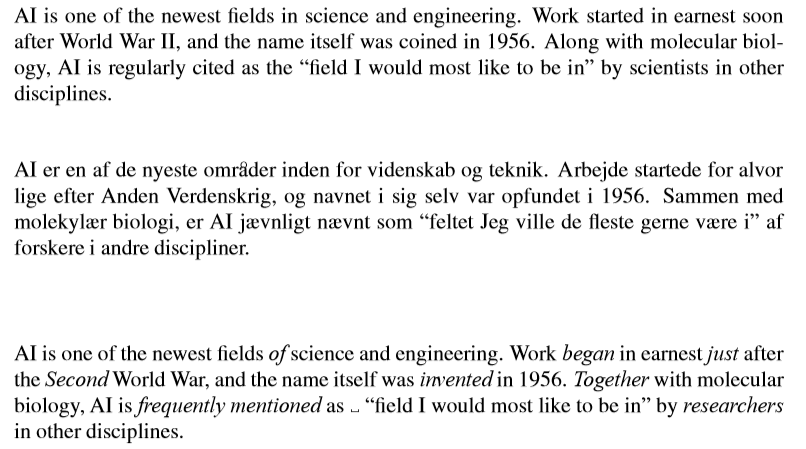

Machine Translation¶

Google translate, English to Danish to English:

- Rough translation, as provided by free online services, gives the “gist” of a foreign sentence or document, but contains errors.

- Pre-edited translation is used by companies to publish their documentation and sales materials in multiple languages. The original source text is written in a constrained language that is easier to translate automatically, and the results are usually edited by a human to correct any errors.

- Restricted-source translation works fully automatically, but only on highly stereotypical language, such as a weather report.

- JM Chapter 22 (TBD)

- RN Chapter 23.4

Bilingual evaluation understudy metric (BLEU; Papineni et al., 2002)¶

- measures similarity to human translations

- view output and reference as sets of $n$-grams and measure their overlap (number of matching elements); $n$ must be chosen

- A (modified) precision score - fraction of output which is accurate

- score of 0 = nothing in the translation is correct; score of 1 = same as the human translation

- enabled Machine Translation systems to scale up

- is still the standard metric for MT evaluation

- won the Test-of-time award at NAACL 2018.
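The "modified precision" at the core of BLEU can be sketched in a few lines: count the candidate's $n$-grams, but clip each count at the number of times that $n$-gram appears in the reference. This sketch handles a single reference and a single $n$. The full metric additionally combines several values of $n$ and applies a brevity penalty, which is omitted here. The function names are assumptions.

```python
from collections import Counter

def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, reference, n):
    """Fraction of candidate n-grams found in the reference, with clipped counts."""
    cand_counts = Counter(ngrams(candidate, n))
    ref_counts = Counter(ngrams(reference, n))
    if not cand_counts:
        return 0.0
    # Clipping stops a candidate from scoring well by repeating one correct word.
    clipped = sum(min(count, ref_counts[g]) for g, count in cand_counts.items())
    return clipped / sum(cand_counts.values())

reference = "the cat is on the mat".split()
candidate = "the the the the the the the".split()
p1 = modified_precision(candidate, reference, 1)   # 2/7: "the" is clipped at 2
p2 = modified_precision(candidate, reference, 2)   # 0.0: no bigram matches
print(p1, p2)
```

Without clipping, the degenerate candidate above would get a unigram precision of 1, since every one of its words occurs in the reference.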

IV. High-Level Semantics¶

Question answering¶

- JM Chapter 23 (Question answering)

Watson "DeepQA"

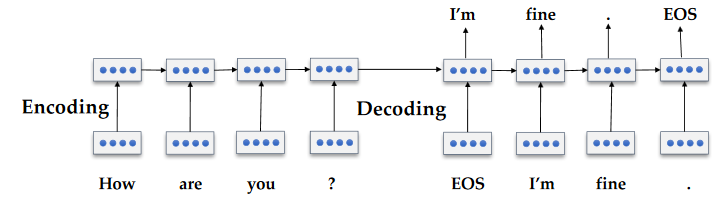

Chatbots¶

- Simple automated phone systems - VoiceXML

- a.k.a. Conversational agents

- JM Chapter 24 (Dialog Systems and Chatbots), 24.1.2 - sequence to sequence chatbots

Adaptation of machine translation technique

V. Data sources¶

Kinds of data¶

- Labeled - ranging from simple metadata or a single word, to completely human-parsed into an easily accessible framework

- datasets specifically made for specific task, e.g. SQuAD and wikiQA for QA

- Unlabeled - vastly more of this to be found

Canned and online datasets¶

- NLTK

- scikit-learn

- Tensorflow / keras

- https://github.com/awesomedata/awesome-public-datasets#natural-language

- https://www.kaggle.com/tags/nlp

FrameNet project¶

(Baker et al., 1998), object-oriented approach to linguistics

- International Computer Science Institute in Berkeley

- Frame: represents a situation or task along with its members, etc.

- -Net: the relations between frames

- Annotated texts in XML format

Conference on Natural Language Learning (CoNLL)¶

Source of datasets and many NLP tasks, "annual task bake-off"

- CoNLL shared task datasets

- semantic role labelling task, a form of shallow semantic parsing

- chunking (Tjong Kim Sang et al., 2000)

- named entity recognition (Tjong Kim Sang et al., 2003)

- dependency parsing (Buchholz et al., 2006)

- ...many other tasks

- nltk.corpus.reader.conll

- 2019 task: "Cross-Framework Meaning Representation Parsing (MRP 2019). The goal of the task is to advance data-driven parsing into graph-structured representations of sentence meaning." http://mrp.nlpl.eu/

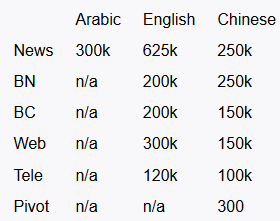

OntoNotes (Hovy et al., 2006)¶

- a large multilingual corpus with multiple annotations and high interannotator agreement

- has been used for the training and evaluation of a variety of tasks

- e.g. Dependency parsing and coreference resolution.

OntoNotes example¶

Wikipedia¶

- one of the most useful resources for training ML methods (Milne and Witten 2008)

- entity linking and disambiguation

- language modelling

- as a knowledge base

- a variety of other tasks.

Distant supervision (Mintz et al., 2009)¶

- A semi-supervised machine learning method - combine small amount of labeled data with large amount of unlabeled data

- use information from heuristics or existing knowledge bases to generate noisy patterns that can be used to automatically extract examples from large corpora.

- has been used extensively and is a common technique in relation extraction, information extraction, and sentiment analysis, among other tasks.

Relation extraction example: determine relationships between words in text.

- Use a database of known related word pairs, and a large dataset of unlabeled text.

- Find sentences in the unlabeled text which contain the word pairs, and label these sentences as indicating the relation


- train a model using the labeled sentences
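The labeling step of this relation extraction example can be sketched as follows. The word pairs, the relation name `capital_of`, and the sentences are invented for illustration; the final training step is left out.

```python
# Hypothetical knowledge base of known related word pairs.
known_pairs = {
    ("Paris", "France"): "capital_of",
    ("Berlin", "Germany"): "capital_of",
}

# Hypothetical unlabeled text, already split into sentences.
unlabeled = [
    "Paris is the capital of France .",
    "Berlin has been the capital of Germany since reunification .",
    "Paris hosted a fashion show .",
    "Many tourists fly from Berlin to France .",
    "Paris is far from the south of France .",
]

def label_sentences(sentences, pairs):
    """Label every sentence that mentions both words of a known pair."""
    labeled = []
    for sentence in sentences:
        tokens = set(sentence.split())
        for (a, b), relation in pairs.items():
            if a in tokens and b in tokens:
                labeled.append((sentence, a, b, relation))
    return labeled

labeled = label_sentences(unlabeled, known_pairs)
for example in labeled:
    print(example)
```

The last sentence is labeled `capital_of` even though it does not express that relation. Distant supervision yields noisy labels like this, and the model trained on them has to tolerate the noise.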

VI. Project I: NLP using sklearn¶

Project I¶

The goal of this project is to use a sklearn classifier to discriminate between sentences or paragraphs of two different corpuses, e.g. to try and determine the true author of a string of text. There are two key outcomes for this project:

- Coding of the basic text-to-vector conversion at a low level

- Implementation of an entire machine learning process to build the basic understanding needed for this course

Those already familiar with sklearn, machine learning, and processing text can choose to do a more sophisticated task.

Machine Learning Framework using Sklearn¶

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$ --> lists of samples $\verb|X|$ and labels $\verb|y|$

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x_{(i)})\approx y_i$ (for all $i$) --> choose sklearn estimator to use

Define a loss function $L(f(\mathbf x), y)$ to minimize by changing $f(\cdot)$ ...by adjusting the weights --> default choices for estimators, sometimes multiple options

The Sklearn API¶

sklearn has an Object Oriented interface

Most models/transforms/objects in sklearn are Estimator objects

    class Estimator(object):

        def fit(self, X, y=None):
            """Fit model to data X (and y)"""
            # some_fitting_method stands in for the model-specific fitting code
            self.some_attribute = self.some_fitting_method(X, y)
            return self

        def predict(self, X_test):
            """Make prediction based on passed features"""
            # make_prediction stands in for the model-specific prediction code
            pred = self.make_prediction(X_test)
            return pred

    model = Estimator()

The Estimator class defines a fit() method as well as a predict() method. For an instance of an Estimator stored in a variable model:

- model.fit: fits the model with the passed-in training data. For supervised models, it also accepts a second argument y that corresponds to the labels (model.fit(X, y)). For unsupervised models, there are no labels, so you only need to pass in the feature matrix (model.fit(X)). Since the interface is very OO, the instance itself stores the results of the fit internally, so you must always fit() before you predict() on the same object.

- model.predict: predicts new labels for any new datapoints passed in (model.predict(X_test)) and returns an array, equal in length to the number of rows passed in, containing the predicted labels.

Types of subclass of estimator:¶

- Supervised

- Unsupervised

- Feature Processing

Supervised¶

Supervised estimators in addition to the above methods typically also have:

- model.predict_proba: for classifiers that have a notion of probability (or some measure of confidence in a prediction), this method returns those "probabilities". The label with the highest probability is what is returned by the model.predict() method from above.

- model.score: for both classification and regression models, this method returns some measure of validation of the model (which is configurable). For example, the default is typically R^2 for regression and accuracy for classification.

Unsupervised - Transformer interface¶

Some estimators in the library implement this.

Unsupervised in this case refers to any method that does not need labels, including unsupervised classifiers, preprocessing (like tf-idf), dimensionality reduction, etc.

The transformer interface usually defines two additional methods:

- model.transform: given an unsupervised model, transform the input into a new basis (or feature space). This accepts one argument (usually a feature matrix) and returns a matrix of the transformed input. Note: you need to fit() the model before you transform with it.

- model.fit_transform: for some models you may not need to fit() and transform() separately. In these cases it is more convenient to do both at the same time.
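As an illustration of the transformer interface, the sketch below uses `TfidfVectorizer` (the tf-idf preprocessing mentioned above) on two invented training sentences, then reuses the fitted object on a new sentence.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

train_docs = ["the cat sat", "the dog sat down"]
new_docs = ["the cat sat down"]

vectorizer = TfidfVectorizer()

# fit_transform learns the vocabulary and idf weights, then transforms in one call.
X_train = vectorizer.fit_transform(train_docs)

# transform reuses what was learned; new text is mapped into the same columns.
X_new = vectorizer.transform(new_docs)

print(sorted(vectorizer.vocabulary_))   # ['cat', 'dog', 'down', 'sat', 'the']
print(X_train.shape, X_new.shape)       # (2, 5) (1, 5)
```

Calling `transform` on new data, rather than `fit_transform`, keeps the feature columns identical between training and test data.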

    from sklearn.neighbors import KNeighborsClassifier

    # Four 1-D training samples with binary labels
    X = [[0], [1], [2], [3]]
    y = [0, 0, 1, 1]

    neigh = KNeighborsClassifier(n_neighbors=3)
    neigh.fit(X, y)

    print(neigh.predict([[1.1]]))        # predicted label
    print(neigh.predict_proba([[0.9]]))  # fraction of neighbors voting for each class

Output:

    [0]
    [[0.66666667 0.33333333]]

General Scikit Use¶

Import the module:

    from sklearn.neighbors import KNeighborsClassifier

Construct an instance of the class. Hyperparameters are generally set here:

    neigh = KNeighborsClassifier(n_neighbors=1)

Fit the model to your dataset using a subset (e.g. 70 percent) of the samples:

    neigh.fit(X_train, y_train)

For supervised methods, test accuracy using different samples than were used for training:

    y_pred = neigh.predict(X_test)  # predict() takes only the features, not the labels
    accuracy = sum(y_pred == y_test) / len(y_test)  # note predictions may be non-binary
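Put together, the steps above might look like the following runnable sketch. The synthetic 1-D dataset, the use of `train_test_split` with `stratify`, and `random_state=0` are assumptions made so the example is self-contained. A real project would substitute text vectors for `X`.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Synthetic, well-separated data: class 0 at 0..9, class 1 at 20..29.
X = np.array([[v] for v in list(range(10)) + list(range(20, 30))])
y = np.array([0] * 10 + [1] * 10)

# Hold out 30 percent of the samples for testing, keeping classes balanced.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

neigh = KNeighborsClassifier(n_neighbors=1)
neigh.fit(X_train, y_train)

y_pred = neigh.predict(X_test)
accuracy = np.mean(y_pred == y_test)
print(accuracy, neigh.score(X_test, y_test))
```

For classifiers, `score` reports the same accuracy as the manual computation, so either form can be used.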