Unstructured Data & Natural Language Processing

Keith Dillon

Spring 2020

Topic 11: Attention

This topic:¶

- Attention & Memory

- Encoder-decoder RNNs with attention

- Transformer networks

Reading:¶

- J&M Chapter 10 (Encoder-Decoder Models, Attention, and Contextual Embeddings)

- Young et al, "Recent trends in deep learning based natural language processing" 2018

- Sebastian Ruder, "A Review of the Neural History of Natural Language Processing" 2018

- Vaswani et al, "Attention Is All You Need" (Transformer networks) 2017

- Devlin et al, "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding", 2018

I. Attention & Memory¶

Attention¶

"the key idea that enabled NMT models to outperform classic phrase-based MT systems"

Xu et al 2015, "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention"

Memory-based Networks¶

Reading from memory - output memory state most similar to input state

Writing to memory - creating readable states

- Neural Turing Machines (Graves et al., 2014)

- Memory Networks (Weston et al., 2015)

- End-to-end Memory Networks (Sukhbaatar et al., 2015)

- Dynamic Memory Networks (Kumar et al., 2015)

- Differentiable Neural Computer (Graves et al., 2016)

- Recurrent Entity Network (Henaff et al., 2017)
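The read operation described above ("output the memory state most similar to the input state") can be sketched in a few lines. This is only a minimal illustration: it assumes cosine similarity as the similarity measure and a hard (argmax) read, and the names `cosine_similarity` and `read_memory` are hypothetical. The models listed above typically use soft, differentiable variants of this idea.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (assumed similarity measure)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def read_memory(query, memory):
    """Return (index, slot) of the memory slot most similar to the query."""
    similarities = [cosine_similarity(query, slot) for slot in memory]
    best = max(range(len(memory)), key=lambda i: similarities[i])
    return best, memory[best]

# Toy memory with three stored states (values chosen only for illustration).
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
query = [0.1, 0.9, 0.2]
index, slot = read_memory(query, memory)
print(index, slot)
```

Replacing the argmax with a softmax over the similarities gives a weighted read, which is essentially the attention mechanism discussed in the rest of this topic.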

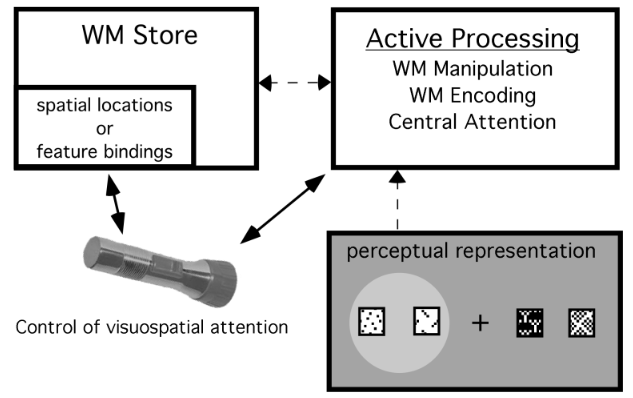

Attention - Cognitive science perspective¶

- Prior knowledge is used in processing a scene

- Important details receive more focus

- Effect is seen at lowest levels of vision

Fougnie 2008, "The Relationship between Attention and Working Memory"

Attention - network implementation¶

Weighted linear combination(s) of encoded input

Bahdanau et al 2015, "Neural Machine Translation by Jointly Learning to Align and Translate"

II. RNNs with Attention¶

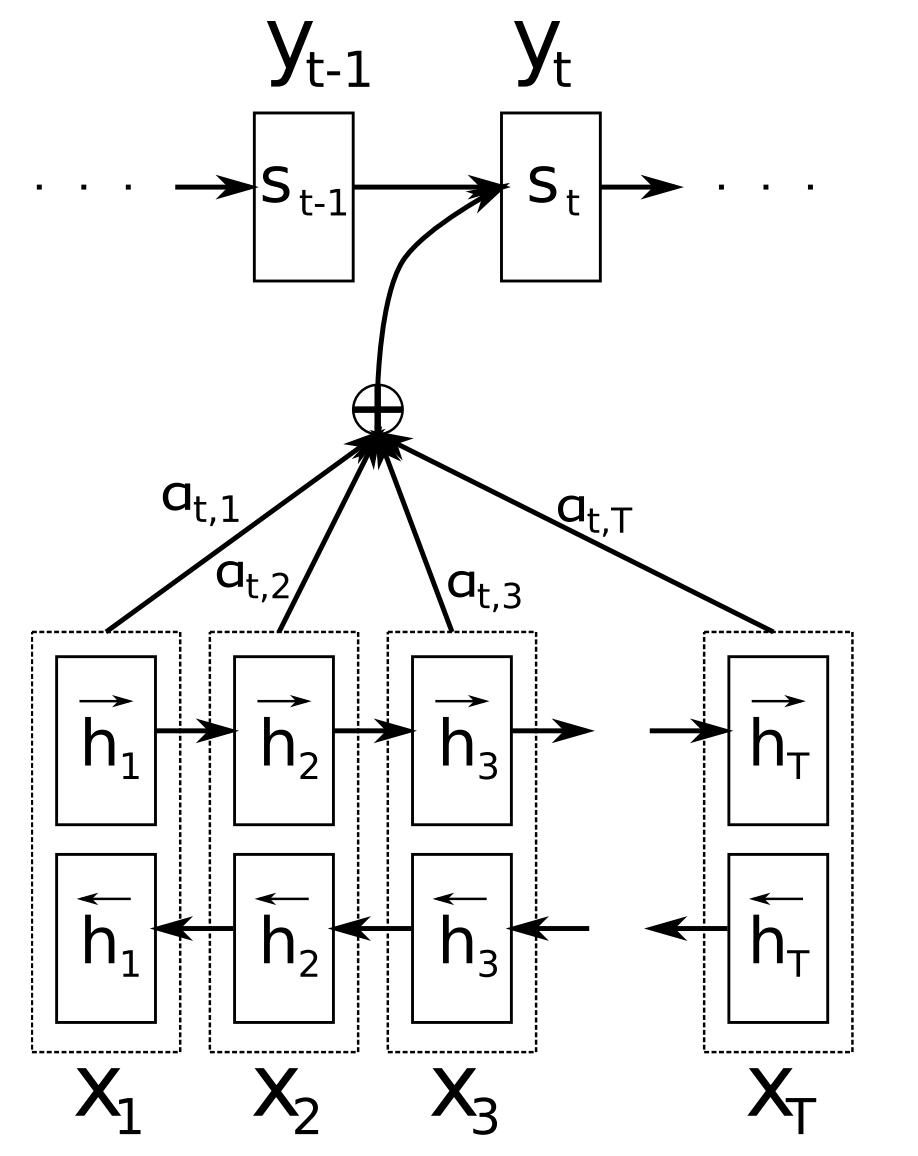

Attention¶

- More sophisticated context mechanism

- Replace the static context vector with one that is dynamically derived from the encoder hidden states at each point during decoding (i.e. a function of encoder outputs)

- Pass as input to decoding at each sequence step

Step 1. Compute a score that measures how relevant each encoder hidden state $\mathbf h_j^{(e)}$ is to the previous decoder hidden state $\mathbf h_{i-1}^{(d)}$.

Dot product $$ score(\mathbf h_{i-1}^{(d)}, \mathbf h_j^{(e)}) = \mathbf h_{i-1}^{(d)}\cdot \mathbf h_j^{(e)} $$

Weighted dot product $$ score(\mathbf h_{i-1}^{(d)}, \mathbf h_j^{(e)}) = (\mathbf h_{i-1}^{(d)})^T \mathbf W_s \mathbf h_j^{(e)} $$

Get one number for each encoder state $j$, relating it to the current ($i$th) decoder step.
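As a rough sketch of Step 1, the two scoring functions can be written in plain Python. The vectors and the matrix `W_s` below are made-up toy values, and the function names `dot_score` and `bilinear_score` are hypothetical; in a real model `W_s` would be learned during training.

```python
def dot_score(h_dec, h_enc):
    """Dot-product score between a decoder state and one encoder state."""
    return sum(d * e for d, e in zip(h_dec, h_enc))

def bilinear_score(h_dec, W_s, h_enc):
    """Weighted dot product h_dec^T W_s h_enc.

    W_s is a list of rows with shape (len(h_dec), len(h_enc)).
    """
    # Compute W_s h_enc first, then take the dot product with h_dec.
    projected = [sum(w * e for w, e in zip(row, h_enc)) for row in W_s]
    return dot_score(h_dec, projected)

# Toy values, chosen only for illustration.
h_dec_prev = [1.0, 0.0]                               # h_{i-1}^{(d)}
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # h_j^{(e)}, j = 0..2
W_s = [[0.0, 1.0], [1.0, 0.0]]                        # swaps the two encoder dimensions

# One score per encoder state j.
dot_scores = [dot_score(h_dec_prev, h) for h in encoder_states]
bilinear_scores = [bilinear_score(h_dec_prev, W_s, h) for h in encoder_states]
print(dot_scores, bilinear_scores)
```

The plain dot product requires encoder and decoder states to have the same dimension; the weighted form lifts that restriction and lets the network learn which dimensions should be compared.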

Step 2. Use softmax over the scores to normalize across the encoder states

\begin{align} \alpha_{ij} &= softmax(score(\mathbf h_{i-1}^{(d)}, \mathbf h_j^{(e)})) \\ &= \frac{\exp(score(\mathbf h_{i-1}^{(d)}, \mathbf h_j^{(e)}))}{\sum_k\exp(score(\mathbf h_{i-1}^{(d)}, \mathbf h_k^{(e)}))} \end{align}

Context vector = linear combination of encoder state vectors, weighted by normalized scores

$$ \mathbf c_i = \sum_j \alpha_{ij} \mathbf h_j^{(e)} $$
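The softmax and context-vector computation can be sketched as follows, assuming the plain dot-product score. The helper names `softmax` and `attention_context` and the toy vectors are illustrative choices, not part of any particular library.

```python
import math

def softmax(scores):
    """Normalize a list of scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_context(h_dec_prev, encoder_states):
    """Return (alphas, context) for one decoding step, using dot-product scores."""
    scores = [sum(d * e for d, e in zip(h_dec_prev, h)) for h in encoder_states]
    alphas = softmax(scores)  # alpha_{ij} for each encoder position j
    dim = len(encoder_states[0])
    # c_i = sum_j alpha_{ij} h_j^{(e)}, computed one coordinate at a time.
    context = [sum(a * h[k] for a, h in zip(alphas, encoder_states))
               for k in range(dim)]
    return alphas, context

# Toy example: the decoder state points along the first encoder state.
h_dec_prev = [1.0, 0.0]
encoder_states = [[2.0, 0.0], [0.0, 2.0]]
alphas, context = attention_context(h_dec_prev, encoder_states)
print(alphas, context)
```

Because the first encoder state has the larger score, it receives the larger weight, and the context vector is pulled toward it. The context is recomputed at every decoding step $i$, since $\mathbf h_{i-1}^{(d)}$ changes.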

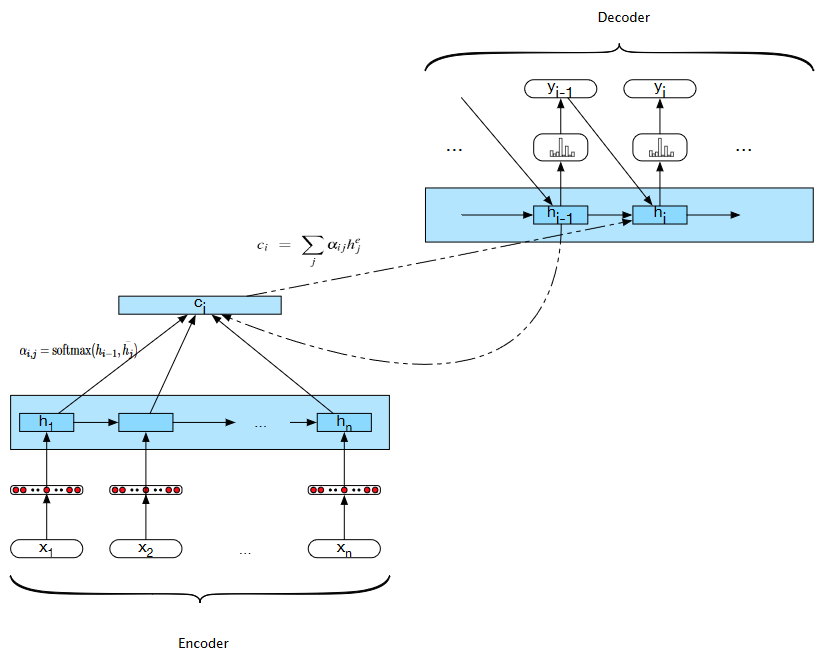

Encoder-decoder network with attention. Computing the value for $\mathbf h_i$ is based on the previous hidden state, the previous word generated, and the current context vector $\mathbf c_i$. This context vector is derived from the attention computation based on comparing the previous hidden state to all of the encoder hidden states.

III. Transformer Networks¶

Recall: Sequence Optimality Problem¶

- Greedy decoding picks the most likely individual output at each step of the sequence, but a sequence of individually most-likely outputs may not be very likely overall (e.g. "the the the")

- Want a way of preferentially getting likely sequences, as done with Markov models

- Dynamic programming tricks to make this practical (like Viterbi decoding) can't be easily done here due to recurrent feedback of chosen outputs -- output distributions vary depending on prior sequence
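A toy example may make the problem concrete. The conditional probability table below is entirely made up (a hypothetical two-token vocabulary and length-2 sequences), and `next_token_probs` stands in for a decoder whose output distribution depends on the full prefix. The sketch compares greedy decoding against brute-force enumeration of all sequences.

```python
from itertools import product

VOCAB = ["a", "b"]

def next_token_probs(prefix):
    """Hypothetical model: P(next token | prefix), defined for prefixes of length < 2."""
    table = {
        (): {"a": 0.6, "b": 0.4},
        ("a",): {"a": 0.55, "b": 0.45},
        ("b",): {"a": 0.9, "b": 0.1},
    }
    return table[tuple(prefix)]

def sequence_prob(seq):
    """Joint probability of a sequence as a product of conditionals."""
    p = 1.0
    for t in range(len(seq)):
        p *= next_token_probs(seq[:t])[seq[t]]
    return p

def greedy_decode(length):
    """Pick the single most likely token at each step."""
    seq = []
    for _ in range(length):
        probs = next_token_probs(seq)
        seq.append(max(probs, key=probs.get))
    return tuple(seq)

def exhaustive_decode(length):
    """Score every possible sequence; cost grows exponentially with length."""
    return max(product(VOCAB, repeat=length), key=sequence_prob)

greedy = greedy_decode(2)
best = exhaustive_decode(2)
print(greedy, sequence_prob(greedy))
print(best, sequence_prob(best))
```

Greedy decoding commits to "a" at the first step because it is locally most likely, yet the sequence starting with "b" has the higher joint probability. Exhaustive search finds it, but only because the toy vocabulary and length are tiny.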

IV. BERT¶