Deep Learning

Keith Dillon

Spring 2019

Topic 8: Keras

Today:

- Keras Quick Overview & Demo

- Implementing MLPs in Keras

- Normalizing data

- Comparing Keras MLP with ours

- Multi-category classifier in Keras

Reading:

- Chollet: Chapter 1

- Chollet: Chapter 4

- GBC: Chapter 8

- "Getting Started with TensorFlow and Deep Learning: SciPy 2018 Tutorial", Josh Gordon, https://www.youtube.com/watch?v=tYYVSEHq-io, https://www.tensorflow.org/tutorials/keras/

$\mathbf x$ - input data, e.g., a single image or a sentence of text

$\mathbf y$ - prediction, e.g., "cat" vs "dog", or "positive" vs "negative"

$f(\cdot)$ - mapping from input to output, i.e., the function we fit

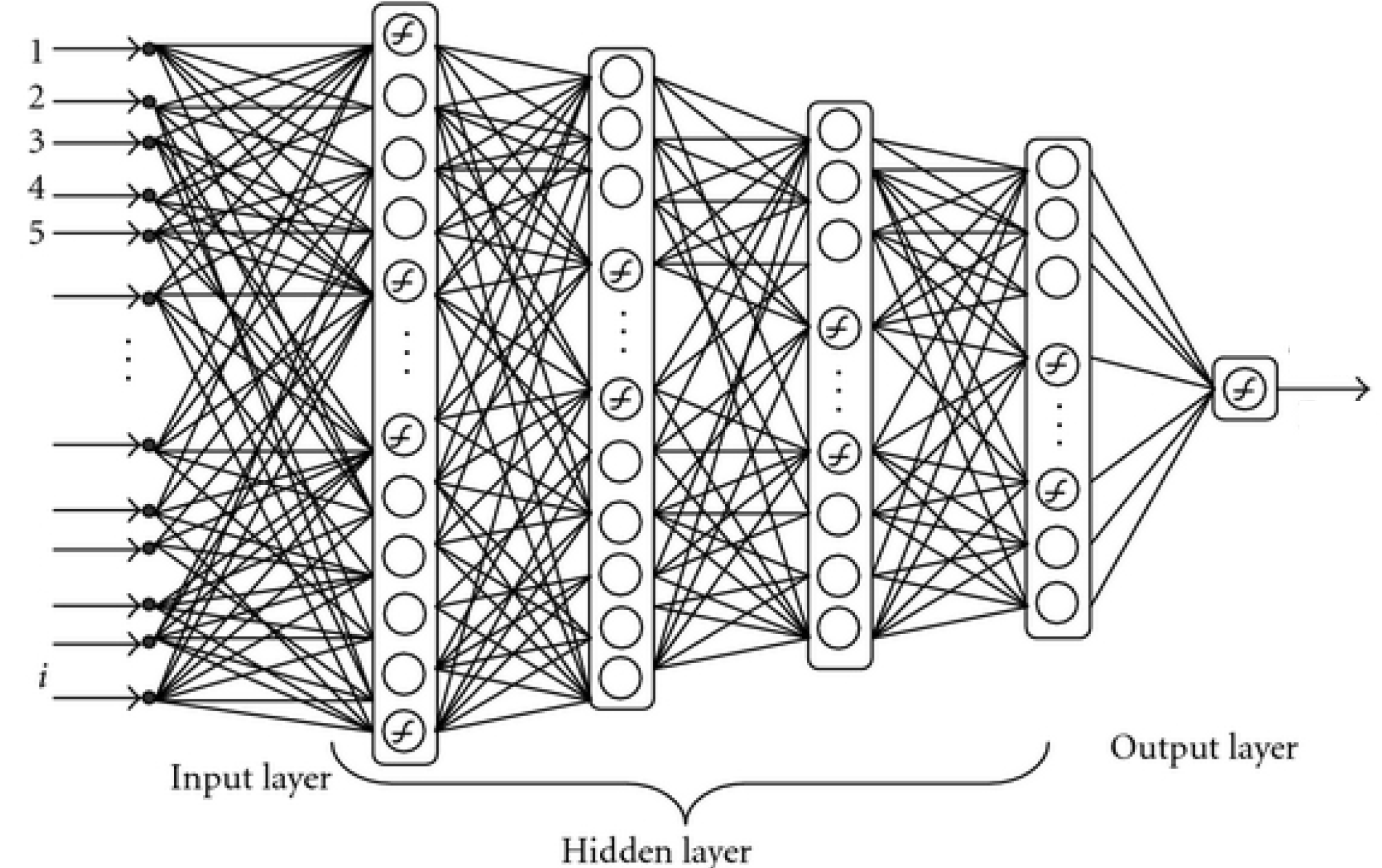

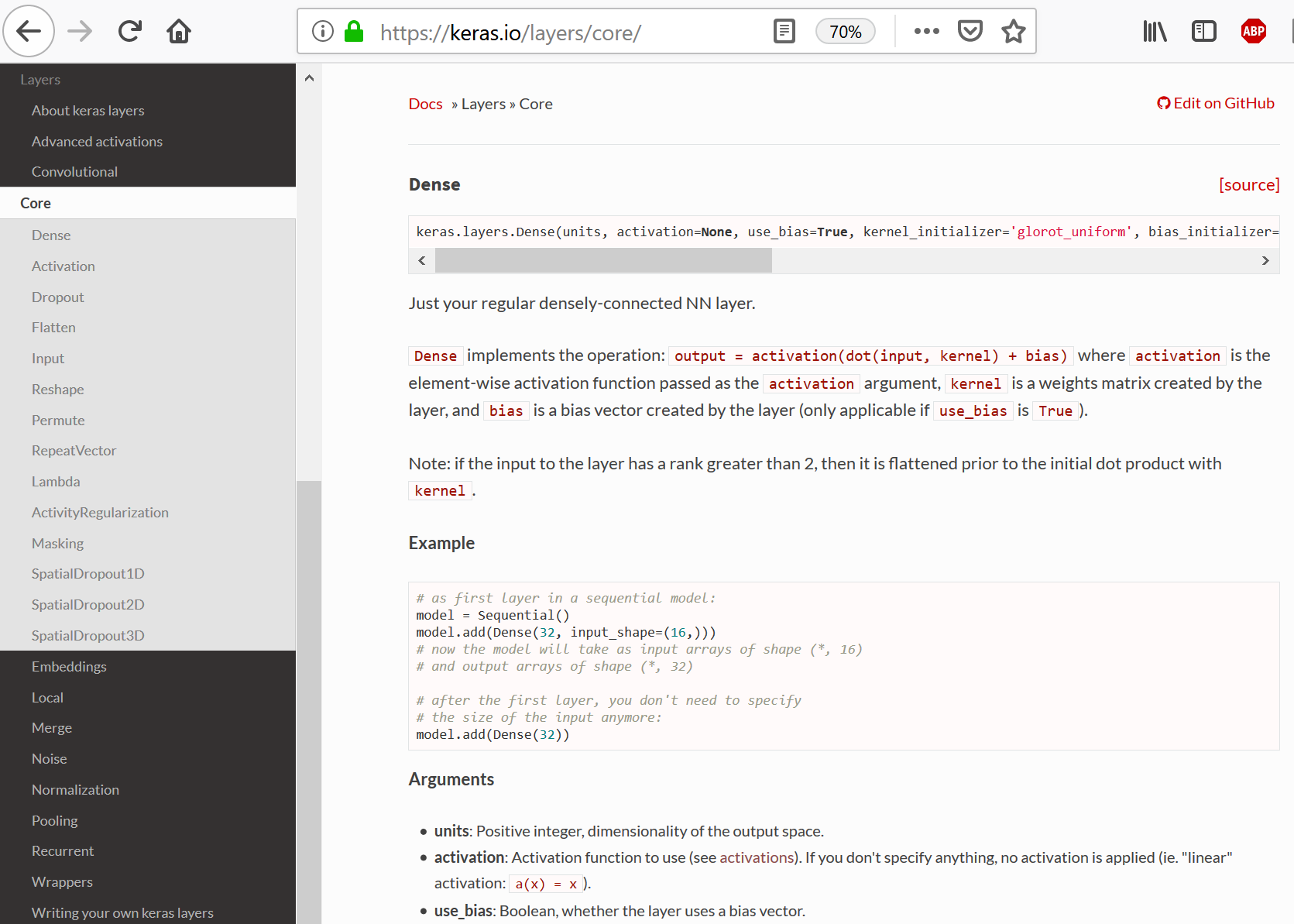

Layers in Keras

Connection decisions: fully-connected layers, convolutional layers

A unified way to define other aspects of the network: activation functions, dropout, even preprocessing steps

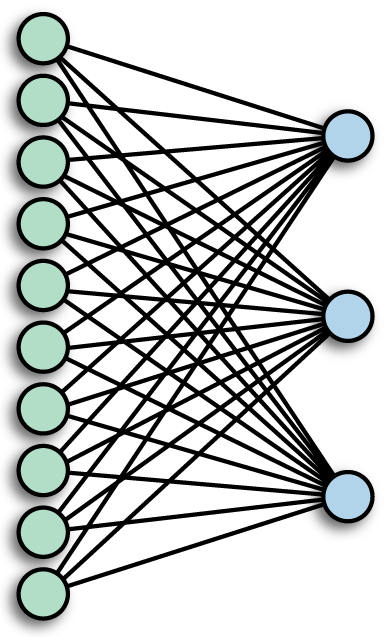

Fully-connected Layer

a.k.a. densely-connected layer: every input connects to every output, and each connection has its own weight
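Here is a rough NumPy sketch of what "every input connects to every output" means numerically. Each output unit takes a weighted sum of all the inputs, adds a bias, and applies an activation (ReLU here, as an example). The helper names `dense_forward` and `dense_param_count` are hypothetical and are not Keras functions. Keras's `Dense` uses the same batch-first layout: it multiplies a `(batch, n_in)` input by an `(n_in, n_out)` kernel. The count `n_in * n_out + n_out` should reproduce the `Param #` column that `model.summary()` reports for the 784-128-32-10 network later in these notes.

```python
import numpy as np

def relu(z):
    # Elementwise rectified linear unit: max(0, z)
    return np.maximum(0.0, z)

def dense_forward(X, W, b, activation=relu):
    """Hypothetical helper: forward pass of one fully-connected layer.

    X: (batch, n_in) inputs, W: (n_in, n_out) weights, b: (n_out,) biases.
    Every one of the n_in inputs contributes to every one of the n_out outputs.
    """
    return activation(X @ W + b)

def dense_param_count(n_in, n_out):
    # One weight per input-output connection, plus one bias per output
    return n_in * n_out + n_out

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 784))                 # a batch of 4 flattened 28x28 images
W = rng.normal(scale=0.01, size=(784, 128))   # small random weights
b = np.zeros(128)
H = dense_forward(X, W, b)
print(H.shape)                                # (4, 128)

sizes = [784, 128, 32, 10]                    # Flatten output, then three Dense layers
counts = [dense_param_count(n_in, n_out) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
print(counts, sum(counts))                    # [100480, 4128, 330] 104938
```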

Formal Machine Learning Framework & Jargon

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$.

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x_{(i)})\approx y_i$ (for all $i$)

Define a loss function $L(f(\mathbf x), y)$ and minimize it by changing $f(\cdot)$, i.e., by adjusting the weights.
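Written as an optimization problem, this needs two assumptions that the notes do not spell out. First, the loss is averaged over the $m$ training pairs. Second, $\mathbf w$ denotes all the weights and biases of $f$:

$$
\min_{\mathbf w} \; \frac{1}{m} \sum_{i=1}^{m} L\big(f(\mathbf x_{(i)}; \mathbf w),\ y_i\big)
$$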

Deep Network Design

Make up an architecture - choose layers and their parameters

Choose a loss function - how to compute the error when $f(\mathbf x_{(i)}) \ne y_i$

Choose an optimization method - many variants of the same basic method

Choose "regularization" tricks to prevent overfitting

Handle other important details, such as initializing weights and normalizing data

Keras example

"Deep Learning with Python", Francois Chollet, Ch. 2

Train your first neural network: basic classification

This tutorial is an updated version of the code from the book, available at:

- https://www.tensorflow.org/tutorials/keras/basic_classification

- https://github.com/tensorflow/docs/blob/master/site/en/tutorials/keras/basic_classification.ipynb

The following is a variation on the Chollet version.

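# TensorFlow, plus NumPy and Matplotlib (used throughout as np and plt)

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf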

print(tf.__version__)

1.11.0

import keras

keras.__version__

Using TensorFlow backend.

'2.2.4'

The Data

Look at the data and understand its structure

fashion_mnist = keras.datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',

'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']

print(train_images.shape)

print(test_images.shape)

print(train_labels)

(60000, 28, 28)

(10000, 28, 28)

[9 0 0 ... 3 0 5]

"Preprocessing"

Pixel values commonly range from 0 to 255 (8-bit unsigned integers).

We want to rescale them to lie between 0 and 1.
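This is a small sketch of that rescaling on a made-up 2x2 "image", not taken from the dataset. Dividing a `uint8` array by `255.0` produces `float64` values. Casting to `float32` first halves the memory per pixel, and `float32` is the default float type Keras works in.

```python
import numpy as np

# A made-up 2x2 "image" with 8-bit pixel values in 0..255
img = np.array([[0, 64],
                [128, 255]], dtype=np.uint8)

scaled = img / 255.0                     # true division: uint8 -> float64 in [0, 1]
print(scaled.dtype, scaled.min(), scaled.max())

# Casting first gives float32, which halves the memory per pixel
scaled32 = img.astype(np.float32) / 255.0
print(scaled32.dtype)
```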

pixel0 = train_images[0][0][0]

print(pixel0)

0

plt.imshow(train_images[0])

plt.colorbar();

train_images[0]

array([[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1,

0, 0, 13, 73, 0, 0, 1, 4, 0, 0, 0, 0, 1,

1, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 3,

0, 36, 136, 127, 62, 54, 0, 0, 0, 1, 3, 4, 0,

0, 3],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6,

0, 102, 204, 176, 134, 144, 123, 23, 0, 0, 0, 0, 12,

10, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 155, 236, 207, 178, 107, 156, 161, 109, 64, 23, 77, 130,

72, 15],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0,

69, 207, 223, 218, 216, 216, 163, 127, 121, 122, 146, 141, 88,

172, 66],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0,

200, 232, 232, 233, 229, 223, 223, 215, 213, 164, 127, 123, 196,

229, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

183, 225, 216, 223, 228, 235, 227, 224, 222, 224, 221, 223, 245,

173, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

193, 228, 218, 213, 198, 180, 212, 210, 211, 213, 223, 220, 243,

202, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 3, 0, 12,

219, 220, 212, 218, 192, 169, 227, 208, 218, 224, 212, 226, 197,

209, 52],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 6, 0, 99,

244, 222, 220, 218, 203, 198, 221, 215, 213, 222, 220, 245, 119,

167, 56],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 4, 0, 0, 55,

236, 228, 230, 228, 240, 232, 213, 218, 223, 234, 217, 217, 209,

92, 0],

[ 0, 0, 1, 4, 6, 7, 2, 0, 0, 0, 0, 0, 237,

226, 217, 223, 222, 219, 222, 221, 216, 223, 229, 215, 218, 255,

77, 0],

[ 0, 3, 0, 0, 0, 0, 0, 0, 0, 62, 145, 204, 228,

207, 213, 221, 218, 208, 211, 218, 224, 223, 219, 215, 224, 244,

159, 0],

[ 0, 0, 0, 0, 18, 44, 82, 107, 189, 228, 220, 222, 217,

226, 200, 205, 211, 230, 224, 234, 176, 188, 250, 248, 233, 238,

215, 0],

[ 0, 57, 187, 208, 224, 221, 224, 208, 204, 214, 208, 209, 200,

159, 245, 193, 206, 223, 255, 255, 221, 234, 221, 211, 220, 232,

246, 0],

[ 3, 202, 228, 224, 221, 211, 211, 214, 205, 205, 205, 220, 240,

80, 150, 255, 229, 221, 188, 154, 191, 210, 204, 209, 222, 228,

225, 0],

[ 98, 233, 198, 210, 222, 229, 229, 234, 249, 220, 194, 215, 217,

241, 65, 73, 106, 117, 168, 219, 221, 215, 217, 223, 223, 224,

229, 29],

[ 75, 204, 212, 204, 193, 205, 211, 225, 216, 185, 197, 206, 198,

213, 240, 195, 227, 245, 239, 223, 218, 212, 209, 222, 220, 221,

230, 67],

[ 48, 203, 183, 194, 213, 197, 185, 190, 194, 192, 202, 214, 219,

221, 220, 236, 225, 216, 199, 206, 186, 181, 177, 172, 181, 205,

206, 115],

[ 0, 122, 219, 193, 179, 171, 183, 196, 204, 210, 213, 207, 211,

210, 200, 196, 194, 191, 195, 191, 198, 192, 176, 156, 167, 177,

210, 92],

[ 0, 0, 74, 189, 212, 191, 175, 172, 175, 181, 185, 188, 189,

188, 193, 198, 204, 209, 210, 210, 211, 188, 188, 194, 192, 216,

170, 0],

[ 2, 0, 0, 0, 66, 200, 222, 237, 239, 242, 246, 243, 244,

221, 220, 193, 191, 179, 182, 182, 181, 176, 166, 168, 99, 58,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 40, 61, 44, 72, 41, 35,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0],

[ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0]], dtype=uint8)

plt.plot(train_images[0]);

Normalizing

train_images = train_images / 255.0

test_images = test_images / 255.0

plt.imshow(train_images[0])

plt.colorbar();

plt.figure(figsize=(10,10))

for i in range(25):

    plt.subplot(5,5,i+1)

    plt.xticks([])

    plt.yticks([])

    plt.grid(False)

    plt.imshow(train_images[i], cmap=plt.cm.binary)

    plt.xlabel(class_names[train_labels[i]])

Define the network model

from keras import models

from keras import layers

model = models.Sequential() # model as a list (sequence) of layers

model.add(layers.Flatten(input_shape=(28, 28)))

model.add(layers.Dense(128, activation=tf.nn.relu))

model.add(layers.Dense(32, activation=tf.nn.relu))

model.add(layers.Dense(10, activation=tf.nn.softmax))

model.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| flatten_6 (Flatten) | (None, 784) | 0 |
| dense_12 (Dense) | (None, 128) | 100480 |
| dense_13 (Dense) | (None, 32) | 4128 |
| dense_14 (Dense) | (None, 10) | 330 |

Total params: 104,938

Trainable params: 104,938

Non-trainable params: 0

"Compile" the network

model.compile(optimizer=tf.train.AdamOptimizer(),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

- Sparse - recall what this means? (Here the labels are integer class indices, such as 9, rather than one-hot vectors.)

- Cross-entropy

- Categorical cross-entropy
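Here is a minimal NumPy sketch of the two cross-entropy variants. It assumes the network's last layer outputs softmax probabilities, as the `Dense(10, activation=tf.nn.softmax)` layer does. Categorical cross-entropy expects one-hot targets. The sparse version expects integer class indices (like `train_labels`) and simply picks out the predicted probability of the true class, so the two give the same value. The function names are hypothetical. The small `eps` clipping loosely imitates how Keras guards against `log(0)`.

```python
import numpy as np

def categorical_crossentropy(y_onehot, probs, eps=1e-7):
    # Mean over samples of -sum_k y_k * log(p_k), with one-hot targets
    probs = np.clip(probs, eps, 1 - eps)
    return -np.mean(np.sum(y_onehot * np.log(probs), axis=1))

def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    # Same loss, but targets are integer class indices: pick out p[true class]
    probs = np.clip(probs, eps, 1 - eps)
    return -np.mean(np.log(probs[np.arange(len(labels)), labels]))

probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.1, 0.8]])   # softmax outputs for 2 samples, 3 classes
labels = np.array([0, 2])             # integer ("sparse") labels
onehot = np.eye(3)[labels]            # the equivalent one-hot labels

sparse_loss = sparse_categorical_crossentropy(labels, probs)
dense_loss = categorical_crossentropy(onehot, probs)
print(sparse_loss, dense_loss)        # the two values agree
```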

Optimize the network

That is, adjust the weights in $f(\cdot)$ so that $f(\mathbf x) \approx y$ on the training set
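`model.fit` hides the optimization loop. As a rough illustration of the basic idea behind Adam and its relatives, here is plain gradient descent on a toy linear model $f(x) = wx + b$. It adjusts two weights to reduce a mean-squared-error loss. The data, learning rate and step count are made up for this example. Adam builds on the same idea, adding momentum and per-weight adaptive step sizes.

```python
import numpy as np

# Toy data generated from y = 2x + 1 (noise-free, purely illustrative)
x = np.linspace(-1, 1, 50)
y = 2 * x + 1

w, b = 0.0, 0.0      # initial weights
lr = 0.1             # learning rate (step size)

for _ in range(500):
    err = (w * x + b) - y             # f(x) - y with the current weights
    loss = np.mean(err ** 2)          # mean squared error
    # Gradients of the loss with respect to w and b
    grad_w = 2 * np.mean(err * x)
    grad_b = 2 * np.mean(err)
    # Move each weight a small step against its gradient
    w -= lr * grad_w
    b -= lr * grad_b

print(round(w, 3), round(b, 3), loss)   # w and b end up close to 2 and 1
```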

model.fit(train_images, train_labels, epochs=5)

Epoch 1/5 60000/60000 [==============================] - 6s 101us/step - loss: 0.5142 - acc: 0.8167

Epoch 2/5 60000/60000 [==============================] - 6s 95us/step - loss: 0.3750 - acc: 0.8636

Epoch 3/5 60000/60000 [==============================] - 6s 105us/step - loss: 0.3380 - acc: 0.8761

Epoch 4/5 60000/60000 [==============================] - 6s 95us/step - loss: 0.3138 - acc: 0.8844

Epoch 5/5 60000/60000 [==============================] - 6s 94us/step - loss: 0.2972 - acc: 0.8896

<keras.callbacks.History at 0x1ff99a83128>

Compute accuracy on the test set

test_loss, test_acc = model.evaluate(test_images, test_labels)

print('Test accuracy:', test_acc)

10000/10000 [==============================] - 1s 53us/step

Test accuracy: 0.8718

Use the network to classify samples: compute $y = f(\mathbf x)$

predictions = model.predict(test_images)

plt.plot(predictions[3]);

print(np.argmax(predictions[0]), test_labels[0])

9 9

print("Prediction:",class_names[np.argmax(predictions[0])], "vs. Truth:",class_names[test_labels[0]])

Prediction: Ankle boot vs. Truth: Ankle boot

Note: .predict() expects an array (tensor) of samples whose first axis indexes the samples, even when predicting a single sample

img = test_images[0]

print(img.shape)

(28, 28)

img_tensor = (np.expand_dims(img,0))

print(img_tensor.shape)

(1, 28, 28)

predictions_single = model.predict(img_tensor)

print(predictions_single)

[[9.0164267e-06 1.8462065e-07 8.3386325e-08 2.8834060e-10 8.6999995e-07 4.3465357e-02 1.4766589e-06 1.5969688e-02 3.3855224e-06 9.4054997e-01]]

predictions_single = model.predict(np.array([img]))

print(predictions_single)

[[9.0164267e-06 1.8462065e-07 8.3386325e-08 2.8834060e-10 8.6999995e-07 4.3465357e-02 1.4766589e-06 1.5969688e-02 3.3855224e-06 9.4054997e-01]]
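Both calls above add a leading batch axis of length 1. Here is a quick NumPy check, with a zero array standing in for `test_images[0]`. It compares those two calls with a third common idiom, `img[np.newaxis]`, which the notes do not use.

```python
import numpy as np

img = np.zeros((28, 28))               # stand-in for a single test image

a = np.expand_dims(img, 0)             # insert a new axis at position 0
b = np.array([img])                    # wrap the image in a one-element list
c = img[np.newaxis]                    # index with np.newaxis (an alias of None)

print(a.shape, b.shape, c.shape)       # (1, 28, 28) (1, 28, 28) (1, 28, 28)
# The prediction for the single sample is then row 0 of the (1, 10) output,
# e.g. np.argmax(predictions_single[0]) gives its class index.
```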

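II. Part 2

Normalizing data

The breast cancer features span very different scales. In the first sample below they range from about 6e-03 up to about 2e+03, which is why normalizing them matters.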

from sklearn.datasets import load_breast_cancer

bcdata = load_breast_cancer()

X=bcdata.data

y=bcdata.target

#print(X.shape,y.shape,y)

print(X[0])

[1.799e+01 1.038e+01 1.228e+02 1.001e+03 1.184e-01 2.776e-01 3.001e-01 1.471e-01 2.419e-01 7.871e-02 1.095e+00 9.053e-01 8.589e+00 1.534e+02 6.399e-03 4.904e-02 5.373e-02 1.587e-02 3.003e-02 6.193e-03 2.538e+01 1.733e+01 1.846e+02 2.019e+03 1.622e-01 6.656e-01 7.119e-01 2.654e-01 4.601e-01 1.189e-01]

np.linalg.norm(keras.utils.normalize([[1,2,3],[2,3,4]],axis=0)[:,0])

0.9999999999999999
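As the check above confirms for column 0, `keras.utils.normalize(x, axis=0)` rescales each column to unit L2 norm (its default `order=2`). Below is a NumPy sketch of the same computation on the array from that check. It also shows a common alternative that these notes do not use: standardizing each feature to zero mean and unit variance.

```python
import numpy as np

X_demo = np.array([[1., 2., 3.],
                   [2., 3., 4.]])

# L2-normalize each column (axis=0): divide by that column's Euclidean norm
norms = np.linalg.norm(X_demo, axis=0, keepdims=True)
norms[norms == 0] = 1.0                 # leave all-zero columns unchanged
X_l2 = X_demo / norms
print(np.linalg.norm(X_l2, axis=0))     # every column now has norm 1

# Standardize each column: subtract its mean, divide by its standard deviation
X_std = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)
print(X_std.mean(axis=0), X_std.std(axis=0))   # approximately 0 and 1 per column
```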

In Keras

Make up an architecture - choose layers and their parameters

Choose a loss function - how to compute the error when $f(\mathbf x_{(i)}) \ne y_i$

Choose an optimization method - many variants of the same basic method

Choose "regularization" tricks to prevent overfitting

Handle other important details, such as initializing weights and normalizing data

Perceptron in Keras

Redo the single class predictor using Keras for the breast cancer data.

Use the sequential model in Keras and make a single neuron. https://keras.io/getting-started/sequential-model-guide/

# load dataset, "preprocess"

from sklearn.datasets import load_breast_cancer

bcdata = load_breast_cancer()

X=bcdata.data

y=bcdata.target

#print(X.shape,y.shape,y)

print(X[0])

print(bcdata.feature_names)

[1.799e+01 1.038e+01 1.228e+02 1.001e+03 1.184e-01 2.776e-01 3.001e-01 1.471e-01 2.419e-01 7.871e-02 1.095e+00 9.053e-01 8.589e+00 1.534e+02 6.399e-03 4.904e-02 5.373e-02 1.587e-02 3.003e-02 6.193e-03 2.538e+01 1.733e+01 1.846e+02 2.019e+03 1.622e-01 6.656e-01 7.119e-01 2.654e-01 4.601e-01 1.189e-01]

['mean radius' 'mean texture' 'mean perimeter' 'mean area' 'mean smoothness' 'mean compactness' 'mean concavity' 'mean concave points' 'mean symmetry' 'mean fractal dimension' 'radius error' 'texture error' 'perimeter error' 'area error' 'smoothness error' 'compactness error' 'concavity error' 'concave points error' 'symmetry error' 'fractal dimension error' 'worst radius' 'worst texture' 'worst perimeter' 'worst area' 'worst smoothness' 'worst compactness' 'worst concavity' 'worst concave points' 'worst symmetry' 'worst fractal dimension']

test_loss, test_acc = perceptron1.evaluate(X, y)

print('Test accuracy:', test_acc)

569/569 [==============================] - 0s 247us/step

Test accuracy: 0.9824253075571178

Comparing with our Perceptron

perceptron1.get_weights()

[array([[-1.1621244 ],

[-3.5179307 ],

[-0.9596353 ],

[-3.1887 ],

[-0.4067479 ],

[ 1.946467 ],

[-4.874168 ],

[-6.93264 ],

[ 0.05423523],

[ 4.2408476 ],

[-7.7104926 ],

[ 0.56591684],

[-5.798534 ],

[-4.647944 ],

[-1.4257054 ],

[ 4.697496 ],

[ 1.0625879 ],

[ 0.05174711],

[ 1.7445477 ],

[ 2.517967 ],

[-6.0287294 ],

[-5.990441 ],

[-4.8194003 ],

[-5.4408607 ],

[-4.224742 ],

[-0.13395718],

[-3.756704 ],

[-5.428169 ],

[-5.116797 ],

[-0.9797471 ]], dtype=float32), array([16.857447], dtype=float32)]

Use the weights and bias from Keras in our Python program

w = perceptron1.get_weights()[0]

w.shape

(30, 1)

b = perceptron1.get_weights()[1][0]

b

16.857447

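def f(x,w,b):

    # Weighted sum of the inputs plus the bias, passed through the step activation

    total = 0  # renamed from "sum" to avoid shadowing Python's built-in sum()

    for i in range(0,len(w)):

        total = total+x[i]*w[i]

        #print(x[i],w[i],total)

    return activation(total+b)

def activation(y_0):

    if y_0 >= 0:

        return 1

    else:

        return 0 # <----- using zero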

Our network:

acc = 0

for j in np.arange(0,len(X)):

    acc = acc + (y[j]==f(X[j].reshape(-1),w,b))/len(X)

print(acc)

0.9824253075571117

Keras network:

test_loss, test_acc = perceptron1.evaluate(X, y)

print('Test accuracy:', test_acc)

569/569 [==============================] - 0s 247us/step

Test accuracy: 0.9824253075571178

...a perfect match: the two accuracies differ only by floating-point round-off (about 6e-15).
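The loop over `j` above can be replaced by array operations. Below is a minimal NumPy sketch using random stand-ins for `X`, `w` and `b`. The shapes match the real breast cancer data and Keras weights, except that 100 samples are used instead of 569. The sketch also suggests why the two accuracies agree, under an assumption the notes do not show: that `perceptron1` is a single Dense unit with a sigmoid activation. In that case Keras's binary accuracy thresholds $\sigma(z)$ at 0.5 by rounding. Since $\sigma(z) > 0.5$ exactly when $z > 0$, the step activation used above makes the same decisions, except possibly at exactly $z = 0$.

```python
import numpy as np

rng = np.random.default_rng(1)
X = rng.normal(size=(100, 30))     # hypothetical stand-in for the 569 x 30 feature matrix
w = rng.normal(size=(30, 1))       # same shape as perceptron1.get_weights()[0]
b = 0.5                            # hypothetical bias

z = (X @ w + b).ravel()            # weighted sum plus bias for every sample at once

# Made-up labels: what a perfect classifier would say, with the first 10 flipped
y = (z >= 0).astype(int)
y[:10] = 1 - y[:10]

step_pred = (z >= 0).astype(int)                           # same rule as activation()
sigmoid_pred = (1 / (1 + np.exp(-z)) >= 0.5).astype(int)   # sigmoid thresholded at 0.5

acc = np.mean(step_pred == y)      # vectorized version of the accuracy loop
print(acc)                                      # 0.9
print(np.array_equal(step_pred, sigmoid_pred))  # True
```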

Multi-category classification

Iris Dataset

https://scikit-learn.org/stable/modules/generated/sklearn.datasets.load_iris.html

# load dataset

from sklearn import datasets

iris = datasets.load_iris()

X=iris.data

from keras.utils import to_categorical

y = to_categorical(iris.target)

print(X.shape,y.shape)

X = 2*X*(1/np.max(np.abs(X),0))-1 # normalize

print(np.max(X,0),np.min(X,0))

(150, 4) (150, 3)

[1. 1. 1. 1.] [ 0.08860759 -0.09090909 -0.71014493 -0.92 ]
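The `# normalize` line divides each feature by its maximum absolute value and then maps $[0, 1]$ onto $[-1, 1]$. Every iris measurement is positive but none is zero, so the minima printed above stay well above $-1$. If the goal is for every feature to span exactly $[-1, 1]$, min-max scaling achieves that. The small array below is built so that its two columns have the same extremes as the first two iris features (4.3 to 7.9 and 2.0 to 4.4). Its first two max-abs minima therefore match the 0.0886 and -0.0909 printed above.

```python
import numpy as np

X = np.array([[5.1, 3.5],
              [7.9, 2.0],
              [4.3, 4.4]])          # small made-up array with positive entries

# Scaling used in the notes: divide by max|x| per column, then map [0, 1] to [-1, 1]
X_maxabs = 2 * X / np.max(np.abs(X), axis=0) - 1

# Min-max scaling to [-1, 1]: each column's minimum maps to -1, its maximum to +1
lo, hi = X.min(axis=0), X.max(axis=0)
X_minmax = 2 * (X - lo) / (hi - lo) - 1

print(X_maxabs.min(axis=0), X_maxabs.max(axis=0))   # minima stay above -1
print(X_minmax.min(axis=0), X_minmax.max(axis=0))   # [-1. -1.] [1. 1.]
```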

y

array([[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[1., 0., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 1., 0.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.],

[0., 0., 1.]], dtype=float32)
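`to_categorical` converts integer class labels into one-hot rows like those printed above. Below is an equivalent NumPy sketch, not Keras's own implementation, assuming the labels are integers 0..K-1. It relies on the fact that row k of the K x K identity matrix is exactly the one-hot vector for class k.

```python
import numpy as np

labels = np.array([0, 0, 1, 2, 1])      # integer class labels, like iris.target
num_classes = labels.max() + 1          # assumes classes are numbered 0..K-1

# Row k of the identity matrix is the one-hot vector for class k
onehot = np.eye(num_classes, dtype=np.float32)[labels]
print(onehot)

# argmax along each row recovers the original integer labels
print(np.argmax(onehot, axis=1))        # [0 0 1 2 1]
```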

perceptron2.fit(X, y, epochs = 100, batch_size = 12, shuffle = True)

Epoch 1/100 150/150 [==============================] - 0s 2ms/step - loss: 0.6829 - acc: 0.7733

Epoch 2/100 150/150 [==============================] - 0s 208us/step - loss: 0.4283 - acc: 0.8600

Epoch 3/100 150/150 [==============================] - 0s 312us/step - loss: 0.3559 - acc: 0.9267

Epoch 4/100 150/150 [==============================] - 0s 208us/step - loss: 0.2962 - acc: 0.9533

Epoch 5/100 150/150 [==============================] - 0s 208us/step - loss: 0.2617 - acc: 0.9400

Epoch 6/100 150/150 [==============================] - 0s 208us/step - loss: 0.2367 - acc: 0.9467

Epoch 7/100 150/150 [==============================] - 0s 208us/step - loss: 0.2199 - acc: 0.9533

Epoch 8/100 150/150 [==============================] - 0s 329us/step - loss: 0.2097 - acc: 0.9467

Epoch 9/100 150/150 [==============================] - 0s 208us/step - loss: 0.1933 - acc: 0.9533

Epoch 10/100 150/150 [==============================] - 0s 312us/step - loss: 0.1884 - acc: 0.9533

Epoch 11/100 150/150 [==============================] - 0s 208us/step - loss: 0.1820 - acc: 0.9667

Epoch 12/100 150/150 [==============================] - 0s 208us/step - loss: 0.1743 - acc: 0.9600

Epoch 13/100 150/150 [==============================] - 0s 208us/step - loss: 0.1620 - acc: 0.9600

Epoch 14/100 150/150 [==============================] - 0s 208us/step - loss: 0.1598 - acc: 0.9667

Epoch 15/100 150/150 [==============================] - 0s 208us/step - loss: 0.1583 - acc: 0.9600

Epoch 16/100 150/150 [==============================] - 0s 104us/step - loss: 0.1512 - acc: 0.9600

Epoch 17/100 150/150 [==============================] - 0s 208us/step - loss: 0.1487 - acc: 0.9600

Epoch 18/100 150/150 [==============================] - 0s 207us/step - loss: 0.1410 - acc: 0.9667

Epoch 19/100 150/150 [==============================] - 0s 208us/step - loss: 0.1394 - acc: 0.9533

Epoch 20/100 150/150 [==============================] - 0s 312us/step - loss: 0.1358 - acc: 0.9600

Epoch 21/100 150/150 [==============================] - 0s 208us/step - loss: 0.1315 - acc: 0.9600

Epoch 22/100 150/150 [==============================] - 0s 208us/step - loss: 0.1294 - acc: 0.9600

Epoch 23/100 150/150 [==============================] - 0s 208us/step - loss: 0.1293 - acc: 0.9533

Epoch 24/100 150/150 [==============================] - 0s 208us/step - loss: 0.1241 - acc: 0.9600

Epoch 25/100 150/150 [==============================] - 0s 208us/step - loss: 0.1237 - acc: 0.9533

Epoch 26/100 150/150 [==============================] - 0s 208us/step - loss: 0.1198 - acc: 0.9600

Epoch 27/100 150/150 [==============================] - 0s 208us/step - loss: 0.1211 - acc: 0.9600

Epoch 28/100 150/150 [==============================] - 0s 208us/step - loss: 0.1166 - acc: 0.9667

Epoch 29/100 150/150 [==============================] - 0s 208us/step - loss: 0.1142 - acc: 0.9600

Epoch 30/100 150/150 [==============================] - 0s 313us/step - loss: 0.1128 - acc: 0.9600

Epoch 31/100 150/150 [==============================] - 0s 208us/step - loss: 0.1117 - acc: 0.9533

Epoch 32/100 150/150 [==============================] - 0s 312us/step - loss: 0.1095 - acc: 0.9733

Epoch 33/100 150/150 [==============================] - 0s 104us/step - loss: 0.1111 - acc: 0.9600

Epoch 34/100 150/150 [==============================] - 0s 208us/step - loss: 0.1071 - acc: 0.9667

Epoch 35/100 150/150 [==============================] - 0s 313us/step - loss: 0.1058 - acc: 0.9667

Epoch 36/100 150/150 [==============================] - 0s 208us/step - loss: 0.1053 - acc: 0.9600

Epoch 37/100 150/150 [==============================] - 0s 208us/step - loss: 0.1043 - acc: 0.9667

Epoch 38/100 150/150 [==============================] - 0s 208us/step - loss: 0.1069 - acc: 0.9600

Epoch 39/100 150/150 [==============================] - 0s 208us/step - loss: 0.1031 - acc: 0.9600

Epoch 40/100 150/150 [==============================] - 0s 208us/step - loss: 0.0998 - acc: 0.9600

Epoch 41/100 150/150 [==============================] - 0s 208us/step - loss: 0.0994 - acc: 0.9600

Epoch 42/100 150/150 [==============================] - 0s 208us/step - loss: 0.0979 - acc: 0.9600

Epoch 43/100 150/150 [==============================] - 0s 208us/step - loss: 0.0964 - acc: 0.9600

Epoch 44/100 150/150 [==============================] - 0s 208us/step - loss: 0.0999 - acc: 0.9600

Epoch 45/100 150/150 [==============================] - 0s 208us/step - loss: 0.0966 - acc: 0.9600

Epoch 46/100 150/150 [==============================] - 0s 208us/step - loss: 0.0958 - acc: 0.9667

Epoch 47/100 150/150 [==============================] - 0s 208us/step - loss: 0.0924 - acc: 0.9733

Epoch 48/100 150/150 [==============================] - 0s 208us/step - loss: 0.0931 - acc: 0.9667

Epoch 49/100 150/150 [==============================] - 0s 313us/step - loss: 0.0924 - acc: 0.9600

Epoch 50/100 150/150 [==============================] - 0s 312us/step - loss: 0.0932 - acc: 0.9600

Epoch 51/100 150/150 [==============================] - 0s 312us/step - loss: 0.0908 - acc: 0.9600

Epoch 52/100 150/150 [==============================] - 0s 208us/step - loss: 0.0894 - acc: 0.9733

Epoch 53/100 150/150 [==============================] - 0s 208us/step - loss: 0.0900 - acc: 0.9600

Epoch 54/100 150/150 [==============================] - 0s 312us/step - loss: 0.0890 - acc: 0.9600

Epoch 55/100 150/150 [==============================] - 0s 208us/step - loss: 0.0889 - acc: 0.9733

Epoch 56/100 150/150 [==============================] - 0s 104us/step - loss: 0.0872 - acc: 0.9733

Epoch 57/100 150/150 [==============================] - 0s 312us/step - loss: 0.0869 - acc: 0.9600

Epoch 58/100 150/150 [==============================] - 0s 208us/step - loss: 0.0862 - acc: 0.9600

Epoch 59/100 150/150 [==============================] - 0s 208us/step - loss: 0.0847 - acc: 0.9733

Epoch 60/100 150/150 [==============================] - 0s 208us/step - loss: 0.0875 - acc: 0.9733

Epoch 61/100 150/150 [==============================] - 0s 208us/step - loss: 0.0850 - acc: 0.9733

Epoch 62/100 150/150 [==============================] - 0s 208us/step - loss: 0.0851 - acc: 0.9600

Epoch 63/100 150/150 [==============================] - 0s 208us/step - loss: 0.0845 - acc: 0.9600

Epoch 64/100 150/150 [==============================] - 0s 208us/step - loss: 0.0834 - acc: 0.9667

Epoch 65/100 150/150 [==============================] - 0s 312us/step - loss: 0.0838 - acc: 0.9600

Epoch 66/100 150/150 [==============================] - 0s 212us/step - loss: 0.0821 - acc: 0.9667

Epoch 67/100 150/150 [==============================] - 0s 208us/step - loss: 0.0833 - acc: 0.9667

Epoch 68/100 150/150 [==============================] - 0s 208us/step - loss: 0.0819 - acc: 0.9667

Epoch 69/100 150/150 [==============================] - 0s 312us/step - loss: 0.0813 - acc: 0.9600

Epoch 70/100 150/150 [==============================] - 0s 208us/step - loss: 0.0803 - acc: 0.9733

Epoch 71/100 150/150 [==============================] - 0s 208us/step - loss: 0.0804 - acc: 0.9667

Epoch 72/100 150/150 [==============================] - 0s 208us/step - loss: 0.0805 - acc: 0.9600

Epoch 73/100 150/150 [==============================] - 0s 208us/step - loss: 0.0791 - acc: 0.9600

Epoch 74/100 150/150 [==============================] - 0s 208us/step - loss: 0.0770 - acc: 0.9733

Epoch 75/100 150/150 [==============================] - 0s 208us/step - loss: 0.0790 - acc: 0.9667

Epoch 76/100 150/150 [==============================] - 0s 208us/step - loss: 0.0777 - acc: 0.9733

Epoch 77/100 150/150 [==============================] - 0s 208us/step - loss: 0.0802 - acc: 0.9600

Epoch 78/100 150/150 [==============================] - 0s 208us/step - loss: 0.0788 - acc: 0.9600

Epoch 79/100 150/150 [==============================] - 0s 208us/step - loss: 0.0774 - acc: 0.9733

Epoch 80/100 150/150 [==============================] - 0s 208us/step - loss: 0.0789 - acc: 0.9733

Epoch 81/100 150/150 [==============================] - 0s 208us/step - loss: 0.0777 - acc: 0.9667

Epoch 82/100 150/150 [==============================] - 0s 208us/step - loss: 0.0768 - acc: 0.9733

Epoch 83/100 150/150 [==============================] - 0s 208us/step - loss: 0.0754 - acc: 0.9733

Epoch 84/100 150/150 [==============================] - 0s 104us/step - loss: 0.0792 - acc: 0.9600

Epoch 85/100 150/150 [==============================] - 0s 104us/step - loss: 0.0779 - acc: 0.9667

Epoch 86/100 150/150 [==============================] - 0s 208us/step - loss: 0.0757 - acc: 0.9733

Epoch 87/100 150/150 [==============================] - 0s 104us/step - loss: 0.0753 - acc: 0.9667

Epoch 88/100 150/150 [==============================] - 0s 208us/step - loss: 0.0738 - acc: 0.9733

Epoch 89/100 150/150 [==============================] - 0s 104us/step - loss: 0.0759 - acc: 0.9600

Epoch 90/100 150/150 [==============================] - 0s 104us/step - loss: 0.0728 - acc: 0.9733

Epoch 91/100 150/150 [==============================] - 0s 208us/step - loss: 0.0736 - acc: 0.9733

Epoch 92/100 150/150 [==============================] - 0s 104us/step - loss: 0.0744 - acc: 0.9667

Epoch 93/100 150/150 [==============================] - 0s 208us/step - loss: 0.0738 - acc: 0.9800

Epoch 94/100 150/150 [==============================] - 0s 208us/step - loss: 0.0739 - acc: 0.9733

Epoch 95/100 150/150 [==============================] - 0s 104us/step - loss: 0.0734 - acc: 0.9733

Epoch 96/100 150/150 [==============================] - 0s 208us/step - loss: 0.0733 - acc: 0.9733

Epoch 97/100 150/150 [==============================] - 0s 104us/step - loss: 0.0723 - acc: 0.9733

Epoch 98/100 150/150 [==============================] - 0s 208us/step - loss: 0.0722 - acc: 0.9733

Epoch 99/100 150/150 [==============================] - 0s 210us/step - loss: 0.0703 - acc: 0.9733

Epoch 100/100 150/150 [==============================] - 0s 104us/step - loss: 0.0716 - acc: 0.9733

<keras.callbacks.History at 0x16aaaeebeb8>

test_loss, test_acc = perceptron2.evaluate(X, y)

print('Test accuracy:', test_acc)

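150/150 [==============================] - 0s 725us/step

Test accuracy: 0.9733333309491475

Note that `evaluate(X, y)` here reuses the same 150 samples the model was fit on, so this number is training accuracy rather than accuracy on held-out data.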
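The definition of `perceptron2` is not shown in these notes. This sketch assumes the plausible reading of a single Dense layer of 3 units with a softmax activation, one unit per iris class. If it was compiled with `categorical_crossentropy`, Keras's `'accuracy'` metric is categorical accuracy. That metric takes the argmax of each output row as the predicted class and compares it with the argmax of the one-hot label. Under those assumptions, here is a NumPy sketch of the forward pass and that scoring, with random placeholder weights and data.

```python
import numpy as np

def softmax(z):
    # Subtract each row's max for numerical stability; rows then sum to 1
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(2)
X = rng.normal(size=(6, 4))             # 6 samples, 4 features (like iris)
W = rng.normal(size=(4, 3))             # hypothetical weights: 4 inputs -> 3 classes
b = np.zeros(3)

probs = softmax(X @ W + b)              # one probability vector per sample
y_true = np.eye(3)[[0, 1, 2, 0, 1, 2]]  # made-up one-hot labels

# Categorical accuracy: does the most probable class match the labeled class?
acc = np.mean(np.argmax(probs, axis=1) == np.argmax(y_true, axis=1))
print(probs.sum(axis=1))                # each row sums to 1
print(acc)
```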