This topic:¶

- Machine Learning Bare Essentials:

- Supervised Learning

- Classification

- Overfitting

- Regularization

Reading:

- Chollet: Chapter 2, 4.4

- GBC: Chapter 5, 7

- Google Machine Learning Crash Course: https://developers.google.com/machine-learning/crash-course/ml-intro

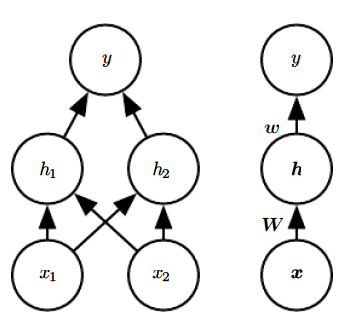

Formal Machine Learning Framework & Jargon¶

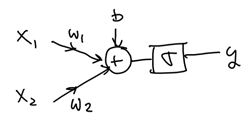

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$.

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x_{(i)})\approx y_i$ (for all $i$)

Define a loss function $L(f(\mathbf x), y)$ to minimize by changing $f(\cdot)$ ...by adjusting the weights.
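The three steps above can be sketched in plain Python. The toy data, the linear model, the squared-error loss, and the learning rate are all assumptions chosen for illustration; they are not taken from the lecture's datasets.

```python
# Toy training data (x_i, y_i), generated from y = 2x + 1 (assumed example).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def f(x, w, b):
    """Model: a line with weight w and bias b."""
    return w * x + b

def loss(pred, y):
    """Squared-error loss L(f(x), y) for one example."""
    return (pred - y) ** 2

def total_loss(w, b):
    """Sum of the per-example losses over the training data."""
    return sum(loss(f(x, w, b), y) for x, y in zip(xs, ys))

w, b = 0.0, 0.0          # initial weights
lr = 0.01                # learning rate (assumed value)
initial = total_loss(w, b)

for _ in range(2000):
    # Gradients of the summed squared error with respect to w and b.
    grad_w = sum(2 * (f(x, w, b) - y) * x for x, y in zip(xs, ys))
    grad_b = sum(2 * (f(x, w, b) - y) for x, y in zip(xs, ys))
    # "Changing f" means adjusting its weights a small step downhill.
    w -= lr * grad_w
    b -= lr * grad_b

final = total_loss(w, b)
print(f"w={w:.3f}, b={b:.3f}, loss {initial:.1f} -> {final:.2e}")
```

After training, `f(x, w, b)` can be applied to inputs that were not in the training data, which is the point of fitting the model.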

In Keras¶

Make up an architecture - choose layers and their parameters

Choose a loss function - how to compute the error when $f(\mathbf x_{(i)}) \ne y_i$

Choose an optimization method - many variants of the same basic method

Choose "regularization" tricks to prevent overfitting

Handle other important details like initializing and normalizing data

...Then what??¶

Exercise¶

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$.

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x_{(i)})\approx y_i$ (for all $i$)

Define a loss function $L(f(\mathbf x), y)$ to minimize by changing $f(\cdot)$ ...by adjusting the weights.

Explain how this applies to the breast cancer data. And Iris data.

What is $(\mathbf x_{(i)},y_i)$?

What is $L(f(\mathbf x), y)$?

How would you use your model to accomplish something useful?

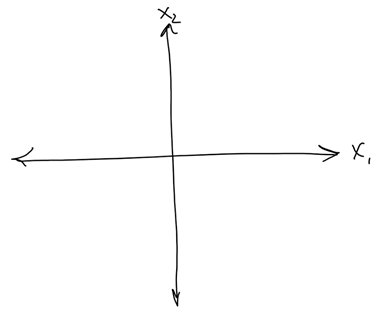

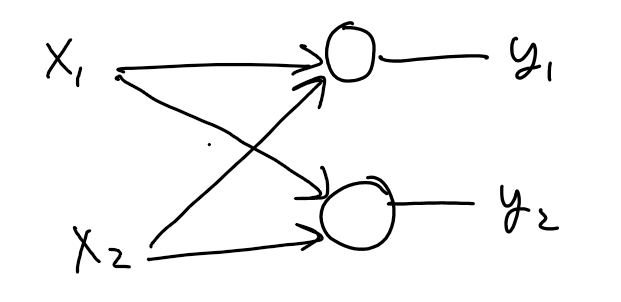

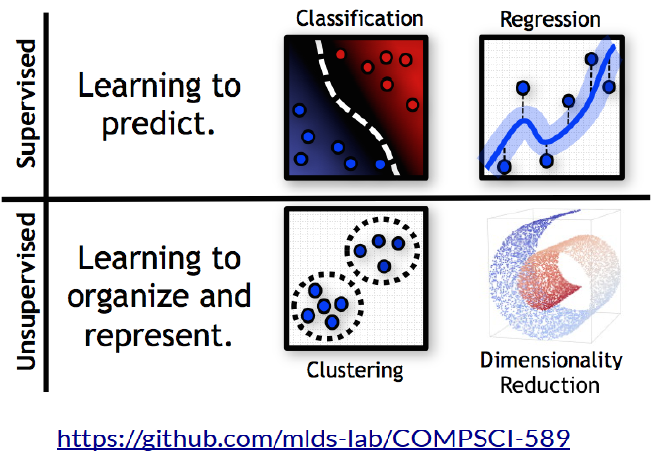

Machine Learning:¶

- Classification - determining what something is, given features

- Regression - predicting a continuous value (e.g., future cost or sea level) from past data

- Clustering - finding subgroups

- Dimensionality Reduction - find the meaningful variations in the data

What are these pictures depicting?

Exercise¶

- Which one does the breast cancer model fit under?

- Draw the picture from the table which applies

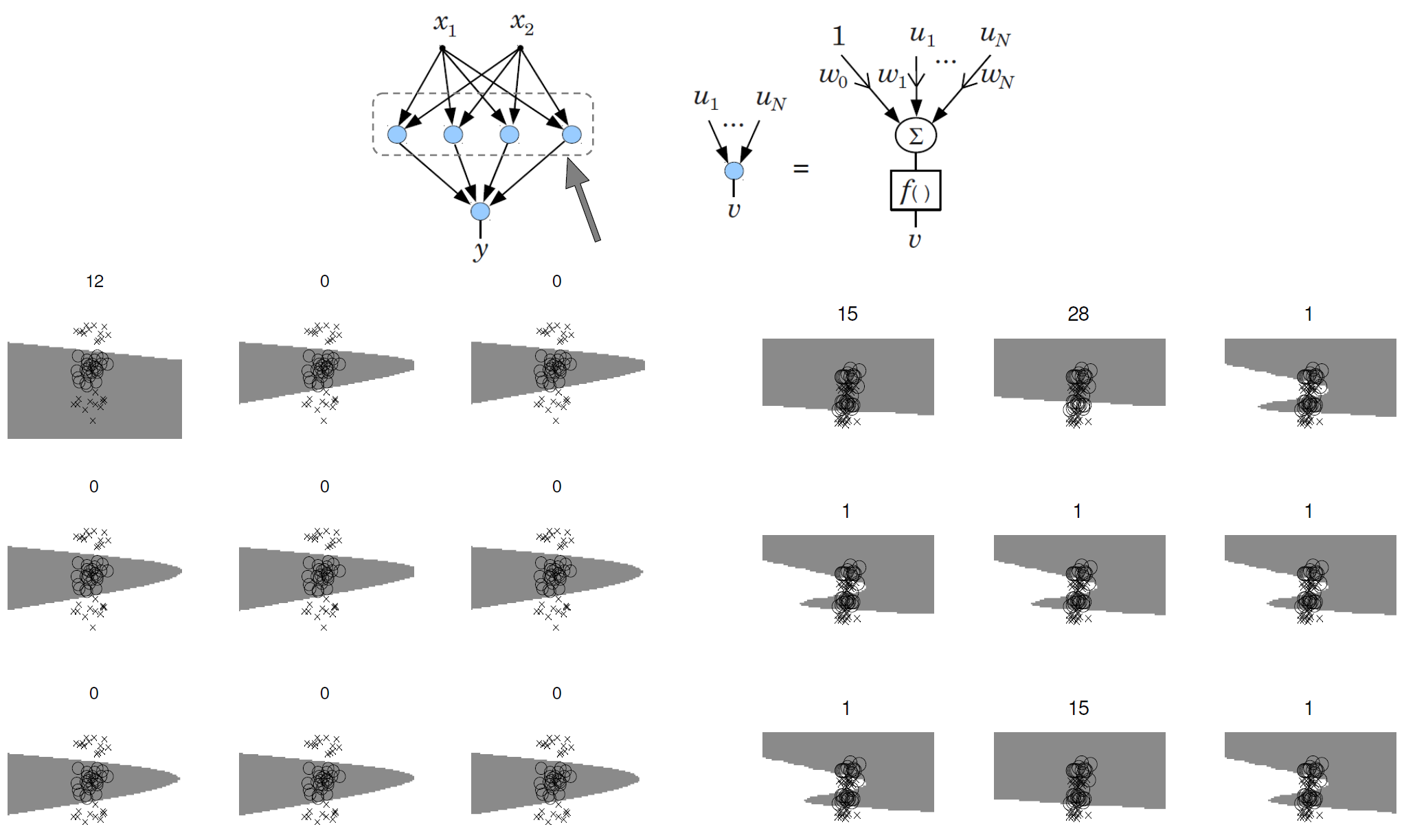

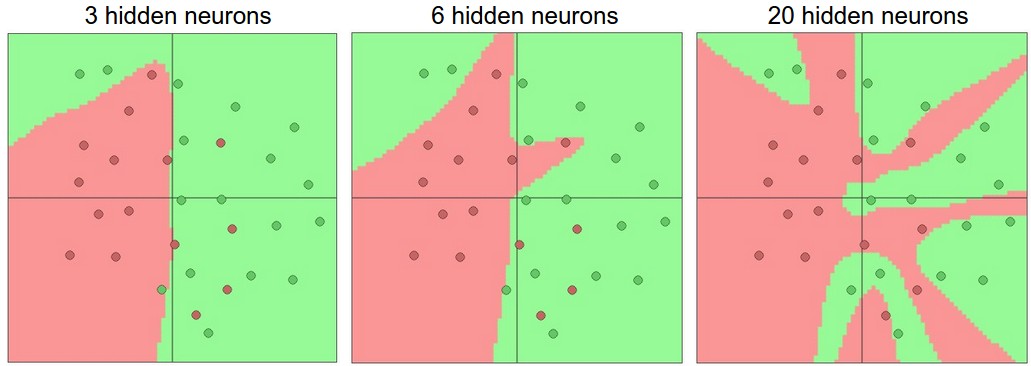

ConvnetJS demo: toy 2d classification¶

https://cs.stanford.edu/people/karpathy/convnetjs/demo/classify2d.html

Danger: decision surfaces that are too nonlinear¶

Making a model to predict sick versus healthy tree given a 2D data measurement (leaf stiffness $\times$ leaf moistness)

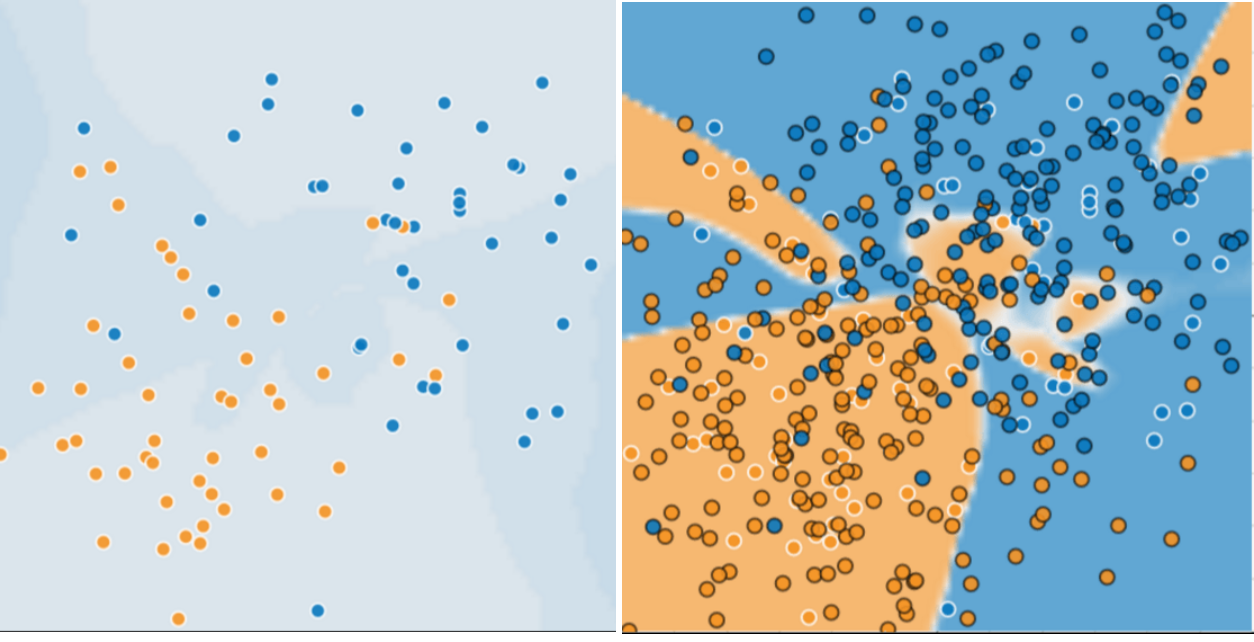

Overfitting¶

Overfitting is a massive issue in deep learning, and correcting it is difficult. There are many ways to combat it (many of the strange options and functions you see in Keras are for this).

Think of overfitting as memorization: a student can perfectly repeat all the information given in class, yet still perform poorly on the exam. Why does this happen?

Training versus Testing¶

Testing for overfitting is easy, much like testing a student for memorization versus deeper understanding: use new examples (i.e., different data) to measure performance.

- Split your dataset into a training set and a test set.

- Use the training set to optimize the parameters

- Use the test set to evaluate how well the network really performs
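A minimal sketch of the split described in the list above, using only the standard library. The function name `train_test_split`, the 25% test fraction, and the toy data are assumptions for illustration (scikit-learn provides a function of the same name, which is not used here).

```python
import random

def train_test_split(samples, test_fraction=0.25, seed=0):
    """Shuffle a copy of the samples, then cut it into (train, test)."""
    shuffled = list(samples)
    # A fixed seed makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical dataset of (feature, label) pairs.
data = [(float(i), i % 2) for i in range(20)]
train, test = train_test_split(data)

print(len(train), "training samples,", len(test), "test samples")
```

Shuffling before cutting matters when the file is sorted by class, as the Iris data commonly is; otherwise the test set could contain only one class.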

Lab¶

Split your data into subsets with one part for training and one part for testing.

Note: normalize after splitting, not before. Either normalize the subsets separately or, more commonly, apply the exact scaling computed on the training set to the test set.
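Reusing the training-set scaling on the test set might look like the following sketch. The helper names `fit_scaler` and `apply_scaler` and the feature values are hypothetical; standardization to zero mean and unit standard deviation is one common choice of normalization, assumed here.

```python
from statistics import mean, pstdev

def fit_scaler(values):
    """Compute the scaling statistics from the training values only."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        sigma = 1.0  # avoid dividing by zero for a constant feature
    return mu, sigma

def apply_scaler(values, mu, sigma):
    """Apply a previously computed scaling to any set of values."""
    return [(v - mu) / sigma for v in values]

train_feature = [2.0, 4.0, 6.0, 8.0]   # hypothetical training values
test_feature = [5.0, 10.0]             # hypothetical test values

mu, sigma = fit_scaler(train_feature)
train_scaled = apply_scaler(train_feature, mu, sigma)
test_scaled = apply_scaler(test_feature, mu, sigma)  # same mu and sigma
```

The test values are not guaranteed to have zero mean or unit variance after scaling, and that is expected: they are measured on the training set's scale.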

Adapt your network to Iris dataset

Regularization¶

Regularization is a very important category of techniques used in optimization methods.

The goal of regularization is to "bias" the solution toward one that looks better in some way.

All regularization methods implicitly impose some kind of prior knowledge.

Example: suppose you think the output of a multi-class classification should be "sparse" in that only a single class can be chosen (note this isn't always true). So you adjust your algorithm in some way to make the output tend to be more sparse. What you have done is regularization.

Formal Machine Learning Framework & Jargon 1/3¶

Given training data $(\mathbf x_{(i)},y_i)$ for $i=1,...,m$.

Choose a model $f(\cdot)$ where we want to make $f(\mathbf x)\approx y$

Define a loss function $L(f(\mathbf x), y)$ to minimize by adjusting weights in $f$ (using gradient descent)

Formal Machine Learning Framework & Jargon 2/3¶

Have data $(\mathbf x_{(i)},y_i)$, model $f(\cdot)$, and loss function $L(f(\mathbf x), y)$.

We want to minimize the true loss, which is the expected value of the loss over the data distribution.

We approximate it by minimizing the empirical loss (a.k.a. "risk"):

$$ L_{emp}(f(\mathbf x), y) = \sum_{k=1}^{m} L\big(f(\mathbf x^{(k)}), y^{(k)}\big) $$
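As a small illustration, the empirical loss is the per-example loss summed over the dataset. The sketch below assumes a binary cross-entropy loss and made-up predicted probabilities; neither is specified by the slide.

```python
import math

def cross_entropy(p, y):
    """Per-example loss L(f(x), y): p is the predicted probability
    of class 1, y is the true label in {0, 1}."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def empirical_loss(predictions, labels, loss_fn):
    """Sum of the per-example losses over the whole dataset."""
    return sum(loss_fn(p, y) for p, y in zip(predictions, labels))

preds = [0.9, 0.2, 0.7]   # hypothetical model outputs f(x^(k))
labels = [1, 0, 1]        # hypothetical true labels y^(k)

total = empirical_loss(preds, labels, cross_entropy)
print(f"empirical loss = {total:.4f}")
```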

Formal Machine Learning Framework & Jargon 3/3¶

Empirical risk minimization:

$$ (\mathbf w, b)^* = \arg \min\limits_{\mathbf w, b} L_{emp}(f(\mathbf x; \mathbf w, b), y) $$

To trade off model risk and "simplicity", we include a regularizer:

$$ (\mathbf w, b)^* = \arg \min\limits_{\mathbf w, b} \big( L_{emp}(f(\mathbf x; \mathbf w, b), y) + \lambda R(f(\mathbf x; \mathbf w, b)) \big) $$

The regularizer function quantifies how "regular" $f$ is.

$\lambda$ is a hyperparameter we have to pick.

Example: L2 for both loss and regularization¶

$$ (\mathbf w, b)^* = \arg \min\limits_{\mathbf w, b} \big( L_{emp}(f(\mathbf x; \mathbf w, b), y) + \lambda R(f(\mathbf x; \mathbf w, b)) \big) $$

$$ (\mathbf w, b)^* = \arg \min\limits_{\mathbf w, b} \left( \sum_k \Vert \mathbf y^{(k)} - f(\mathbf x^{(k)}; \mathbf w, b) \Vert^2 + \lambda \Vert \mathbf w \Vert^2 \right) $$

Note that penalty terms generally apply only to the weights, not the bias.
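To see what the penalty does in practice, the sketch below fits a one-weight linear model by minimizing the summed squared error plus $\lambda w^2$. The linear model, the toy data, the learning rate, and the two values of $\lambda$ are assumptions for illustration. The bias is left unpenalized.

```python
def fit(xs, ys, lam, lr=0.01, steps=5000):
    """Minimize sum_k (w*x_k + b - y_k)^2 + lam * w^2 by gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the loss term plus gradient of the penalty term.
        grad_w = sum(2 * r * x for r, x in zip(residuals, xs)) + 2 * lam * w
        # The bias is not penalized, so it has no penalty gradient.
        grad_b = sum(2 * r for r in residuals)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w_plain, b_plain = fit(xs, ys, lam=0.0)
w_reg, b_reg = fit(xs, ys, lam=10.0)
print(f"lambda=0:  w={w_plain:.3f}, b={b_plain:.3f}")
print(f"lambda=10: w={w_reg:.3f}, b={b_reg:.3f}")
```

With the penalty switched on, the fitted weight is pulled toward zero and the unpenalized bias grows to compensate, so the fit to the training data gets worse in exchange for a smaller weight.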

Exercise¶

$$ (\mathbf w, b)^* = \arg \min\limits_{\mathbf w, b} \left( \sum_k \Vert \mathbf y^{(k)} - f(\mathbf x^{(k)}; \mathbf w, b) \Vert^2 + \lambda \Vert \mathbf w \Vert^2 \right) $$

Compute the effect of the regularizer on each gradient update.

Hint: you don't have to compute the gradient of the loss term. The gradient is a linear operator, so the regularizer's contribution to the update can be computed on its own.

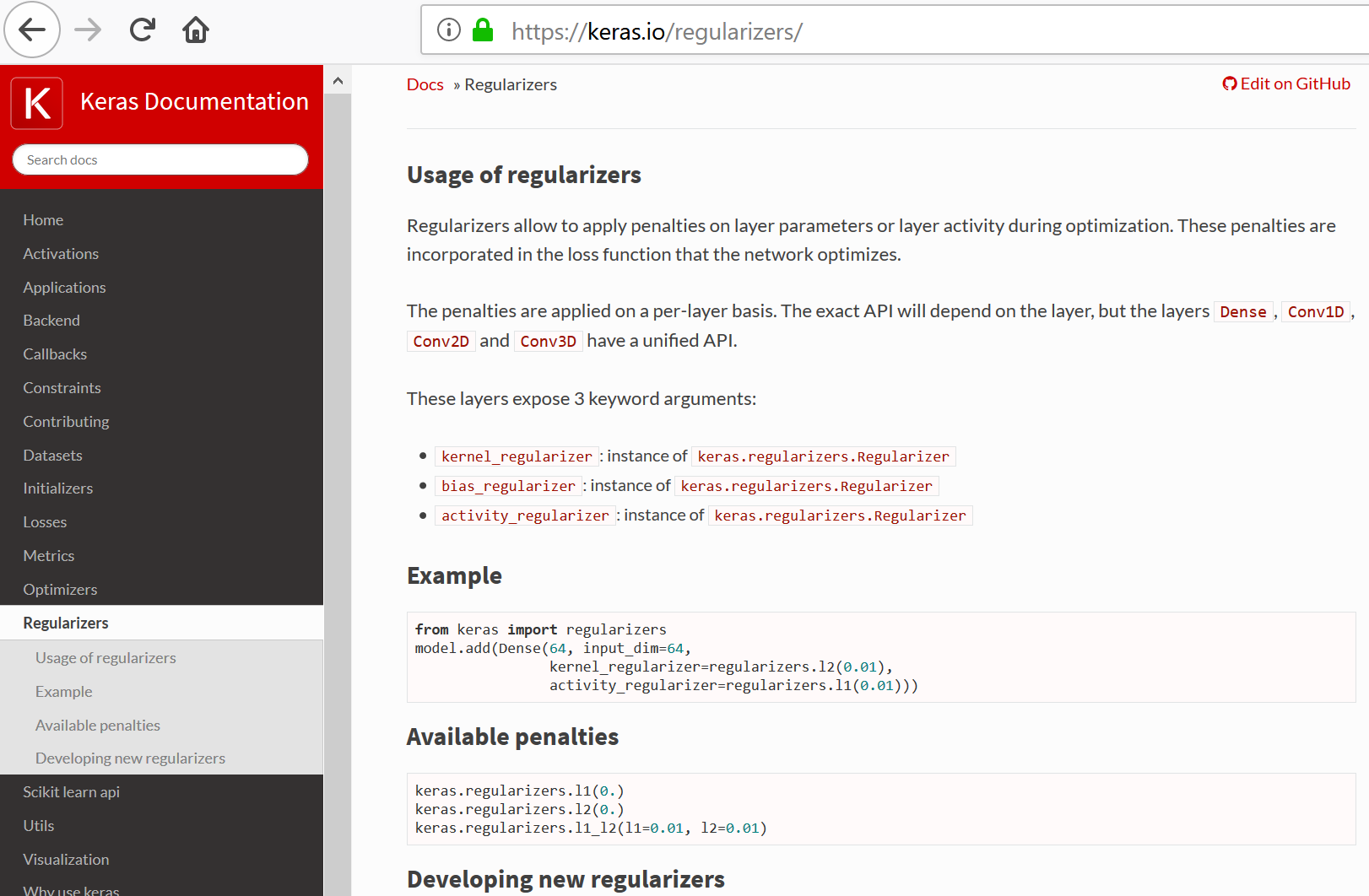

Penalty Term Diversity¶

$\ell_1$ - make weights sparser. May be useful for interpretability.

$\ell_2$ - make weights smaller. ("weight decay"). Gaussian statistics implied.

Elastic net - weighted combination of both $\ell_1$ and $\ell_2$

$\ell_{1,2}$ - mixed norm. Imposes sparsity over groups, but $\ell_2$ within each group.

Total variation - shrinkage applied to the spatial derivative
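For a concrete feel of how these penalties differ, the sketch below evaluates each one on a small weight vector. The exact definitions vary between sources; the elastic-net mixing parameter `alpha`, the grouping, and the 1-D form of total variation are assumptions made here for illustration.

```python
import math

def l1(w):
    """Sum of absolute values: favors exact zeros (sparsity)."""
    return sum(abs(v) for v in w)

def l2_squared(w):
    """Sum of squares: favors small weights overall."""
    return sum(v * v for v in w)

def elastic_net(w, alpha=0.5):
    """Weighted combination of the l1 and squared l2 penalties."""
    return alpha * l1(w) + (1 - alpha) * l2_squared(w)

def group_l12(groups):
    """Sum over groups of each group's l2 norm: whole groups go to zero."""
    return sum(math.sqrt(sum(v * v for v in g)) for g in groups)

def total_variation(w):
    """Sum of absolute differences between neighboring weights (1-D)."""
    return sum(abs(b - a) for a, b in zip(w, w[1:]))

w = [3.0, -4.0, 0.0, 0.0]
print("l1:", l1(w))
print("l2 squared:", l2_squared(w))
print("elastic net:", elastic_net(w))
print("group l1,2:", group_l12([w[:2], w[2:]]))
print("total variation:", total_variation(w))
```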

Regularization methods in Keras¶

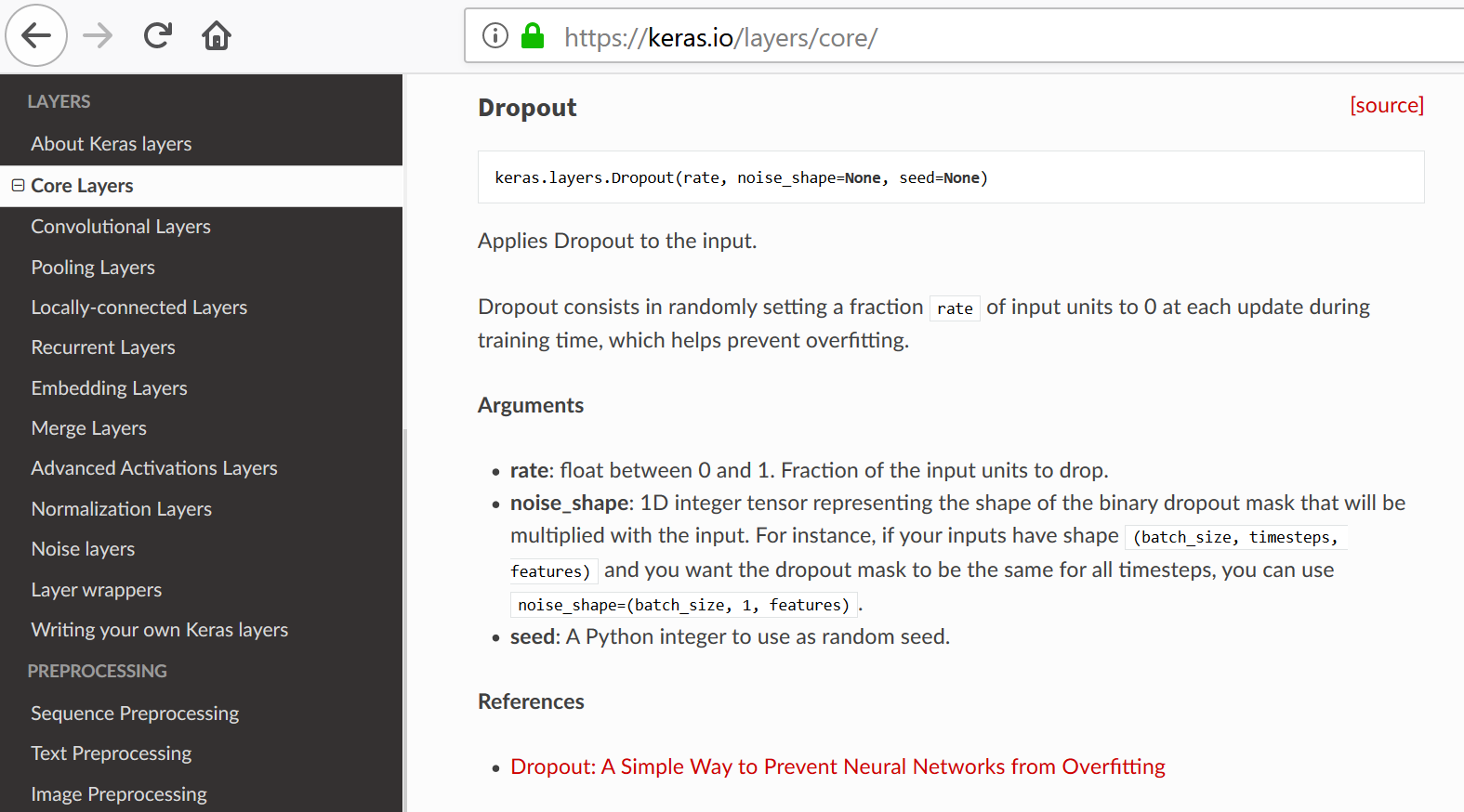

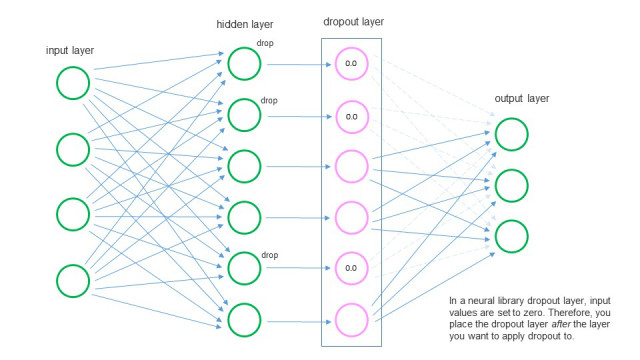

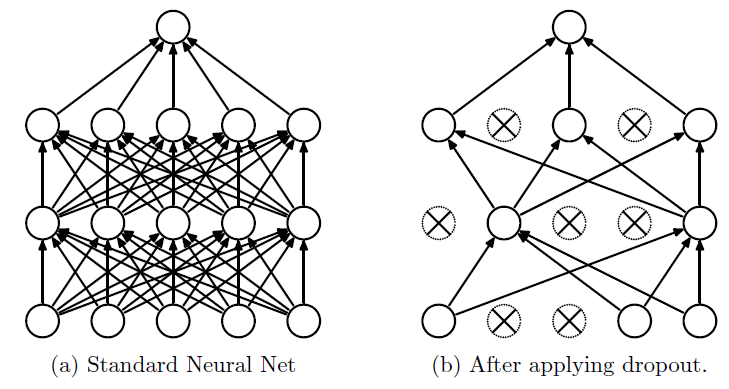

Dropout¶

Dropout is a very different technique that is extremely popular in deep learning. It randomly "switches off" neurons from one batch update to the next, to prevent individual neurons from becoming too important for prediction. The goal is to force the network to use a wide range of information from each sample, rather than picking out just a few key details.
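The "switching off" can be sketched in a few lines of plain Python. This is an illustration of the idea, not the Keras implementation: the function name, the rate of 0.5, and the all-ones activations are assumptions. Surviving activations are scaled up by 1/(1 - rate), a convention often called inverted dropout, so the expected activation is unchanged.

```python
import random

def dropout(activations, rate, rng):
    """Zero each activation with probability `rate` (training time only).
    Survivors are scaled by 1 / (1 - rate) to keep the expected value."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)       # fixed seed for a reproducible mask
acts = [1.0] * 10000         # hypothetical layer activations
dropped = dropout(acts, rate=0.5, rng=rng)

zero_fraction = dropped.count(0.0) / len(dropped)
mean_activation = sum(dropped) / len(dropped)
print(f"zeroed: {zero_fraction:.2%}, mean activation: {mean_activation:.3f}")
```

A fresh random mask is drawn on every call, so a different subset of neurons is switched off each time. At test time dropout is not applied and the activations pass through unchanged.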

Keras Dropout Layer¶