Outline:¶

- Convolution

- Convolutional layers

- Pooling Layers

- Keras CNN

- Using a pre-optimized model

Reading:

- Chollet Chapter 5

- GBC 9.0, 9.1, 9.2, 9.3, 9.10, 9.11

- "A guide to convolution arithmetic for deep learning", https://arxiv.org/pdf/1603.07285.pdf

CNNs¶

- "perhaps the greatest success story of biologically-inspired artificial intelligence" -GBC

- ImageNet 2012 challenge (and computer vision competitions since).

- Best approach for many major computer vision tasks.

- Applied to text, speech signals, and other data types also.

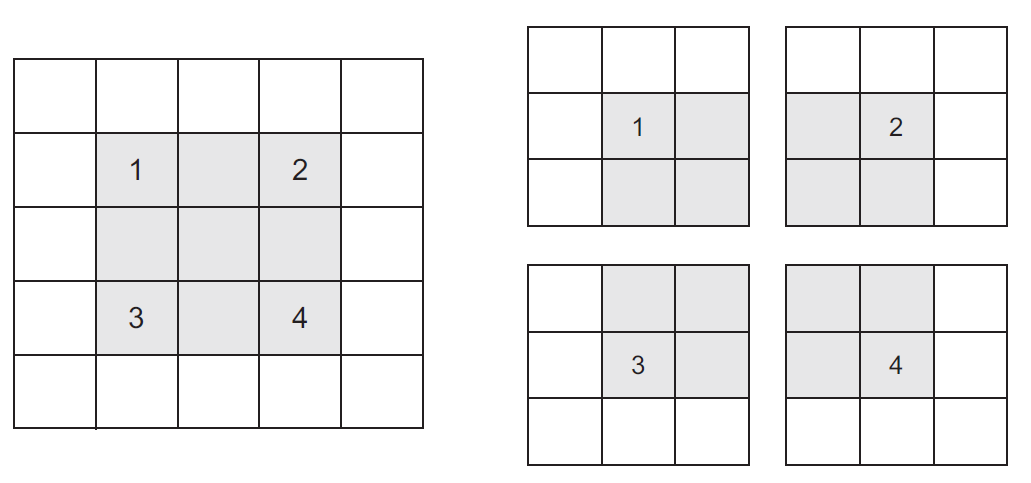

Inspiration - Computer vision¶

Note that the features can be anywhere in the image, hence "unstructured".

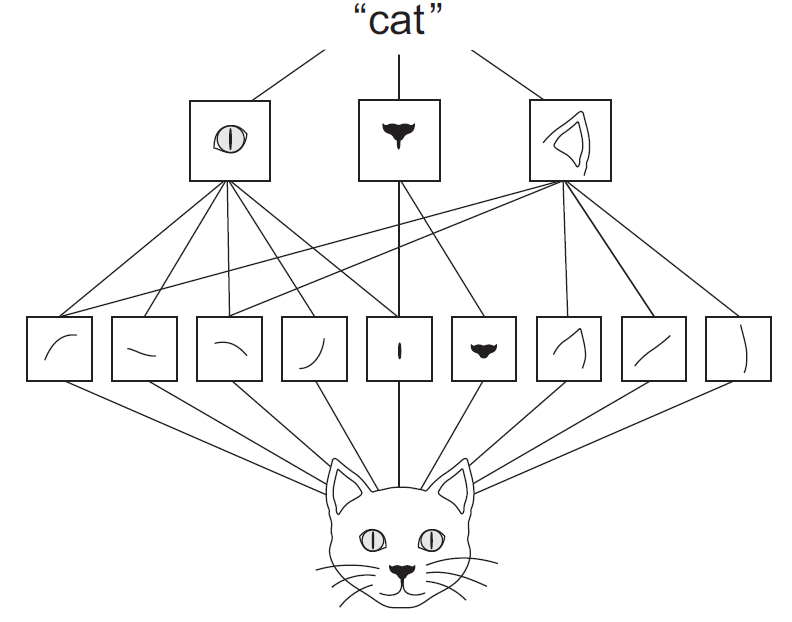

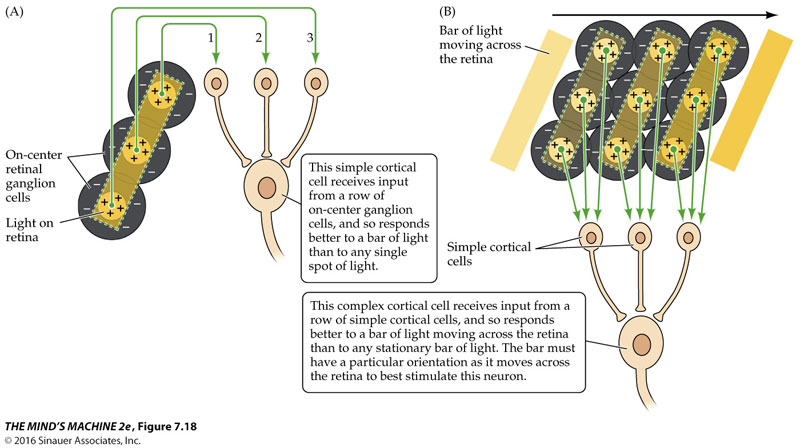

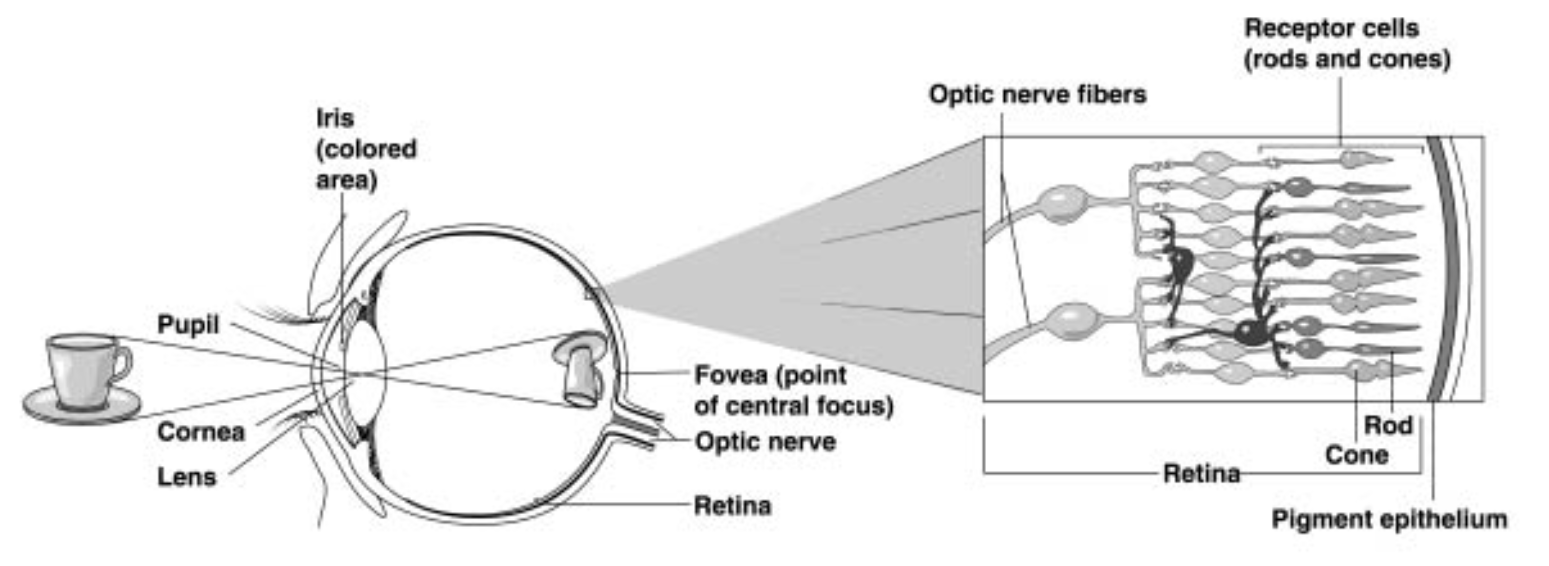

Biological inspiration - the Receptive Field¶

"Center-surround" fields at every point in visual field

Can implement via 2D convolution

Combine in subsequent neurons to make more sophisticated receptive fields.

Convolution¶

A "sliding dot product":

\begin{align} s[i] &= \sum_{n=-\infty}^\infty x[n] w[i-n] = (x * w)(i) \end{align}

Compare to:

\begin{align} s'[i] &= \sum_{n=-\infty}^\infty x[n] w[i+n] = (x \star w)(i) \end{align}
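The two sums above can be checked numerically. The sketch below implements each formula literally for finite signals (anything outside the arrays is treated as zero) and compares against NumPy. The helper names `conv1d` and `xcorr1d` are made up for illustration; the second sum is usually called cross-correlation, and it matches a convolution with the kernel flipped.

```python
import numpy as np

def conv1d(x, w):
    """s[i] = sum_n x[n] * w[i - n], for i = 0 .. len(x) + len(w) - 2."""
    N, K = len(x), len(w)
    s = np.zeros(N + K - 1)
    for i in range(N + K - 1):
        for n in range(N):
            if 0 <= i - n < K:          # w is zero outside its support
                s[i] += x[n] * w[i - n]
    return s

def xcorr1d(x, w):
    """s'[i] = sum_n x[n] * w[i + n], for lags i = -(len(x) - 1) .. len(w) - 1."""
    N, K = len(x), len(w)
    lags = range(-(N - 1), K)
    return np.array(
        [sum(x[n] * w[i + n] for n in range(N) if 0 <= i + n < K) for i in lags],
        dtype=float,
    )

x = np.array([1.0, 2.0, 3.0])
w = np.array([1.0, 2.0])

s_conv = conv1d(x, w)      # matches np.convolve(x, w)
s_corr = xcorr1d(x, w)     # matches convolution with the flipped kernel, read backwards

print(s_conv, np.convolve(x, w))
print(s_corr, np.convolve(x, w[::-1])[::-1])
```

The only difference between the two operations is whether the kernel is flipped before sliding, which is why a symmetric kernel gives the same result for both.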

Exercise: Compute convolution of w = [-1, +1, -1] with:

- x = [1, 2, 3, 4, 6, 7, 7, 6]

- x = [0, 0, 0, -1, +1, -1, 0]

for $i$=0, 1, ..., $N$

How might you deal with the edges?
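One common set of answers to the edge question is the three output modes of `np.convolve`. The sketch below uses a small signal that is not one of the exercise inputs, so the exercise is left open; the values of `x` and `w` are chosen only for illustration.

```python
import numpy as np

x = np.array([0, 1, 2, 3, 4], dtype=float)
w = np.array([1, 1, 1], dtype=float)

# "full": pad with zeros so every partial overlap is kept (length N + K - 1)
full = np.convolve(x, w, mode="full")    # values: 0, 1, 3, 6, 9, 7, 4

# "same": keep the central part so the output has the same length as x
same = np.convolve(x, w, mode="same")    # values: 1, 3, 6, 9, 7

# "valid": keep only positions where the kernel fits entirely (length N - K + 1)
valid = np.convolve(x, w, mode="valid")  # values: 3, 6, 9

for name, out in [("full", full), ("same", same), ("valid", valid)]:
    print(name, len(out), out)
```

"valid" throws the edges away and "same" relies on implicit zero padding, which are the two options that come up again for convolutional layers.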

What do the following convolution kernels do?¶

- w=[1,1,1]

- w=[-1, +1]

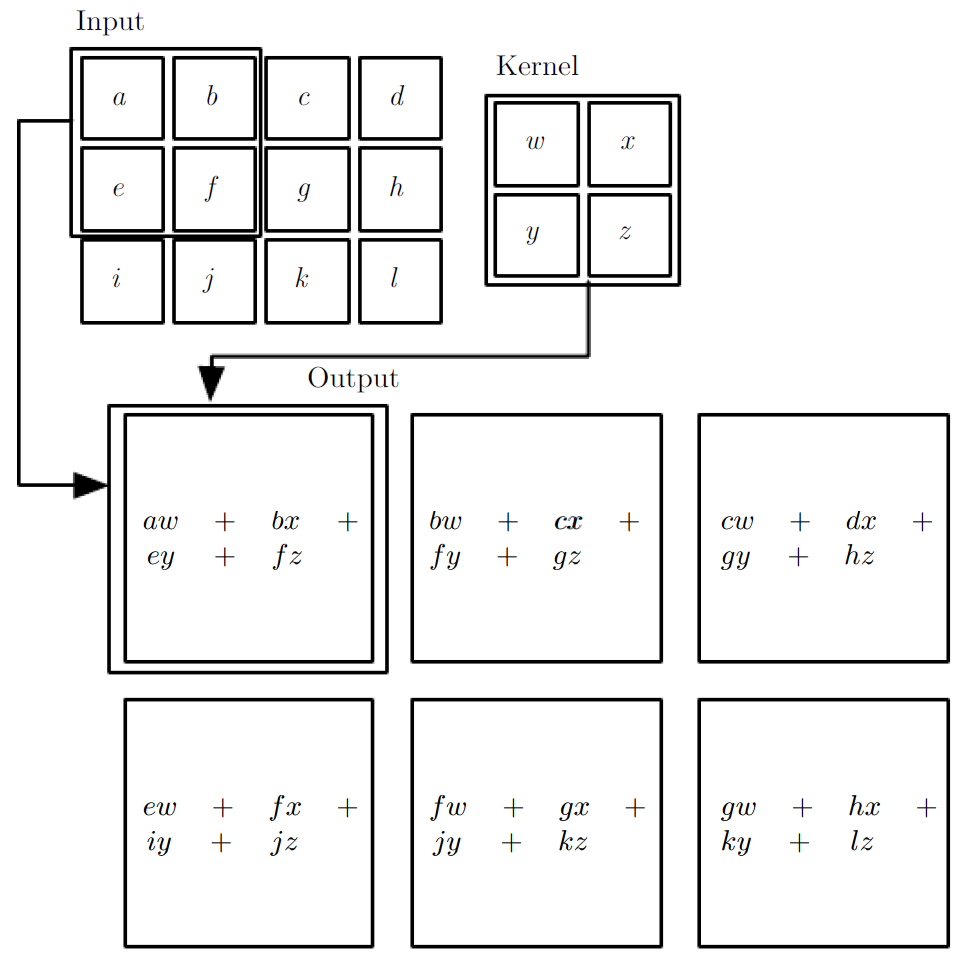

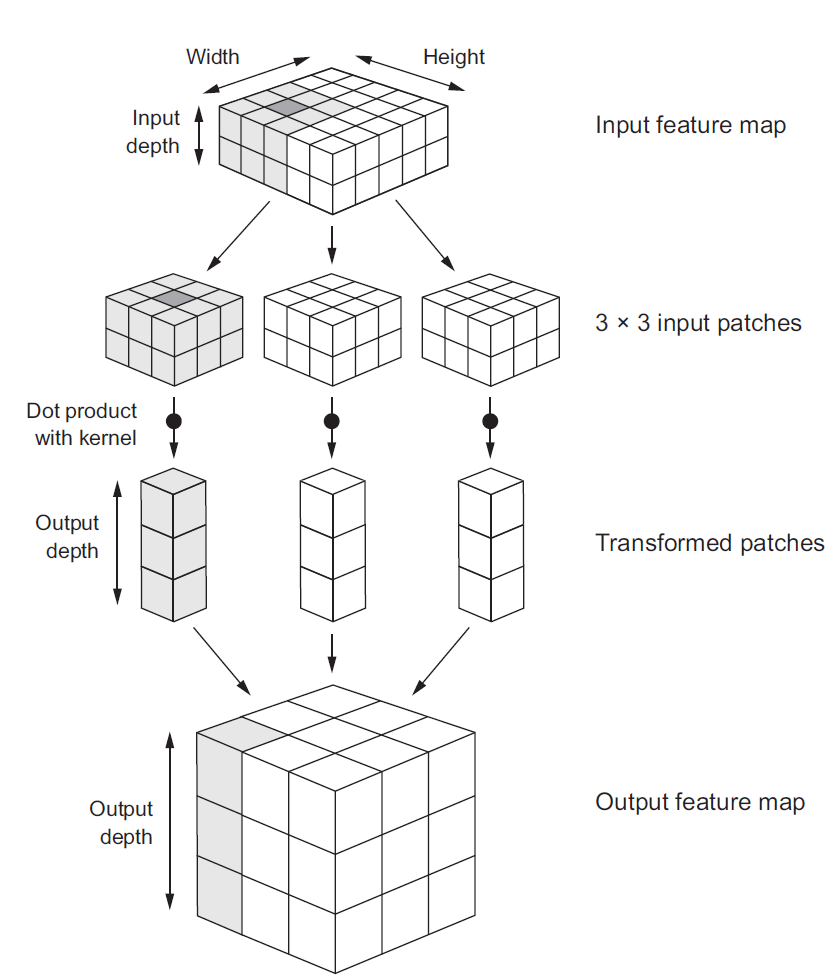
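A quick way to explore the two kernels in the question above is to apply them to a step signal. This is a sketch with an arbitrary test signal, not the only possible reading: `[1, 1, 1]` behaves like a moving sum (a smoother, up to a factor of 3), and `[-1, +1]` behaves like a difference that responds only where the signal changes.

```python
import numpy as np

x = np.array([0, 0, 0, 5, 5, 5], dtype=float)   # a step edge at index 3

# Moving sum over three neighbours: the sharp step becomes a ramp
smooth = np.convolve(x, [1, 1, 1], mode="valid")   # values: 0, 5, 10, 15

# Difference of neighbours: zero on flat regions, nonzero at the edge.
# With true convolution (kernel flipped) this computes x[i-1] - x[i].
diff = np.convolve(x, [-1, +1], mode="valid")      # values: 0, 0, -5, 0, 0

print(smooth)
print(diff)
```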

2D Convolution¶

\begin{align} s[i,j] &= \sum_{m=0}^M\sum_{n=0}^N x[m,n] w[i-m,j-n] = (x * w)(i,j) \\ s'[i,j] &= \sum_{m=0}^M\sum_{n=0}^N x[m,n] w[i+m,j+n] = (x \star w)(i,j) \end{align}

Recall sliding dot product $\rightarrow$ pattern matching
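The "sliding dot product" view can be sketched in a few lines of NumPy. The version below slides the kernel over every position where it fits entirely inside the image (no padding) and takes the elementwise product and sum; this unflipped form is what many deep learning libraries compute under the name "convolution". Flipping the kernel in both directions first gives true convolution. The function names and the test image are illustrative assumptions.

```python
import numpy as np

def xcorr2d_valid(x, w):
    """Slide kernel w over image x; dot product of w with each patch."""
    H, W = x.shape
    kh, kw = w.shape
    out = np.zeros((H - kh + 1, W - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * w)
    return out

def conv2d_valid(x, w):
    """True 2D convolution: same sliding product with the kernel flipped."""
    return xcorr2d_valid(x, w[::-1, ::-1])

# 4x4 image with a vertical edge between columns 1 and 2
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)

# Kernel that "looks like" a dark-to-bright vertical edge
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)

match = xcorr2d_valid(image, kernel)   # largest where the patch resembles the kernel
flipped = conv2d_valid(image, kernel)  # sign reverses because the kernel is mirrored

print(match)
print(flipped)
```

The response peaks in the column where the image patch matches the kernel pattern, which is the pattern-matching interpretation of the sliding dot product.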

Some enlightening GIFs found on the internet: https://towardsdatascience.com/intuitively-understanding-convolutions-for-deep-learning-1f6f42faee1

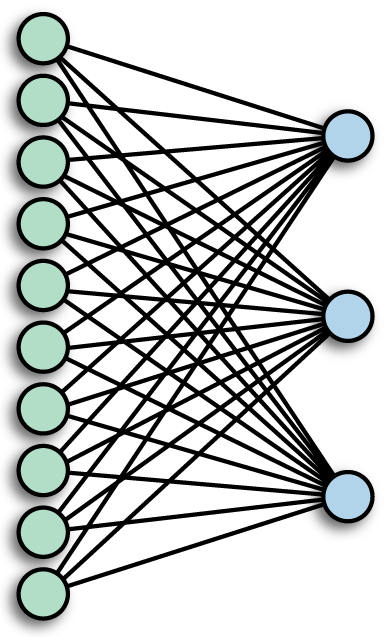

Q: How do we implement Convolution as a network layer?¶

Recall the fully-connected (a.k.a. densely-connected, a.k.a. dense) layer.

Suppose the input is an image and the output is also an image (with convolution applied). Apply the equation:

\begin{align} s[i] &= \sum_{n=0}^N x[n] w[i-n] = (x * w)(i) \end{align}

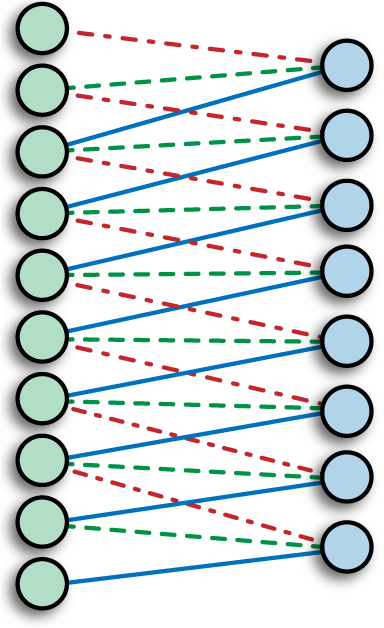

Convolutional Layer¶

Parameter sharing (same color = same weight)

Far fewer weights to deal with versus dense layer.

Also note how the edges are handled: the image gets smaller.
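Parameter sharing can be made concrete by writing a 1D convolution as a dense-style matrix multiply in which every row reuses the same few weights. This is a sketch for illustration only (the helper `conv_matrix` is a made-up name, and real libraries do not build this matrix explicitly).

```python
import numpy as np

def conv_matrix(w, n_in):
    """Banded matrix W such that W @ x == np.convolve(x, w, mode='valid')."""
    k = len(w)
    n_out = n_in - k + 1               # edges dropped, so the output is shorter
    W = np.zeros((n_out, n_in))
    for i in range(n_out):
        W[i, i:i + k] = w[::-1]        # the same k weights appear in every row
    return W

x = np.arange(6, dtype=float)
w = np.array([1.0, 2.0, 3.0])

W = conv_matrix(w, len(x))
y_matrix = W @ x
y_conv = np.convolve(x, w, mode="valid")

dense_weights = W.size     # a dense 6 -> 4 layer would learn 24 separate weights
shared_weights = len(w)    # the convolutional layer learns only 3

print(W)
print(y_matrix, y_conv)
print(dense_weights, shared_weights)
```

Most entries of `W` are zero (local connectivity) and the nonzero ones repeat along the diagonal (shared weights), which is where the savings over a dense layer come from.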

Edge handling¶

Two options:

- Throw the edges away, so each layer's output gets smaller

- Pad the image first, so that the output shrinks back to the original size
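Both options follow from the output-size relation in the convolution arithmetic guide listed in the reading, $o = \lfloor (i + 2p - k)/s \rfloor + 1$ for input size $i$, kernel size $k$, padding $p$ and stride $s$. A minimal sketch (the function name `conv_output_size` is made up for illustration):

```python
def conv_output_size(n_in, kernel, padding=0, stride=1):
    """Output length along one axis: floor((i + 2p - k) / s) + 1."""
    return (n_in + 2 * padding - kernel) // stride + 1

# Option 1: no padding, a 3x3 kernel trims one pixel from each side
no_pad = conv_output_size(28, kernel=3)               # 26

# Option 2: pad by (k - 1) / 2 = 1 pixel, output returns to the input size
padded = conv_output_size(28, kernel=3, padding=1)    # 28

# A 2x2 pooling window with stride 2 uses the same relation
pooled = conv_output_size(26, kernel=2, stride=2)     # 13

print(no_pad, padded, pooled)
```

The sizes 28, 26 and 13 are the same ones that appear in the Keras model summary for the CNN later in these notes.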

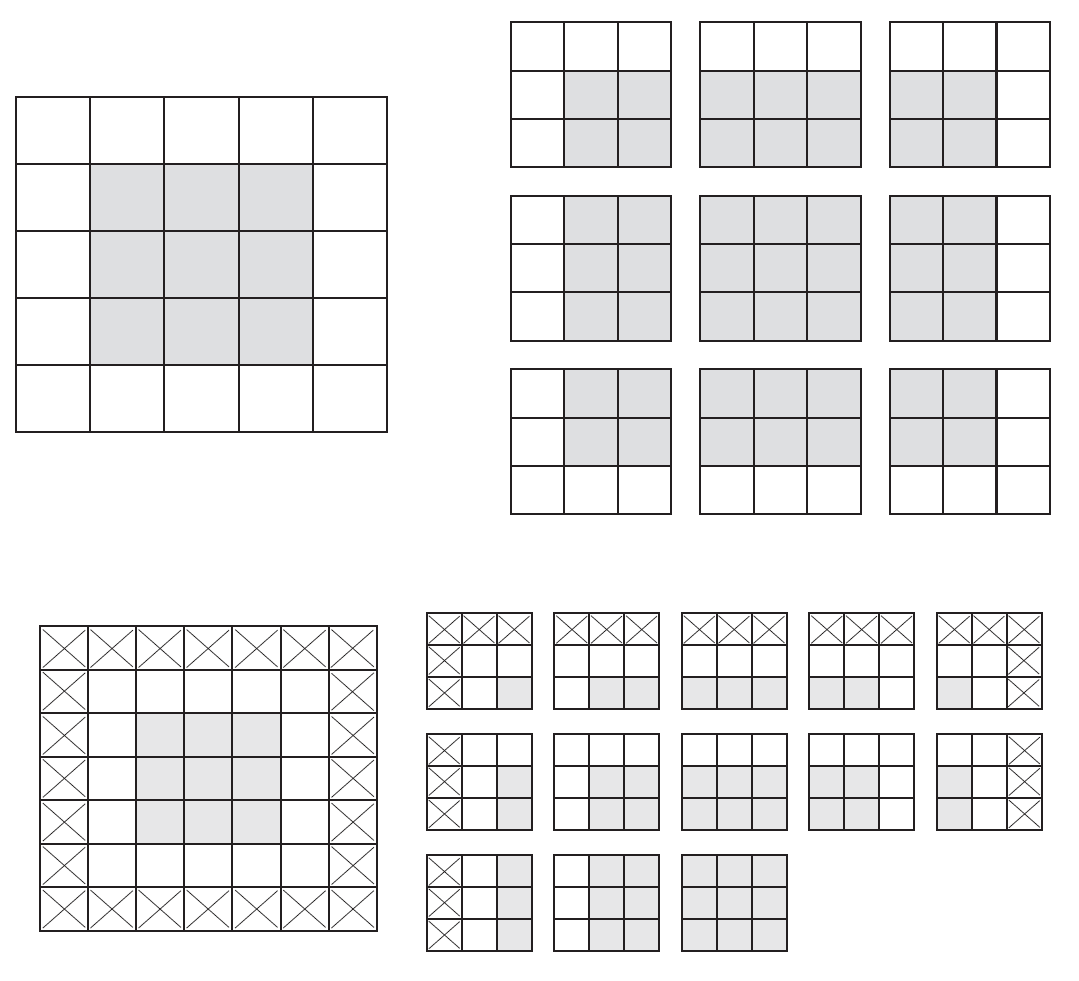

Multiple Channels & Kernels¶

We know how to make a 2D image into a 1D vector (right?). What is a color image however?

We can also have multiple convolution kernels for the same input image
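A sketch of how channels and multiple kernels fit together, assuming the channels-last layout (height, width, channels) that the Keras code in these notes uses. Each kernel spans all input channels and produces one output channel. The function name, the array sizes and the choice of 8 kernels are illustrative assumptions.

```python
import numpy as np

def conv_layer_valid(x, kernels, bias):
    """x: (H, W, C_in); kernels: (kh, kw, C_in, C_out); bias: (C_out,)."""
    H, W, c_in = x.shape
    kh, kw, _, c_out = kernels.shape
    out = np.zeros((H - kh + 1, W - kw + 1, c_out))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            patch = x[i:i + kh, j:j + kw, :]                 # (kh, kw, C_in)
            # contract patch against every kernel at once -> (C_out,)
            out[i, j, :] = np.tensordot(patch, kernels, axes=3) + bias
    return out

rng = np.random.default_rng(0)
x = rng.random((28, 28, 3))         # a color image: 3 channels (R, G, B)
kernels = rng.random((3, 3, 3, 8))  # 8 kernels, each 3x3 over 3 channels
bias = np.zeros(8)

out = conv_layer_valid(x, kernels, bias)
n_params = kernels.size + bias.size  # 3*3*3*8 weights + 8 biases

print(out.shape, n_params)
```

The output is again an "image", with one channel per kernel, so it can be fed to another convolutional layer.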

Biological inspiration II¶

- Retina $\approx$ 100 million photoreceptors

- Optic nerve $\approx$ 1 million axons

100x downsampling.

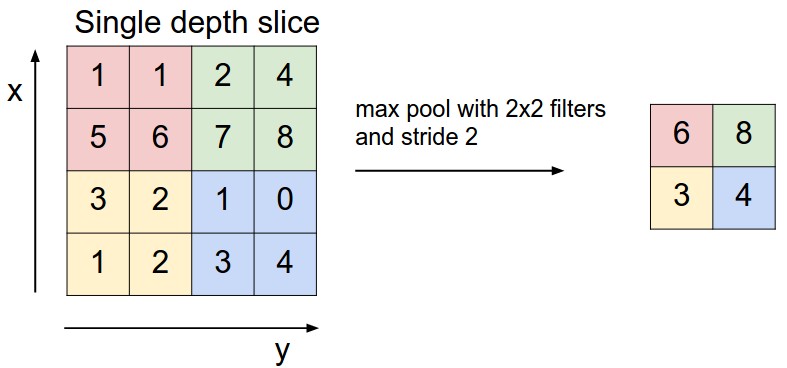

Pooling Layer¶
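A minimal sketch of 2x2 max pooling with stride 2 on a single-channel image, the kind of downsampling that `MaxPooling2D((2, 2))` performs in the Keras model later in these notes. The helper name `max_pool_2x2` and the reshape trick are illustrative choices, not the library's implementation.

```python
import numpy as np

def max_pool_2x2(x):
    """Keep the maximum of each non-overlapping 2x2 block."""
    H, W = x.shape
    H2, W2 = H // 2, W // 2
    x = x[:H2 * 2, :W2 * 2]                      # drop an odd last row/column
    blocks = x.reshape(H2, 2, W2, 2)             # blocks[a, b, c, d] = x[2a+b, 2c+d]
    return blocks.max(axis=(1, 3))

image = np.arange(16).reshape(4, 4)
pooled = max_pool_2x2(image)

print(image)
print(pooled)
```

Each output pixel summarizes four input pixels, so the number of values drops by a factor of 4 per pooling layer.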

Combining everything...¶

Keras time¶

"Deep Learning with Python", Francois Chollet, Ch. 2, https://github.com/fchollet/deep-learning-with-python-notebooks

"Getting Started with TensorFlow and Deep Learning: SciPy 2018 Tutorial", Josh Gordon, https://www.youtube.com/watch?v=tYYVSEHq-io, https://www.tensorflow.org/tutorials/keras/

Classification demos

https://www.tensorflow.org/tutorials/keras/basic_classification

https://github.com/tensorflow/docs/blob/master/site/en/tutorials/keras/basic_classification.ipynb

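First test a Dense network on the MNIST digits dataset¶

# imports assumed by the cells below (standalone Keras with a TensorFlow 1.x backend)

import tensorflow as tf

import keras

import matplotlib.pyplot as plt

mnist = keras.datasets.mnist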

(X_train, y_train), (X_test, y_test) = mnist.load_data()

print(X_train.shape)

print(X_test.shape)

print(y_train)

(60000, 28, 28) (10000, 28, 28) [5 0 4 ... 5 6 8]

showMNIST10x10()  # plotting helper; its definition is not shown in these notes

"Preprocessing"¶

Note that pixel values range from 0 to 255 (8-bit integers).

We want to "normalize" them to between 0 and 1.

plt.imshow(X_train[0])

plt.colorbar();

X_train = X_train / 255.0

X_test = X_test / 255.0

Dense network model¶

from keras import models

from keras import layers

model = models.Sequential()

model.add(layers.Flatten(input_shape=(28, 28)))

model.add(layers.Dense(128, activation=tf.nn.relu))

model.add(layers.Dense(10, activation=tf.nn.softmax))

model.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| flatten_1 (Flatten) | (None, 784) | 0 |
| dense_1 (Dense) | (None, 128) | 100480 |
| dense_2 (Dense) | (None, 10) | 1290 |

Total params: 101,770

Trainable params: 101,770

Non-trainable params: 0
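The parameter counts in the summary can be reproduced by hand: a dense layer has one weight per (input, output) pair plus one bias per output. A small sketch (the helper name `dense_params` is made up for illustration):

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out)."""
    return n_in * n_out + n_out

flatten_out = 28 * 28                      # 784 pixels, no parameters
dense_1 = dense_params(flatten_out, 128)   # 784 * 128 + 128
dense_2 = dense_params(128, 10)            # 128 * 10 + 10
total = dense_1 + dense_2

print(dense_1, dense_2, total)
```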

model.compile(optimizer=tf.train.AdamOptimizer(),

loss='categorical_crossentropy',

metrics=['accuracy'])

from keras.utils import to_categorical

y_train_cat = to_categorical(y_train)

model.fit(X_train, y_train_cat, epochs=5)

| Epoch | Time | loss | acc |
|---|---|---|---|
| 1/5 | 5s 89us/step | 0.2577 | 0.9261 |
| 2/5 | 5s 77us/step | 0.1148 | 0.9657 |
| 3/5 | 5s 79us/step | 0.0792 | 0.9759 |
| 4/5 | 5s 79us/step | 0.0600 | 0.9815 |
| 5/5 | 6s 96us/step | 0.0469 | 0.9856 |


y_test_cat = to_categorical(y_test)

test_loss, test_acc = model.evaluate(X_test, y_test_cat)

print('Test accuracy:', test_acc)

10000/10000 [==============================] - 0s 42us/step

Test accuracy: 0.9782
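The `to_categorical` step above turns integer labels into one-hot rows, which is the target format that the `categorical_crossentropy` loss expects. A NumPy sketch of the same idea for labels 0 to 9 (the helper name `one_hot` is made up, and this is not the Keras implementation):

```python
import numpy as np

def one_hot(labels, num_classes=10):
    """Row k of the identity matrix is the one-hot vector for class k."""
    labels = np.asarray(labels)
    return np.eye(num_classes)[labels]

y = np.array([5, 0, 4])          # the first three training labels printed earlier
y_cat = one_hot(y)

print(y_cat)
print(y_cat.argmax(axis=1))      # argmax undoes the encoding
```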

Using Validation data¶

from sklearn.model_selection import train_test_split

X_test, X_valid, y_test, y_valid = train_test_split(X_test, y_test, test_size=0.33)

from keras.utils import to_categorical

y_test_cat = to_categorical(y_test)

y_valid_cat = to_categorical(y_valid)

model = models.Sequential()

model.add(layers.Flatten(input_shape=(28, 28)))

model.add(layers.Dense(128, activation=tf.nn.relu))

model.add(layers.Dense(10, activation=tf.nn.softmax))

model.compile(optimizer=tf.train.AdamOptimizer(),

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(X_train, y_train_cat, validation_data=(X_valid,y_valid_cat), epochs=20)

Train on 60000 samples, validate on 3300 samples

| Epoch | Time | loss | acc | val_loss | val_acc |
|---|---|---|---|---|---|
| 1/20 | 5s 89us/step | 0.2612 | 0.9247 | 0.1361 | 0.9567 |
| 2/20 | 5s 90us/step | 0.1128 | 0.9661 | 0.1003 | 0.9697 |
| 3/20 | 6s 98us/step | 0.0779 | 0.9765 | 0.0797 | 0.9755 |
| 4/20 | 5s 86us/step | 0.0585 | 0.9825 | 0.0815 | 0.9764 |
| 5/20 | 5s 87us/step | 0.0439 | 0.9869 | 0.0738 | 0.9773 |
| 6/20 | 5s 81us/step | 0.0367 | 0.9886 | 0.0744 | 0.9755 |
| 7/20 | 5s 83us/step | 0.0291 | 0.9906 | 0.0830 | 0.9752 |
| 8/20 | 5s 82us/step | 0.0233 | 0.9927 | 0.0764 | 0.9806 |
| 9/20 | 5s 85us/step | 0.0196 | 0.9939 | 0.0780 | 0.9782 |
| 10/20 | 5s 86us/step | 0.0157 | 0.9956 | 0.0776 | 0.9779 |
| 11/20 | 5s 89us/step | 0.0139 | 0.9959 | 0.0798 | 0.9776 |
| 12/20 | 5s 90us/step | 0.0124 | 0.9959 | 0.0882 | 0.9791 |
| 13/20 | 5s 85us/step | 0.0093 | 0.9974 | 0.0842 | 0.9800 |
| 14/20 | 5s 87us/step | 0.0099 | 0.9969 | 0.0867 | 0.9806 |
| 15/20 | 6s 99us/step | 0.0086 | 0.9975 | 0.0826 | 0.9821 |
| 16/20 | 6s 99us/step | 0.0078 | 0.9973 | 0.0978 | 0.9785 |
| 17/20 | 7s 114us/step | 0.0068 | 0.9979 | 0.0977 | 0.9791 |
| 18/20 | 5s 89us/step | 0.0067 | 0.9981 | 0.1000 | 0.9782 |
| 19/20 | 6s 102us/step | 0.0051 | 0.9985 | 0.1215 | 0.9733 |
| 20/20 | 5s 87us/step | 0.0068 | 0.9980 | 0.1078 | 0.9803 |

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend();

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend();

...what are these plots telling us?¶
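One way to read the curves is to look for the epoch where the validation loss stops improving while the training loss keeps falling, which is the usual sign of overfitting. The sketch below uses the `val_loss` and `loss` values from the 20-epoch dense run logged above; the variable names are illustrative.

```python
import numpy as np

# Values copied from the dense-network training log (epochs 1..20)
train_loss = np.array([0.2612, 0.1128, 0.0779, 0.0585, 0.0439, 0.0367, 0.0291,
                       0.0233, 0.0196, 0.0157, 0.0139, 0.0124, 0.0093, 0.0099,
                       0.0086, 0.0078, 0.0068, 0.0067, 0.0051, 0.0068])
val_loss = np.array([0.1361, 0.1003, 0.0797, 0.0815, 0.0738, 0.0744, 0.0830,
                     0.0764, 0.0780, 0.0776, 0.0798, 0.0882, 0.0842, 0.0867,
                     0.0826, 0.0978, 0.0977, 0.1000, 0.1215, 0.1078])

best_epoch = int(np.argmin(val_loss)) + 1    # epochs are numbered from 1
gap_at_best = val_loss[best_epoch - 1] - train_loss[best_epoch - 1]
gap_at_end = val_loss[-1] - train_loss[-1]

print("lowest validation loss at epoch", best_epoch)
print("val - train loss gap at that epoch:", round(gap_at_best, 4))
print("val - train loss gap at epoch 20:", round(gap_at_end, 4))
```

After the best epoch the training loss continues toward zero while the validation loss drifts upward, so the extra epochs mostly fit the training set rather than improve generalization.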

Now use Convolutional and max-pooling layers¶

# reload and normalize the data for clarity

mnist = keras.datasets.mnist

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train = X_train / 255.0

X_test = X_test / 255.0

# note new step needed - convolutional layers expect channel dimension

X_train = X_train.reshape((60000, 28, 28, 1))

X_test = X_test.reshape((10000, 28, 28, 1))

from sklearn.model_selection import train_test_split

X_test, X_valid, y_test, y_valid = train_test_split(X_test, y_test, test_size=0.33)

from keras.utils import to_categorical

y_train_cat = to_categorical(y_train)

y_test_cat = to_categorical(y_test)

y_valid_cat = to_categorical(y_valid)

from keras import layers

from keras import models

model = models.Sequential()

model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))

model.add(layers.MaxPooling2D((2, 2)))

model.add(layers.Conv2D(64, (3, 3), activation='relu'))

model.add(layers.Flatten())

model.add(layers.Dense(64, activation='relu'))

model.add(layers.Dense(10, activation='softmax'))

model.summary()

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv2d_1 (Conv2D) | (None, 26, 26, 32) | 320 |
| max_pooling2d_1 (MaxPooling2 | (None, 13, 13, 32) | 0 |
| conv2d_2 (Conv2D) | (None, 11, 11, 64) | 18496 |
| flatten_1 (Flatten) | (None, 7744) | 0 |
| dense_1 (Dense) | (None, 64) | 495680 |
| dense_2 (Dense) | (None, 10) | 650 |

Total params: 515,146

Trainable params: 515,146

Non-trainable params: 0
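The counts in this summary can also be reproduced by hand. A convolutional layer has one weight per kernel pixel, input channel and output channel, plus one bias per output channel. A small sketch (the helper names are made up for illustration):

```python
def conv_params(kh, kw, c_in, c_out):
    """Kernel weights (kh * kw * c_in * c_out) plus biases (c_out)."""
    return kh * kw * c_in * c_out + c_out

def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus biases (n_out)."""
    return n_in * n_out + n_out

conv_1 = conv_params(3, 3, 1, 32)        # 28x28x1  -> 26x26x32
conv_2 = conv_params(3, 3, 32, 64)       # 13x13x32 -> 11x11x64 (after 2x2 pooling)
flat = 11 * 11 * 64                      # flatten has no parameters
dense_1 = dense_params(flat, 64)
dense_2 = dense_params(64, 10)
total = conv_1 + conv_2 + dense_1 + dense_2

print(conv_1, conv_2, flat, dense_1, dense_2, total)
```

Note that most of the parameters sit in the first dense layer after the flatten, not in the convolutional layers.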

model.compile(optimizer=tf.train.AdamOptimizer(),

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(X_train, y_train_cat, validation_data=(X_valid,y_valid_cat), epochs=20)

Train on 60000 samples, validate on 3300 samples

| Epoch | Time | loss | acc | val_loss | val_acc |
|---|---|---|---|---|---|
| 1/20 | 40s 671us/step | 0.1236 | 0.9622 | 0.0452 | 0.9885 |
| 2/20 | 39s 658us/step | 0.0394 | 0.9878 | 0.0348 | 0.9891 |
| 3/20 | 40s 665us/step | 0.0268 | 0.9915 | 0.0330 | 0.9909 |
| 4/20 | 39s 642us/step | 0.0192 | 0.9936 | 0.0226 | 0.9936 |
| 5/20 | 40s 659us/step | 0.0133 | 0.9958 | 0.0292 | 0.9918 |
| 6/20 | 40s 673us/step | 0.0096 | 0.9970 | 0.0354 | 0.9909 |
| 7/20 | 39s 655us/step | 0.0085 | 0.9972 | 0.0305 | 0.9921 |
| 8/20 | 40s 667us/step | 0.0071 | 0.9977 | 0.0467 | 0.9912 |
| 9/20 | 40s 671us/step | 0.0054 | 0.9984 | 0.0308 | 0.9930 |
| 10/20 | 40s 664us/step | 0.0059 | 0.9983 | 0.0427 | 0.9927 |
| 11/20 | 43s 717us/step | 0.0058 | 0.9983 | 0.0312 | 0.9930 |
| 12/20 | 40s 663us/step | 0.0031 | 0.9991 | 0.0515 | 0.9918 |
| 13/20 | 40s 663us/step | 0.0051 | 0.9985 | 0.0351 | 0.9927 |
| 14/20 | 43s 715us/step | 0.0038 | 0.9989 | 0.0381 | 0.9924 |
| 15/20 | 42s 693us/step | 0.0032 | 0.9990 | 0.0441 | 0.9930 |
| 16/20 | 41s 686us/step | 0.0034 | 0.9990 | 0.0358 | 0.9933 |
| 17/20 | 43s 714us/step | 0.0035 | 0.9990 | 0.0531 | 0.9906 |
| 18/20 | 42s 699us/step | 0.0043 | 0.9990 | 0.0484 | 0.9906 |
| 19/20 | 36s 598us/step | 0.0041 | 0.9990 | 0.0381 | 0.9924 |
| 20/20 | 36s 593us/step | 0.0024 | 0.9993 | 0.0572 | 0.9900 |

model.save('myCNN1.h5')

C:\Users\keith\Anaconda3\lib\site-packages\keras\engine\saving.py:118: UserWarning: TensorFlow optimizers do not make it possible to access optimizer attributes or optimizer state after instantiation. As a result, we cannot save the optimizer as part of the model save file. You will have to compile your model again after loading it. Prefer using a Keras optimizer instead (see keras.io/optimizers).

acc = history.history['acc']

val_acc = history.history['val_acc']

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(len(acc))

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend();

test_loss, test_acc = model.evaluate(X_test, y_test_cat)

6700/6700 [==============================] - 1s 201us/step

test_acc

0.9905970149253731

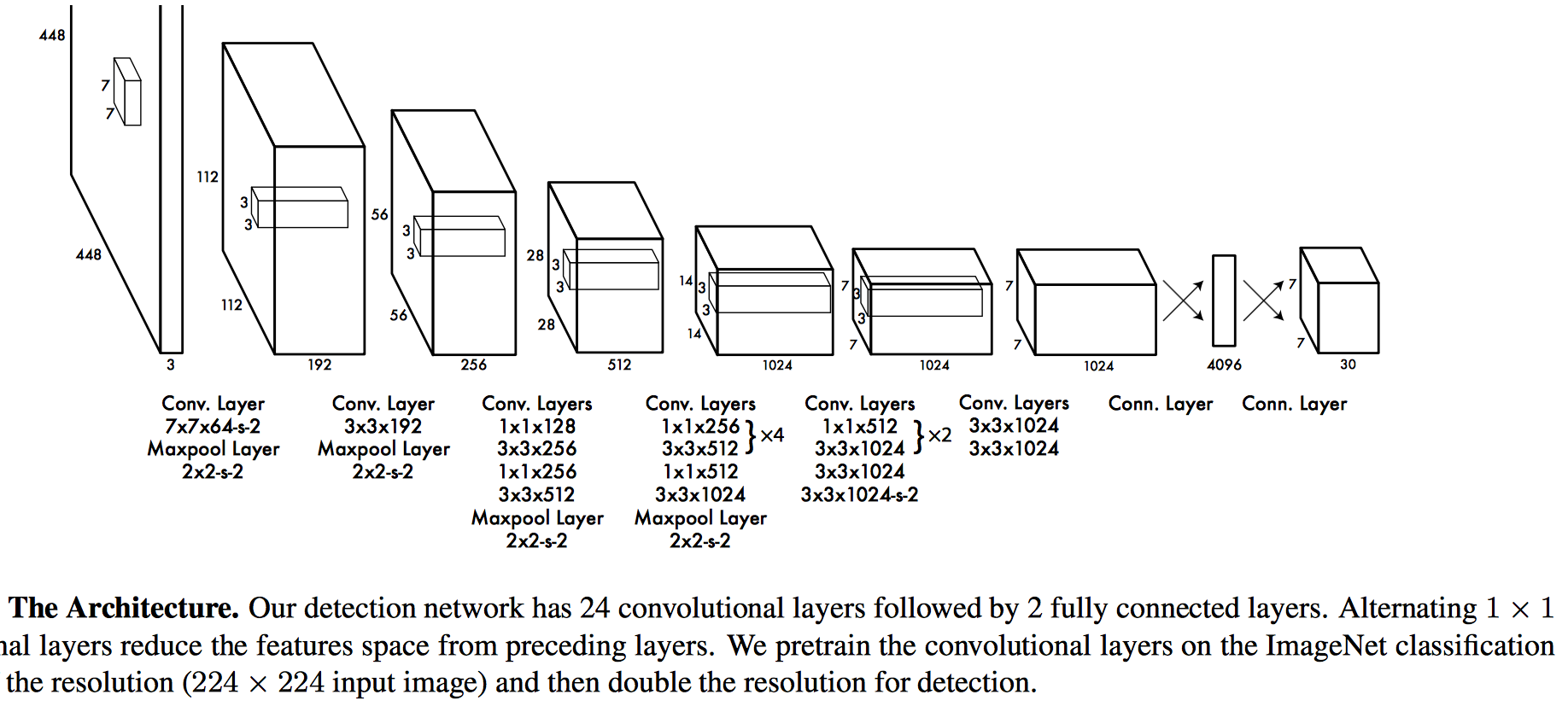

Using pre-optimized models¶

As you can see, the convolutional network performs somewhat better than the dense network here (test accuracy of about 0.991 versus 0.978), but it is also much slower to train (roughly 40 s per epoch versus 5 s).

The real power of convolutional networks becomes evident when they are trained on huge datasets, which also allow much deeper networks to be optimized without overfitting (too much).

However, this requires significant supercomputing resources and days to weeks of optimization time.

Rather than optimizing a network from scratch, however, we can load and use a pre-optimized network.