Outline:

- Data augmentation

- Python Generators

- Using (homemade) generators in Keras

- Built-in generators in Keras

Reading:

- Chollet Chapter 5

- https://docs.python.org/3/tutorial/classes.html#generators

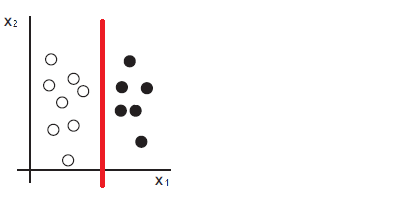

Data Augmentation: Motivation

It is essential not only that we get a lot of data to train with, but also that the data varies in important ways.

Otherwise, our network will generalize poorly to new data.


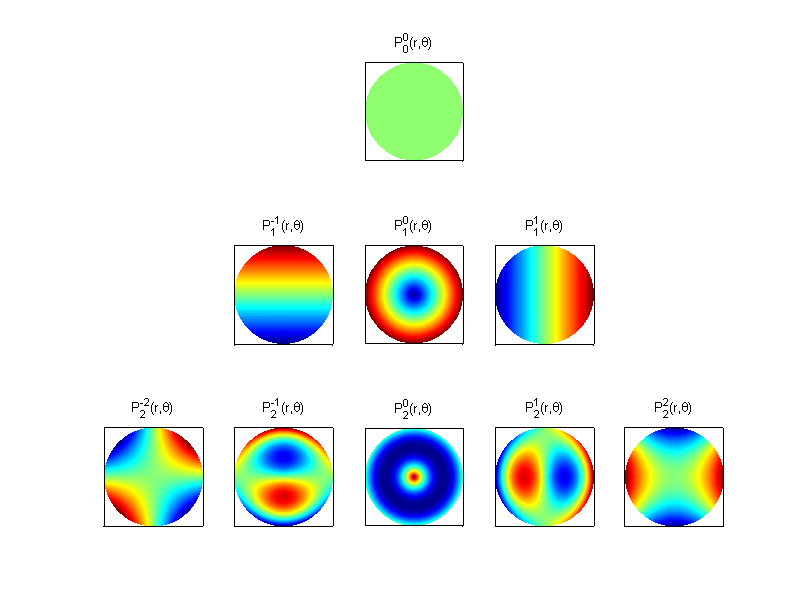

Invariant Features

A concept from computer vision:

Compute features that are unchanged by certain variations of the image.

Example: the inner product of an image with a rotationally symmetric shape (as with image moments) gives a number that is invariant to rotation about the shape's center.
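Here is a small NumPy sketch that makes this invariance concrete. It is an illustration, not code from the lecture. The Gaussian-shaped kernel is one arbitrary choice of rotationally symmetric shape. The example only uses 90 degree rotations via `np.rot90` so that no interpolation is needed. At arbitrary angles, pixel resampling makes the invariance only approximate.

```python
import numpy as np

# A rotationally symmetric kernel: its value depends only on the distance
# from the center pixel (odd-sized grid, so the center is an actual pixel).
size = 9
c = size // 2
yy, xx = np.mgrid[0:size, 0:size]
kernel = np.exp(-((yy - c) ** 2 + (xx - c) ** 2) / 8.0)

rng = np.random.default_rng(0)
image = rng.random((size, size))  # stand-in for an image patch

# Feature = inner product of the image with the kernel.
feature = np.sum(image * kernel)
feature_rot = np.sum(np.rot90(image) * kernel)  # same patch rotated by 90 degrees

print(np.isclose(feature, feature_rot))  # True
```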

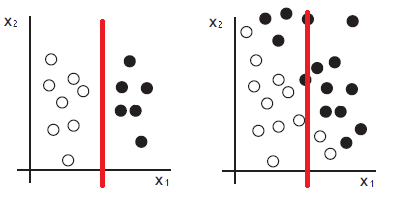

Deep Network Invariance to Transformations

Suppose $\mathbf x$ is image data and $\mathbf R_\theta$ is a rotation by angle $\theta$. Then we want our model $f(\mathbf x) = y$ to make the same prediction regardless of rotation, i.e.

$f(\mathbf x) = f(\mathbf R_{\theta_1} \mathbf x) = f(\mathbf R_{\theta_2} \mathbf x) = \dots = y$

In a data-driven approach, we encourage this by adding the training pairs $(\mathbf x^{(k)},y^{(k)}), (\mathbf R_{\theta_1} \mathbf x^{(k)},y^{(k)}), (\mathbf R_{\theta_2} \mathbf x^{(k)},y^{(k)}), \dots$

That is, we create rotated versions of each image and train on them with the same label.

More generally, creating label-preserving variations of the training data like this is called data augmentation.
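Below is a minimal NumPy sketch of this idea. It assumes square images stored in an array `X` of shape `(N, H, W)` with labels `y`. The helper name `augment_with_rotations` is hypothetical. It uses only 90 degree rotations (via `np.rot90`) so the example needs no interpolation.

```python
import numpy as np

def augment_with_rotations(X, y):
    """Return X and y extended with 90, 180 and 270 degree rotations.

    X: array of images with shape (N, H, W) or (N, H, W, C), with H == W.
    y: array of N labels; each rotated copy keeps its original label.
    """
    rotated = [np.rot90(X, k=k, axes=(1, 2)) for k in range(4)]  # k=0 is the original
    X_aug = np.concatenate(rotated, axis=0)
    y_aug = np.concatenate([y] * 4, axis=0)  # same label order for every rotation
    return X_aug, y_aug

# Toy example: 5 random 28x28 grayscale "images" with labels 0..4.
X = np.random.default_rng(0).random((5, 28, 28))
y = np.arange(5)
X_aug, y_aug = augment_with_rotations(X, y)
print(X_aug.shape, y_aug.shape)  # (20, 28, 28) (20,)
```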

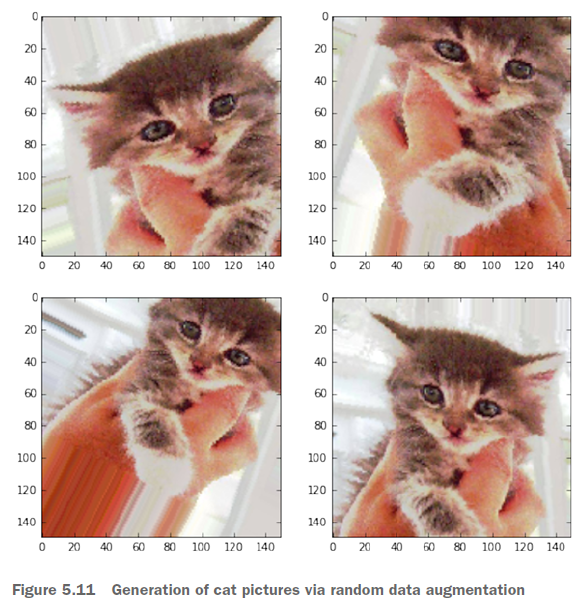

Exercise: Image variations

Suppose you want to make a network that can recognize cats in pictures (of all kinds).

List all the ways you can think of in which cat pictures might vary.

Which of these variations can be applied with software (e.g., MS Paint or Photoshop)?

Data Augmentation

Usually, we know many ways in which the data should be able to vary, even if our dataset contains few examples of these variations.

We can easily use our dataset to create new data with these variations.
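For example, several common variations can be produced with plain NumPy array operations. The sketch below is only an illustration. The function name `random_variation` and its shift and brightness ranges are assumptions. The shift uses `np.roll`, which wraps pixels around the border instead of padding.

```python
import numpy as np

def random_variation(image, rng, max_shift=2, brightness_range=(0.8, 1.2)):
    """Return a randomly flipped, shifted and brightness-scaled copy of `image`.

    `image` is a 2-D float array with values in [0, 1]. Parameter names and
    ranges are illustrative assumptions.
    """
    out = image.copy()
    if rng.random() < 0.5:
        out = np.fliplr(out)  # horizontal flip
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    out = np.roll(out, shift=(dy, dx), axis=(0, 1))  # shift (wraps around the edges)
    out = out * rng.uniform(*brightness_range)  # lighting change
    return np.clip(out, 0.0, 1.0)  # keep pixel values in [0, 1]

rng = np.random.default_rng(42)
image = rng.random((28, 28))
new_images = [random_variation(image, rng) for _ in range(10)]  # 10 new variants
```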

Big Data problem:

Suppose you had 500 cat pictures for training, and you wanted to apply the following variations:

- 100 different shifts

- 10 different scales

- 20 different lighting variations

- 10 different color variations

- 36 different rotations

- 4 different orientations

How many images would you end up with?

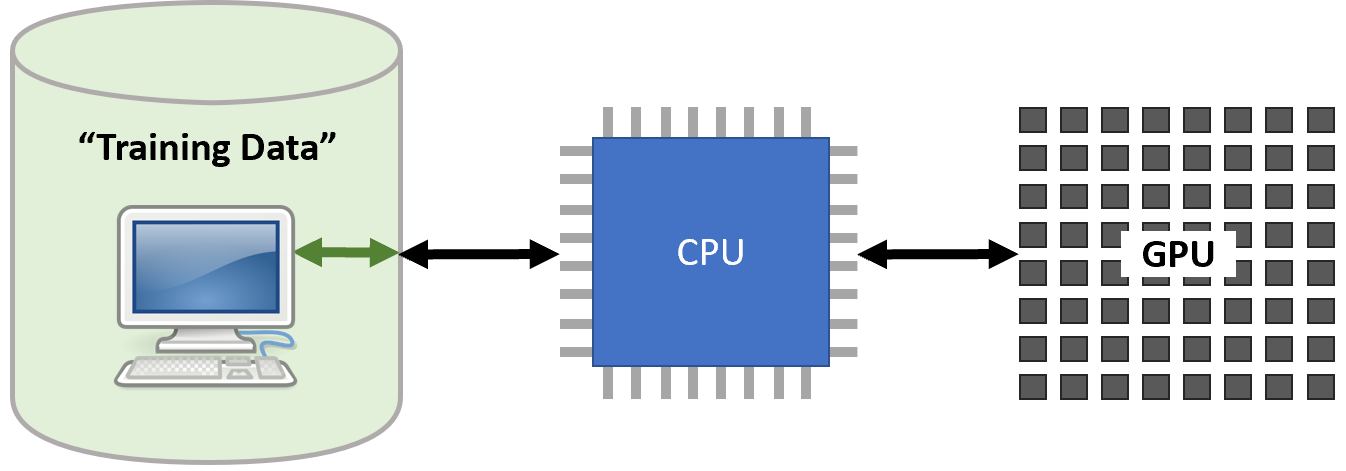
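The count is the number of training images times the number of combinations of variations, assuming every combination is applied to every image. The storage estimate also assumes a hypothetical image size of 224x224 RGB at one byte per channel.

```python
import math

n_images = 500
variations = {
    "shifts": 100,
    "scales": 10,
    "lighting": 20,
    "colors": 10,
    "rotations": 36,
    "orientations": 4,
}
# Every combination of variations applied to every image:
total = n_images * math.prod(variations.values())
print(f"{total:,} images")  # 14,400,000,000 images

# Storage, assuming (hypothetically) 224x224 RGB images at 1 byte per channel:
bytes_per_image = 224 * 224 * 3
print(f"{total * bytes_per_image / 1e15:.2f} PB")  # 2.17 PB
```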

Solution: On-the-fly Data generation

A function loads the data and feeds it to the network batch by batch during training.

This can be used to process data stored remotely, or simply data that is too large to fit in RAM.

It can also modify the data for augmentation while loading it.

The approach is based on a common programming technique called generators.

Long story short: pass a generator object (created by calling a generator function) instead of the $\mathbf X$ and $y$ data inputs.
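Here is a minimal NumPy-only sketch of such a generator. It yields `(X_batch, y_batch)` pairs forever. It applies a random horizontal flip to each image as the batch is produced, so the augmented dataset is never stored in full. The function name `augmenting_batch_generator`, its parameters and the toy data are assumptions for illustration.

```python
import numpy as np

def augmenting_batch_generator(X, y, batch_size=32, seed=None):
    """Yield (X_batch, y_batch) forever, flipping each image left-right with probability 0.5.

    X has shape (N, H, W) or (N, H, W, C). Only one augmented batch exists in
    memory at a time.
    """
    rng = np.random.default_rng(seed)
    n = len(X)
    while True:  # loop over the data indefinitely
        order = rng.permutation(n)  # reshuffle on every pass
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            X_batch = X[idx]  # fancy indexing returns a copy, so X is not modified
            flip = rng.random(len(idx)) < 0.5
            X_batch[flip] = X_batch[flip][:, :, ::-1]  # reverse the width axis
            yield X_batch, y[idx]

# Toy data: 100 random 28x28 images; each label equals the image's index.
X = np.random.default_rng(0).random((100, 28, 28))
y = np.arange(100)
gen = augmenting_batch_generator(X, y, batch_size=32, seed=1)
X_batch, y_batch = next(gen)
print(X_batch.shape, y_batch.shape)  # (32, 28, 28) (32,)
```

The generator object `gen`, not the arrays `X` and `y`, is what would be handed to the training loop.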

Python Iterators and Generators

- Classes

- Iterators

- Generators

- Keras Generators

0. Python classes

```python
# Can make a C-style struct using an empty class
class Employee:
    pass

john = Employee()  # Create an empty employee record

# Fill the fields of the record
john.name = 'John Doe'
john.dept = 'computer lab'
john.salary = 1000
```

```python
# Can define variables inside a class, and encapsulate functions (methods) too
class MyClass:
    """A simple example class"""
    i = 12345

    def f(self):
        return 'hello world'

    def g():  # this won't work: Python passes self when it is called anyway
        return "hi"

c = MyClass()
c.f()
```

```
'hello world'
```

```python
c.i
```

```
12345
```

```python
c.g()
```

```
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
<ipython-input-146-7e97d8d6150b> in <module>()
----> 1 c.g()

TypeError: g() takes 0 positional arguments but 1 was given
```
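Here are two ways to fix `g()`, as a sketch. You can give it a `self` parameter that it simply ignores. Or you can mark it with the built-in `@staticmethod` decorator so that Python does not pass the instance. The method name `h` is made up for this example.

```python
class MyClass:
    """A simple example class, with two ways to fix g()."""
    i = 12345

    def f(self):
        return 'hello world'

    def g(self):  # fix 1: accept self, even though it is unused
        return "hi"

    @staticmethod
    def h():  # fix 2: a static method receives no implicit self
        return "hi"

c = MyClass()
print(c.g(), c.h(), MyClass.h())  # hi hi hi
```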

```python
class Complex0:
    r = 0
    i = 0
    print("hello")  # runs once, when the class is defined
```

```
hello
```

```python
a = Complex0()  # <-- parentheses are important or very strange things happen
print(a.r)
a.r = 1
print(a.r)
a = Complex0()
print(a.r)
```

```
0
1
0
```
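Here is one likely reading of the "very strange things" warning above. Without parentheses, `a = Complex0` binds the class itself rather than creating an instance. Then `a.r = 1` changes the class attribute that every instance sees. The sketch below demonstrates this. It also shows that assigning to an attribute of an instance creates an instance attribute, which shadows the class attribute.

```python
class Complex0:
    r = 0
    i = 0

a = Complex0  # no parentheses: `a` is the class itself, not an instance
a.r = 1  # ...so this changes the class attribute shared by every instance
b = Complex0()
print(b.r)  # 1, not 0

c = Complex0()  # with parentheses: a new instance
c.r = 5  # creates an instance attribute that shadows the class attribute
print(Complex0.r, c.r)  # 1 5
```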

Constructors

Use the `__init__()` method for constructor code: it runs every time a new instance is created.

```python
class Complex0:
    i = 0
    r = 0

    def __init__(self):  # self is always the first argument of __init__
        self.r = 0
        self.i = 0
        print("hello")

a = Complex0()
print(a.r)
a.r = 1
print(a.r)
a = Complex0()
print(a.r)
```

```
hello
0
1
hello
0
```

```python
class Complex:
    def __init__(self, realpart=0, imagpart=0):
        self.r = realpart
        self.i = imagpart
        print("hello")

a = Complex(0, 0)
print(a.r)
```

```
hello
0
```

1. Iterators

Iterators use the `__iter__()` and `__next__()` methods of a class.

```python
# Some examples of iteration (for types that have built-in support)
for element in [1, 2, 3]:
    print(element)
for element in (1, 2, 3):
    print(element)
for key in {'one': 1, 'two': 2}:
    print(key)
for char in "123":
    print(char)
#for line in open("myfile.txt"):
#    print(line, end='')
```

```
1
2
3
1
2
3
one
two
1
2
3
```

```python
s = 'abc'
it = iter(s)
print(it)
print(next(it))
print(next(it))
print(next(it))
print(next(it))  # the iterator is exhausted: raises StopIteration
print(next(it))
```

```
<str_iterator object at 0x7fee88644eb8>
a
b
c
---------------------------------------------------------------------------
StopIteration                             Traceback (most recent call last)
<ipython-input-162-da7cadc5f5ac> in <module>()
      5 print(next(it))
      6 print(next(it))
----> 7 print(next(it))
      8 print(next(it))
      9 for i in it:

StopIteration:
```

```python
s = 'abc'
it = iter(s)
for i in it:
    print(i)
```

```
a
b
c
```
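Under the hood, a `for` loop uses exactly this protocol. The loop above behaves roughly like the following explicit version. This is a simplified sketch. The list `collected` is only there to record the result.

```python
s = 'abc'
it = iter(s)  # what `for` calls first
collected = []
while True:
    try:
        char = next(it)  # what `for` calls on every pass
    except StopIteration:  # raised when the iterator is exhausted
        break
    collected.append(char)
print(collected)  # ['a', 'b', 'c']
```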

To add iterator support to a class, define the `__iter__()` and `__next__()` methods:

```python
class Reverse:
    """Iterator for looping over a sequence backwards."""

    def __init__(self, data):
        self.data = data
        self.index = len(data)

    def __iter__(self):  # notifies Python that this is iterable
        return self

    def __next__(self):  # defines what happens at each iteration
        if self.index == 0:
            raise StopIteration  # notify Python that iteration has ended
        self.index = self.index - 1
        return self.data[self.index]

rev = Reverse('spam')
for char in rev:
    print(char)
```

```
m
a
p
s
```

2. Generators

Generators are a simpler way to write iterators: Python automatically creates the `__iter__()` and `__next__()` methods behind the scenes.

Local variables are saved automatically between calls, so there is no need to store them as attributes (like `self.index` in `Reverse`).

The `StopIteration` exception is raised automatically when the generator finishes.
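Here is a quick check of these claims, using a hypothetical generator function `count_down`. The generator object has `__iter__()` and `__next__()` without us writing them. Its local variable `n` survives between calls. It raises `StopIteration` on its own when the function body ends.

```python
def count_down(n):
    while n > 0:
        yield n
        n -= 1  # local variable n is kept between calls

g = count_down(2)
print(iter(g) is g)  # True: __iter__ was created for us
print(g.__next__(), next(g))  # 2 1: so was __next__
try:
    next(g)
except StopIteration:
    print("StopIteration raised automatically")
```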

```python
def veryshort():
    yield "hi"  # <--- "yield" instead of "return" makes it a generator

for string in veryshort():
    print(string)
```

```
hi
```

```python
def globalcounter():
    for i in range(0, 9999):
        yield i

def globalcounter2():  # counts forever, starting at 1
    i = 0
    while True:
        i = i + 1
        yield i

g = globalcounter()
print(next(g))
print(next(g))
```

```
0
1
```

```python
def reverse(data):
    for index in range(len(data)-1, -1, -1):
        yield data[index]

for char in reverse('golf'):
    print(char)
```

```
f
l
o
g
```

Execution pauses at each `yield` and resumes right after it when the next value is requested.
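To see the pausing directly, this sketch uses a hypothetical generator `traced`. It records an event each time its body runs, instead of printing, so the order of execution can be inspected:

```python
events = []

def traced():
    events.append("started")
    yield 1  # pauses here after handing out 1
    events.append("resumed after first yield")
    yield 2
    events.append("finished")  # falling off the end raises StopIteration

g = traced()
print(events)  # [] (nothing runs until the first next())
print(next(g))  # 1
print(events)  # ['started']
print(next(g))  # 2
print(events)  # ['started', 'resumed after first yield']
```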

We can take advantage of this pausing behavior by looping over an iterator inside the generator:

```python
def longer():
    for i in range(0, 7):
        yield i * i

for k in longer():
    print(k)
```

```
0
1
4
9
16
25
36
```

The generator is then a wrapper around the built-in iterator (here, `range`) that runs extra code (here, squaring) on each item.

More examples:

```python
# This one iterates over the even numbers forever
def all_even():
    n = 0
    while True:
        yield n
        n += 2
```

```python
# Python can use the generator like a list: sum() accepts any iterable
def firstn3(n):
    num = 0
    while num < n:
        yield num
        num += 1

sum_of_first_n = sum(firstn3(1000000))
```
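This is also why generators suit data that does not fit in RAM. As a rough illustration, compare a list of one million integers with a generator that produces the same values. Exact byte counts from `sys.getsizeof` depend on the Python version and platform. `firstn` is the same function as `firstn3` above, repeated so that the block stands alone.

```python
import sys

def firstn(n):
    num = 0
    while num < n:
        yield num
        num += 1

n = 1_000_000
as_list = list(range(n))  # all one million ints are stored at once
as_gen = firstn(n)  # produces one int at a time

print(sys.getsizeof(as_list))  # roughly 8 MB on 64-bit CPython (the pointer array alone)
print(sys.getsizeof(as_gen))  # a small constant size, independent of n
print(sum(as_gen) == sum(as_list))  # True
```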

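Using data generators as inputs in Keras

In the Sequential model, use:

- `fit_generator()` instead of `fit()`

- `evaluate_generator()` instead of `evaluate()`

- `predict_generator()` instead of `predict()`

(In TensorFlow 2.1 and later, these `*_generator()` methods are deprecated because `fit()`, `evaluate()` and `predict()` accept generators directly.)

https://keras.io/models/sequential/#fit_generator

`fit_generator()` trains the model on data generated batch by batch by a Python generator (or an instance of `Sequence`).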

```python
fit_generator(
    generator,
    steps_per_epoch=None,
    epochs=1,
    verbose=1,
    callbacks=None,
    validation_data=None,
    validation_steps=None,
    validation_freq=1,
    class_weight=None,
    max_queue_size=10,
    workers=1,
    use_multiprocessing=False,
    shuffle=True,
    initial_epoch=0)
```

`generator` argument: a generator or an instance of `Sequence` (`keras.utils.Sequence`); a `Sequence` avoids duplicated data when using multiprocessing. The output of the generator must be either

- a tuple `(inputs, targets)`, or

- a tuple `(inputs, targets, sample_weights)`.

This tuple (a single output of the generator) makes a single batch. Therefore, all arrays in this tuple must have the same length (equal to the size of this batch). Different batches may have different sizes. For example, the last batch of the epoch is commonly smaller than the others, if the size of the dataset is not divisible by the batch size.

The generator is expected to loop over its data indefinitely.

An epoch finishes when steps_per_epoch batches have been seen by the model.
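Here is a NumPy-only sketch of a generator that follows this contract. The names `keras_style_generator` and `batch_size` and the toy array shapes are assumptions. The sketch also shows one way to choose `steps_per_epoch`. Rounding `len(X) / batch_size` up with `math.ceil` gives an integer number of batches that covers the whole dataset once, with a smaller final batch.

```python
import math
import numpy as np

def keras_style_generator(X, y, batch_size):
    """Yield (inputs, targets) tuples forever, in the format fit_generator() expects.

    The last batch of each pass is smaller when len(X) is not a multiple of
    batch_size.
    """
    n = len(X)
    while True:  # loop over the data indefinitely
        for start in range(0, n, batch_size):
            yield X[start:start + batch_size], y[start:start + batch_size]

X = np.zeros((1000, 28, 28, 1))  # toy inputs
y = np.zeros((1000, 10))  # toy one-hot targets
batch_size = 64
steps_per_epoch = math.ceil(len(X) / batch_size)  # 16: 15 full batches + 1 batch of 40
gen = keras_style_generator(X, y, batch_size)
sizes = [len(next(gen)[0]) for _ in range(steps_per_epoch)]
print(steps_per_epoch, sizes[-1])  # 16 40
```

With a compiled Keras model, the generator would then be passed as, for example, `model.fit_generator(gen, steps_per_epoch=steps_per_epoch, epochs=10)`.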

Lab: inputs via data generators

Adapt your CNN model to feed its inputs via a data generator.

Apply a random horizontal flip to the images in your generators. First apply it only when testing and measure the accuracy, then apply it during training too.

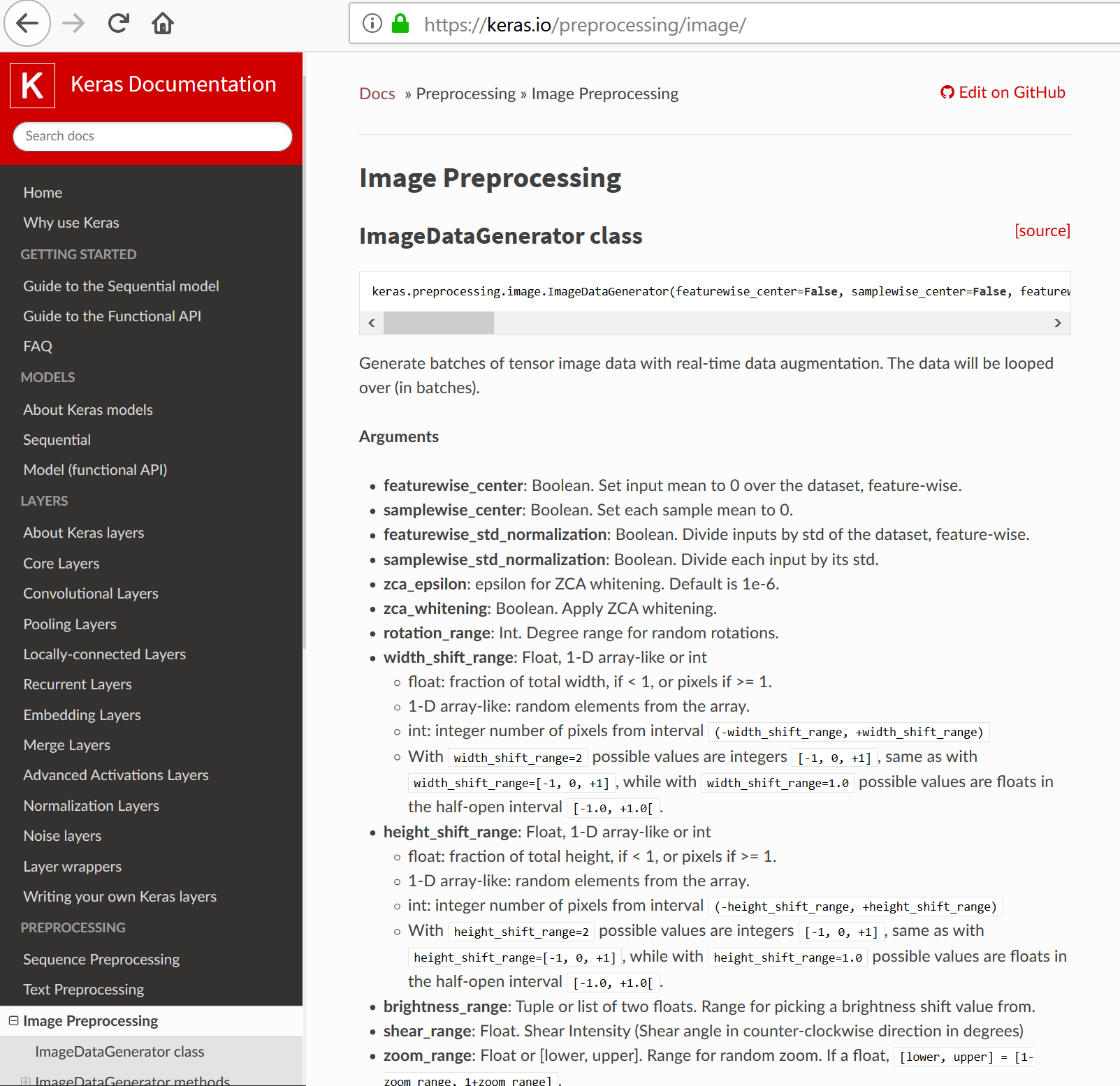

Keras Data Generators

Keras also has built-in functions for popular image data-augmentation tasks in the preprocessing library.

```python
from keras import layers
from keras import models

# A small CNN for 28x28 grayscale images with 10 classes
model = models.Sequential()
model.add(layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)))
model.add(layers.MaxPooling2D((2, 2)))
model.add(layers.Conv2D(64, (3, 3), activation='relu'))
model.add(layers.Flatten())
model.add(layers.Dense(64, activation='relu'))
model.add(layers.Dense(10, activation='softmax'))
model.summary()
```

```
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_7 (Conv2D)            (None, 26, 26, 32)        320
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 13, 13, 32)        0
_________________________________________________________________
conv2d_8 (Conv2D)            (None, 11, 11, 64)        18496
_________________________________________________________________
flatten_4 (Flatten)          (None, 7744)              0
_________________________________________________________________
dense_7 (Dense)              (None, 64)                495680
_________________________________________________________________
dense_8 (Dense)              (None, 10)                650
=================================================================
Total params: 515,146
Trainable params: 515,146
Non-trainable params: 0
_________________________________________________________________
```

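```python
import tensorflow as tf  # tf.train.AdamOptimizer is the TensorFlow 1.x API

model.compile(optimizer=tf.train.AdamOptimizer(),
              loss='categorical_crossentropy',
              metrics=['accuracy'])

#history = model.fit(X_train, y_train_cat, validation_data=(X_valid, y_valid_cat), epochs=10)

# training_generator(), validation_generator(), X_train, X_valid and blocksize
# (the batch size) come from the lab and are not defined in this section.
traingen0 = training_generator()
validgen0 = validation_generator()

history = model.fit_generator(traingen0,
                              steps_per_epoch=len(X_train)/blocksize,
                              validation_data=validgen0,
                              validation_steps=len(X_valid)/blocksize,
                              epochs=10)
```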

```
Epoch 1/10
938/937 [==============================] - 45s 48ms/step - loss: 0.1516 - acc: 0.9544 - val_loss: 0.0587 - val_acc: 0.9800
Epoch 2/10
938/937 [==============================] - 46s 49ms/step - loss: 0.0461 - acc: 0.9860 - val_loss: 0.0389 - val_acc: 0.9867
Epoch 3/10
938/937 [==============================] - 37s 40ms/step - loss: 0.0286 - acc: 0.9914 - val_loss: 0.0387 - val_acc: 0.9870
Epoch 4/10
938/937 [==============================] - 38s 40ms/step - loss: 0.0191 - acc: 0.9943 - val_loss: 0.0417 - val_acc: 0.9885
Epoch 5/10
938/937 [==============================] - 40s 42ms/step - loss: 0.0148 - acc: 0.9955 - val_loss: 0.0389 - val_acc: 0.9885
Epoch 6/10
938/937 [==============================] - 38s 40ms/step - loss: 0.0132 - acc: 0.9954 - val_loss: 0.0477 - val_acc: 0.9879
Epoch 7/10
938/937 [==============================] - 39s 41ms/step - loss: 0.0096 - acc: 0.9969 - val_loss: 0.0639 - val_acc: 0.9821
Epoch 8/10
938/937 [==============================] - 40s 43ms/step - loss: 0.0081 - acc: 0.9975 - val_loss: 0.0425 - val_acc: 0.9891
Epoch 9/10
938/937 [==============================] - 55s 58ms/step - loss: 0.0060 - acc: 0.9981 - val_loss: 0.0406 - val_acc: 0.9909
Epoch 10/10
938/937 [==============================] - 40s 43ms/step - loss: 0.0066 - acc: 0.9981 - val_loss: 0.0610 - val_acc: 0.9858
```

```python
testgen0 = testing_generator()  # testing_generator() and X_test also come from the lab
test_loss, test_acc = model.evaluate_generator(testgen0, steps=len(X_test)/blocksize)
print(test_acc)
```

```
0.9859701492893163
```