Hacking Math I

Spring 2020

Topic 4: Advanced Functions

This topic:

- Activation functions

- Vector functions

- Linear functions

- Compositions and diagrams of functions

Reading:

- I2ALA Chapter 2 (Linear functions)

I. Activation functions

Recall Python functions

def f(x): # <--- def keyword and colon after name and arguments
    return x**2 # <--- return output (the function body must be indented)

y = f(4)

print("y =",y)

y = 16

Scalar-valued Function $f(x)$ for scalar input $x$

- inputs $x \in \mathbb R$, the domain of $f$

- targets (function values) $f(x) \in \mathbb R$, which lie in the codomain of $f$; the set of all values actually attained is the image of $f$

This says:

- $f$ is a mapping from $\mathbb R$ to $\mathbb R$

- $f$ assigns every input scalar $x$ to exactly one output scalar $f(x)$

(recall we are exclusively working with real numbers in this class)

Activation functions

An artificial neuron is "on" or "off" depending on its inputs.

This on/off behavior is modeled with the activation function, a scalar function of a scalar input.

Popular activation functions, in historical order:

- step function

- sign function

- logistic function

- rectified linear unit

Exercise: code these in Python

Exercise: convert a step function into a sign function and vice versa
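A minimal NumPy sketch of the step and sign functions and of the two conversions. Conventions at $z=0$ differ between textbooks; here we assume both functions return their "on" value (1 and +1) at $z=0$, which makes $\text{sign}(z) = 2\,\text{step}(z) - 1$ and $\text{step}(z) = (\text{sign}(z)+1)/2$ hold for every $z$. With NumPy's built-in `np.sign`, which returns 0 at 0, the conversions hold only for $z \neq 0$.

```python
import numpy as np

# Assumed convention: both functions return their "on" value at z = 0.
def step(z):
    """Step function: 1 for z >= 0, else 0."""
    return np.where(np.asarray(z) >= 0, 1.0, 0.0)

def sign(z):
    """Sign function: +1 for z >= 0, else -1 (np.sign(0) would return 0 instead)."""
    return np.where(np.asarray(z) >= 0, 1.0, -1.0)

def sign_from_step(z):
    # Stretch {0, 1} to {0, 2}, then shift down to {-1, 1}.
    return 2 * step(z) - 1

def step_from_sign(z):
    # Shift {-1, 1} up to {0, 2}, then halve to {0, 1}.
    return (sign(z) + 1) / 2

z = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(step(z))            # [0. 0. 1. 1. 1.]
print(sign_from_step(z))  # [-1. -1.  1.  1.  1.]
```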

Logistic Function

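$$f(z) = \frac{c}{1+e^{-a(z-z_0)}}$$

$a$, $c$, and $z_0$ are constants: for $a > 0$, $c$ is the value approached as $z \to \infty$, $a$ sets the steepness, and $z_0$ is the midpoint, where $f(z_0) = c/2$.

The sigmoid activation function is the special case $c=1$, $z_0=0$, $a=1$ (the typical choice):

$$\sigma(z) = \frac{1}{1+e^{-z}}$$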

Exercise: code in Python
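One possible Python version of the logistic function, with the sigmoid as the special case $c=1$, $a=1$, $z_0=0$. The parameter names `c`, `a`, `z0` mirror the formula; the default values are an assumption chosen so that `logistic(z)` alone gives the sigmoid.

```python
import numpy as np

def logistic(z, c=1.0, a=1.0, z0=0.0):
    """General logistic function c / (1 + exp(-a (z - z0))), applied elementwise."""
    return c / (1.0 + np.exp(-a * (np.asarray(z, dtype=float) - z0)))

def sigmoid(z):
    """Sigmoid = logistic with c = 1, a = 1, z0 = 0."""
    return logistic(z, c=1.0, a=1.0, z0=0.0)

print(sigmoid(0.0))                   # 0.5
print(logistic(3.0, c=10.0, z0=3.0))  # 5.0, i.e. c/2 at the midpoint z0
```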

Rectified Linear Unit

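$$ \text{ReLU}(z) = \alpha \max(0,z)= \begin{cases} 0, & \text{if } z \le 0 \\ \alpha z, & \text{if } z > 0 \end{cases} $$

The standard ReLU uses $\alpha = 1$. It was the popular activation function when deep learning became famous.

It is not differentiable at $z=0$, but its gradient is still simple to compute: $0$ for $z<0$ and $\alpha$ for $z>0$.

Variants such as "Leaky ReLU" use a small nonzero slope for $z<0$, so that the gradient is not exactly zero for negative inputs.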

Exercise: code in Python
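A sketch of ReLU, its gradient, and a Leaky ReLU. The value used for the gradient at $z=0$ (where it is undefined) and the leaky slope `0.01` are assumed conventions, not part of these notes.

```python
import numpy as np

def relu(z, alpha=1.0):
    """ReLU(z) = alpha * max(0, z), applied elementwise."""
    return alpha * np.maximum(0.0, z)

def relu_grad(z, alpha=1.0):
    """Derivative of ReLU: 0 for z < 0, alpha for z > 0.
    Undefined at z = 0; this sketch uses 0 there (an assumed convention)."""
    return np.where(np.asarray(z) > 0, alpha, 0.0)

def leaky_relu(z, slope=0.01):
    """Leaky ReLU: z for z > 0, slope * z otherwise (slope = 0.01 is an assumed default)."""
    z = np.asarray(z, dtype=float)
    return np.where(z > 0, z, slope * z)

z = np.array([-2.0, 0.0, 3.0])
print(relu(z))       # [0. 0. 3.]
print(relu_grad(z))  # [0. 0. 1.]
print(leaky_relu(z))
```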

II. Vector Functions

Function $f$

A function relates two quantities:

- inputs $x \in \mathbb R^n$, the domain of $f$

- targets (function values) $f(x) \in \mathbb R^m$, which lie in the codomain of $f$; the set of all values actually attained is the image of $f$

This says:

- $f$ is a mapping from $\mathbb R^n$ to $\mathbb R^m$

- $f$ assigns every input vector $x$ to exactly one output vector $f(x)$

(recall we are exclusively working with real numbers in this class)

Univariate function

\begin{align} f:\mathbb R &\rightarrow \mathbb R \end{align}

Examples:

- most familiar elementary functions: $\sin,\cos$, $\exp$, $x^n$, and, on suitably restricted domains, $\log,\ln$, $\sqrt{x},...$

- activation functions

Scalar-valued Function of Vectors

\begin{align} f:\mathbb R^n &\rightarrow \mathbb R \end{align}

$$f(x) = f_1(x_1,x_2,...,x_n)$$

Example

\begin{align} f:\mathbb R^4 &\rightarrow \mathbb R \end{align}

$$f(x) = x_1 + x_2 - x_4^2$$

What is $x$?
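A sketch of this function in Python, assuming $x$ is stored as a NumPy array of length 4. Note the shift from the 1-based indices of the math to Python's 0-based indices.

```python
import numpy as np

def f(x):
    """f: R^4 -> R, f(x) = x_1 + x_2 - x_4^2.
    Python indexes from 0, so x_1 is x[0] and x_4 is x[3]; x_3 = x[2] is unused."""
    x = np.asarray(x, dtype=float)
    if x.shape != (4,):
        raise ValueError("f expects a vector with exactly 4 entries")
    return x[0] + x[1] - x[3] ** 2

print(f([1.0, 2.0, 100.0, 3.0]))  # 1 + 2 - 9 = -6.0
```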

Example: the Sum function

$$f(x) = x_1+x_2+...+x_n$$

What is $x$?
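The sum function accepts a vector of any length $n$. A sketch in NumPy, which also shows that the sum equals the inner product of $x$ with the all-ones vector, $\mathbf 1^Tx$:

```python
import numpy as np

def sum_function(x):
    """f: R^n -> R, f(x) = x_1 + x_2 + ... + x_n (works for any length n)."""
    return np.sum(x)

x = np.array([1.0, 2.0, 3.0, 4.0])
print(sum_function(x))      # 10.0
print(np.ones(len(x)) @ x)  # 10.0, the same value written as 1^T x
```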

The inner product function

\begin{align} f:\mathbb R^n &\rightarrow \mathbb R \end{align}

$$f(x) = f_1(x_1,x_2,...,x_n) = a^Tx = a_1x_1+a_2x_2+...+a_nx_n$$

Think of $x$ as the variable and $a$ as a vector of fixed parameters.

Suppose both $x$ and $a$ are viewed as variables. What kind of function is $f$ then? ($\mathbb R^? \rightarrow \mathbb R^?$)
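A sketch of the inner product function with NumPy, where the entries of the fixed parameter vector `a` are arbitrary example values (an assumption for illustration). For 1-D arrays, `@` computes the inner product $a^Tx$.

```python
import numpy as np

a = np.array([2.0, -1.0, 0.5])  # fixed parameters (example values, chosen arbitrarily)

def f(x):
    """Inner product function f(x) = a^T x = a_1 x_1 + ... + a_n x_n."""
    return a @ x

x = np.array([1.0, 4.0, 2.0])
print(f(x))  # 2*1 + (-1)*4 + 0.5*2 = -1.0
```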

Linearity of inner product function

$$f(x) = f_1(x_1,x_2,...,x_n) = a^Tx = a_1x_1+a_2x_2+...+a_nx_n$$

- $f(x+y) = ?$

- $f(\alpha x) =?$
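A numerical spot-check of the two candidate identities $f(x+y) = f(x)+f(y)$ and $f(\alpha x) = \alpha f(x)$, using random vectors. This is only evidence for a guess, not a proof; the random seed, the size $n=5$, and the value of $\alpha$ are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 5
a = rng.normal(size=n)  # arbitrary fixed parameters

def f(x):
    return a @ x        # inner product function

x, y = rng.normal(size=n), rng.normal(size=n)
alpha = 3.7

# Compare both sides of each identity, up to floating-point rounding.
print(np.isclose(f(x + y), f(x) + f(y)))       # True
print(np.isclose(f(alpha * x), alpha * f(x)))  # True
```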

Linearity of inner product function - converse

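Any linear function $f:\mathbb R^n \rightarrow \mathbb R$ can be written as an inner product, $f(x) = a^Tx$ for some fixed $a \in \mathbb R^n$.

Exercise: prove this by using the linearity assumption to expand $f(x_1e_1+\cdots+x_ne_n)$, where $e_i$ is the $i$-th standard unit vector.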
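The expansion in the exercise also suggests how to find $a$ in practice: evaluating a linear $f$ at each unit vector gives $a_i = f(e_i)$. A sketch, where `linear_black_box` and `recover_coefficients` are hypothetical names; the black box stands in for a linear function whose formula we pretend not to know.

```python
import numpy as np

def linear_black_box(x):
    # Hypothetical linear function R^3 -> R; pretend its formula is hidden.
    return 4 * x[0] - 2 * x[1] + 0.5 * x[2]

def recover_coefficients(f, n):
    """Evaluate f on the standard unit vectors; by linearity a_i = f(e_i)."""
    I = np.eye(n)  # row i of the identity matrix is the unit vector e_{i+1}
    return np.array([f(I[i]) for i in range(n)])

a = recover_coefficients(linear_black_box, 3)
print(a)
x = np.array([1.0, 2.0, 3.0])
print(np.isclose(linear_black_box(x), a @ x))  # True
```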

Affine Function

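\begin{align} f:\mathbb R^n &\rightarrow \mathbb R \\ f(x) = a^T x + b, \, & a\in \mathbb R^n, b\in \mathbb R \end{align}

An affine function is a linear function with an offset $b$. It is not linear unless $b = 0$: for example, $f(0) = b$, whereas every linear function maps $0$ to $0$.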
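A quick numerical illustration that the offset breaks linearity, borrowing $a = (2, 1)^T$ and $b = 1$ from the exercise that follows. The test vectors are arbitrary choices.

```python
import numpy as np

a = np.array([2.0, 1.0])
b = 1.0

def affine(x):
    """Affine function f(x) = a^T x + b."""
    return a @ x + b

x = np.array([1.0, 0.0])
y = np.array([0.0, 1.0])
print(affine(x + y), affine(x) + affine(y))  # 4.0 5.0: superposition fails when b != 0
print(affine(np.zeros(2)))                   # 1.0 = b, but a linear function maps 0 to 0
```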

Exercise

Consider $a = \begin{pmatrix}2\\1 \end{pmatrix}$, and $b=1$

Draw the linear function $f(x)=a^Tx$ and the affine function $f(x)=a^Tx+b$
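One way to prepare the drawing is to evaluate both functions on a grid of points $x = (x_1, x_2)$. Since the input is 2-dimensional, each graph is a plane in 3-D. The arrays below could then be passed to Matplotlib, for example `plt.contour(x1, x2, f_linear)` or the `plot_surface` method of a 3-D axis; the grid range and resolution are arbitrary choices.

```python
import numpy as np

a = np.array([2.0, 1.0])
b = 1.0

# Evaluate both functions on a 5 x 5 grid over [-2, 2] x [-2, 2].
x1, x2 = np.meshgrid(np.linspace(-2, 2, 5), np.linspace(-2, 2, 5))
f_linear = a[0] * x1 + a[1] * x2  # a^T x at every grid point
f_affine = f_linear + b           # a^T x + b

# The two graphs are parallel planes: the affine one is the linear one shifted up by b.
print(np.allclose(f_affine - f_linear, b))  # True
print(f_linear[2, 2], f_affine[2, 2])       # values at the origin: 0.0 1.0
```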

Exercise: Regression

- We have data on drug dosage and the resulting blood concentration of the drug.

- We want a model to help us predict what dose to give to achieve a desired blood concentration.

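import numpy as np
import matplotlib.pyplot as plt
# The cell that created these arrays is not shown; values are copied from the printed output below.
dosage = np.linspace(0, 1000, 7)
conc_noisy = np.array([17.06, 31.89, 35.01, 34.18, 65.33, 79.27, 47.47])

print("dosage = " + str(np.round(dosage, 2)))
print("blood concentration = " + str(np.round(conc_noisy, 2)))
plt.scatter(dosage, conc_noisy, color='black', linewidth=1.5)  # linewidth is a number, not a string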

dosage = [ 0. 166.67 333.33 500. 666.67 833.33 1000. ]

blood concentration = [17.06 31.89 35.01 34.18 65.33 79.27 47.47]
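A possible starting point, assuming an affine model (concentration approximately slope times dosage plus intercept) fitted by least squares with `np.polyfit`. The choice of model is an assumption, not something the notes prescribe. Inverting the fitted line then gives a dose for a desired concentration; the target value 50 is arbitrary, and the data are the rounded values printed above.

```python
import numpy as np

# Data as printed above (concentrations rounded to 2 decimals).
dosage = np.linspace(0, 1000, 7)
conc = np.array([17.06, 31.89, 35.01, 34.18, 65.33, 79.27, 47.47])

# Assumed model: conc ~ slope * dosage + intercept (an affine function), fitted by
# least squares; np.polyfit returns the highest-degree coefficient first.
slope, intercept = np.polyfit(dosage, conc, deg=1)

def predict_conc(d):
    """Predicted blood concentration for dose d under the fitted affine model."""
    return slope * d + intercept

def dose_for(target_conc):
    """Invert the affine model; only meaningful because the fitted slope is nonzero."""
    return (target_conc - intercept) / slope

print(f"slope = {slope:.4f}, intercept = {intercept:.2f}")
print(f"dose for a target concentration of 50: {dose_for(50.0):.1f}")
```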

Univariate vector-valued function $f(x)$

\begin{align} f:\mathbb R &\rightarrow \mathbb R^m \end{align}

$$ f(x) = \begin{pmatrix} f_1(x) \\ f_2(x) \\ \vdots \\ f_m(x) \end{pmatrix} $$

- $x$ is a scalar

- $f(x)$ is a vector

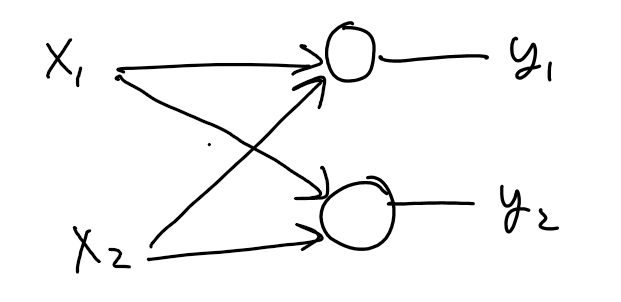
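As a concrete example (chosen for illustration, not taken from the figure), $f(t) = (\cos t, \sin t, t)$ maps a scalar to a vector in $\mathbb R^3$ and traces a helix as $t$ varies:

```python
import numpy as np

def f(t):
    """Example f: R -> R^3, f(t) = (cos t, sin t, t); each component f_i is a scalar function of t."""
    return np.array([np.cos(t), np.sin(t), t])

print(f(0.0))  # [1. 0. 0.]
```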

Multivariate vector-valued function

\begin{align} f:\mathbb R^n &\rightarrow \mathbb R^m \end{align}

$$ f(x) = \begin{pmatrix} f_1(x_1,x_2,...,x_n) \\ f_2(x_1,x_2,...,x_n) \\ \vdots \\ f_m(x_1,x_2,...,x_n) \end{pmatrix} $$

III. Function compositions

Exercise: make two Python functions to implement the following function composition

- $h(x) = g(f(x))$

- $f(x) = \exp(x)$

- $g(y) = y^2$

Compute $h(4)$
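A sketch of the exercise using Python's standard `math` module. Since $h(x) = (e^x)^2 = e^{2x}$, we expect $h(4) = e^8 \approx 2980.96$.

```python
import math

def f(x):
    return math.exp(x)  # inner function f(x) = exp(x)

def g(y):
    return y ** 2       # outer function g(y) = y^2

def h(x):
    return g(f(x))      # composition: apply f first, then g

print(h(4))  # exp(4)^2 = exp(8), approximately 2980.958
```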

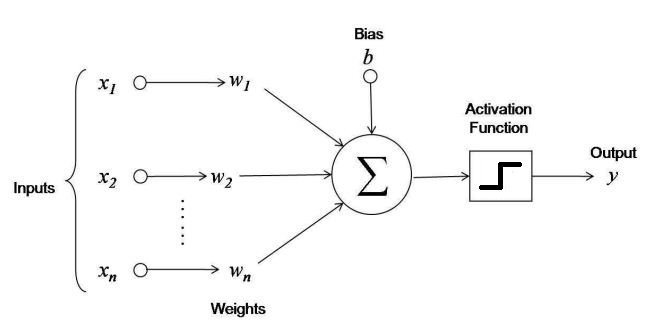

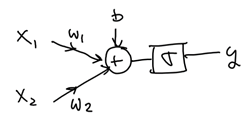

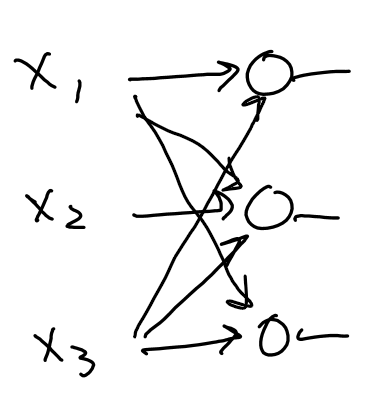

Example: Perceptron Function

- an input vector of some size

- weights, one per input entry

- an activation function

Describe the perceptron as a composition of simple functions.
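One way to write the perceptron as a composition: an affine function $z = w^Tx + b$ followed by the step activation, $y = \text{step}(w^Tx + b)$. The bias $b$ and the step convention at $z=0$ are assumptions added here; with $b=0$ the inner part is just the inner product function. The example weights, which make this perceptron compute logical AND on binary inputs, are hypothetical.

```python
import numpy as np

def affine(x, w, b):
    return w @ x + b                  # weighted sum of the inputs plus an offset

def step(z):
    return 1.0 if z >= 0 else 0.0     # assumed convention: step(0) = 1

def perceptron(x, w, b):
    # Composition: the activation applied to the affine function, y = step(w^T x + b).
    return step(affine(x, w, b))

# Hypothetical parameters: with w = (1, 1) and b = -1.5 the perceptron computes AND.
w, b = np.array([1.0, 1.0]), -1.5
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, perceptron(np.array(x, dtype=float), w, b))
```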

Consider the output regions for this network (given parameters):

What values can $y$ take, and for which inputs is each value taken?

What is the function?

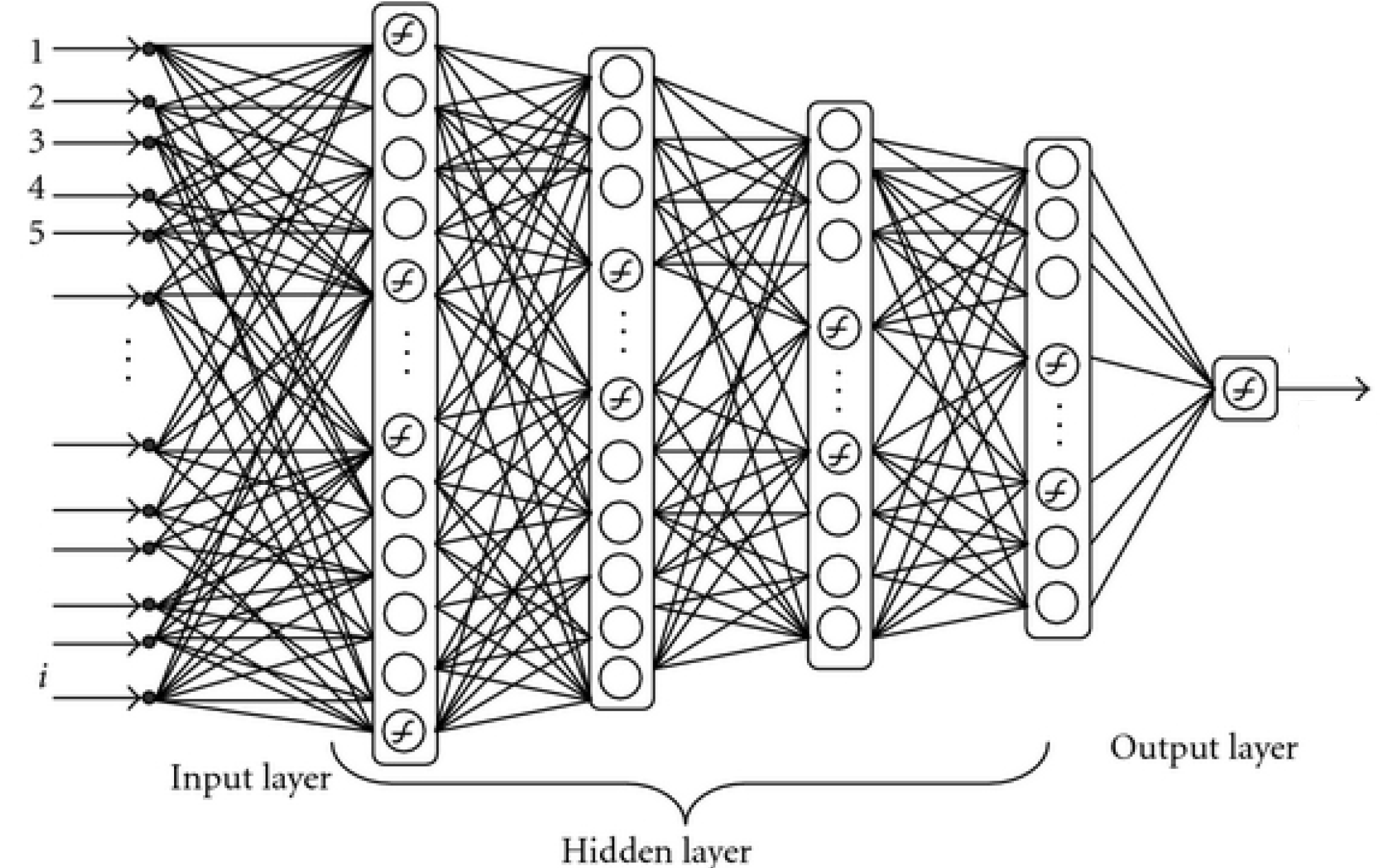

Example: Deep Neural Network

- Make a function for a single neuron

- Make a function for a layer

- Make a composition of layers
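A sketch of the three steps in NumPy, assuming ReLU activations in every layer (many networks instead leave the last layer linear) and hypothetical layer sizes 3 → 4 → 2 with random weights. A layer is written as `act(W @ x + b)`, which is the same as stacking one neuron per row of `W`.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def neuron(x, w, b, act=relu):
    """Single neuron: an activation applied to an affine function of the input vector."""
    return act(w @ x + b)

def layer(x, W, b, act=relu):
    """A layer is many neurons side by side: row i of W and entry i of b define neuron i."""
    return act(W @ x + b)

def network(x, params, act=relu):
    """Compose layers: the output of each layer becomes the input of the next."""
    for W, b in params:
        x = layer(x, W, b, act)
    return x

# Hypothetical sizes 3 -> 4 -> 2 with random weights, for illustration only.
rng = np.random.default_rng(0)
params = [(rng.normal(size=(4, 3)), rng.normal(size=4)),
          (rng.normal(size=(2, 4)), rng.normal(size=2))]
x = np.array([1.0, -2.0, 0.5])
y = network(x, params)
print(y.shape)  # (2,)
```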